Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 54-64 |
| Gender | Male, 100% |
| Sad | 82.7% |
| Calm | 6.3% |
| Disgusted | 4.5% |
| Confused | 3.3% |
| Angry | 1.2% |
| Fear | 0.9% |
| Surprised | 0.8% |
| Happy | 0.3% |

Feature analysis

Amazon

AWS Rekognition

| Feature | Confidence |
|---|---|
| Person | 99.7% |

Categories

Imagga

Created on 2022-01-09

| Category | Confidence |
|---|---|
| streetview architecture | 70% |
| paintings art | 26.6% |
| interior objects | 1.1% |

Captions

Microsoft

Created by unknown on 2022-01-09

| Caption | Confidence |
|---|---|
| a man standing next to a window | 77.8% |
| a man standing in front of a window | 77.7% |
| a man and a woman standing in front of a window | 60.5% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-13

photograph of a couple in their living room.

Salesforce

Created by general-english-image-caption-blip on 2025-05-18

a photograph of a man in a suit and tie is standing in front of a table with glasses

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-17

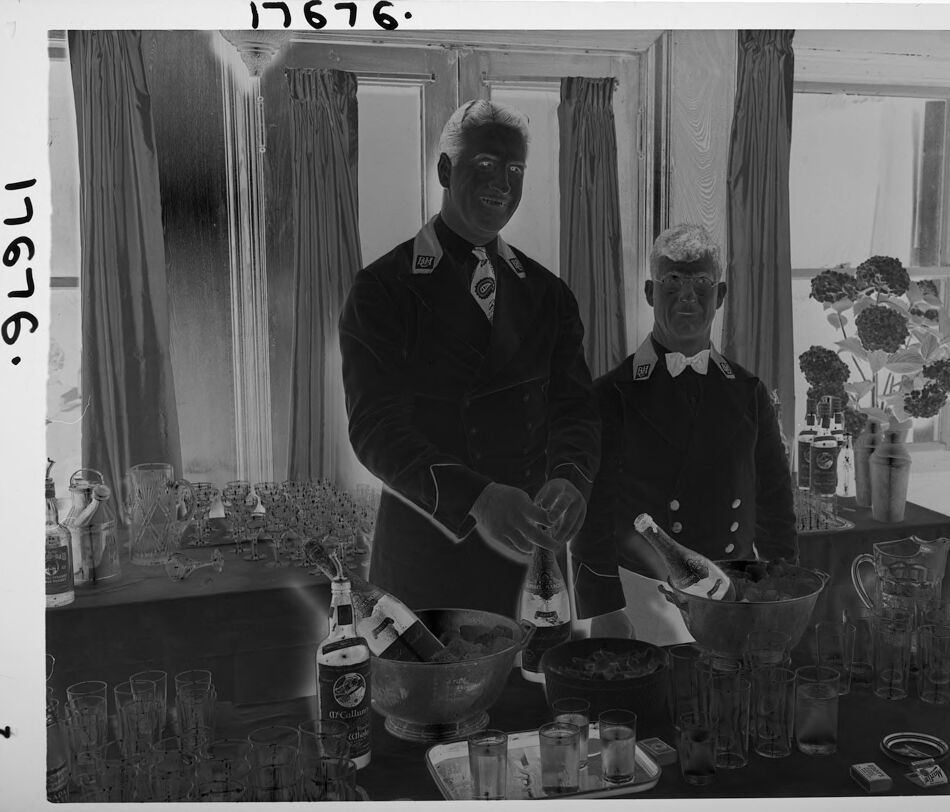

The image depicts two people dressed in formal attire, including suits with insignia, standing behind a table set up with drinks and barware. The table holds various glasses, bottles (including labeled wine or champagne bottles), and ice buckets. In the background, there are windows with curtains and a floral arrangement in a vase. The scene suggests a catering or bar service setting, likely in preparation for an event or gathering.

Created by gpt-4o-2024-08-06 on 2025-06-17

The image is a black-and-white photograph featuring two people standing behind a table set for a party or gathering. They are dressed in formal uniforms with jackets that have epaulettes, and one wears a bow tie. The table in front of them is covered with various items typically associated with a beverage service: two large bowls with bottles partially submerged in ice, several empty glasses, and two beer bottles. To the side, there are multiple glass and bottle containers, including a visible label that has the text "Grolsch" on it, which is a brand of Dutch beer. In the background, a window with curtained panes shows pots of flowers placed on the sill.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-19

The image shows two men in formal attire, likely waiters or servers, standing in what appears to be a dining or catering setting. The room is filled with various glassware, dishes, and other serving items. The men are standing behind a table or counter, suggesting they are preparing or serving food and drinks. The image has a black and white, vintage aesthetic, indicating it was likely taken some time in the past.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-19

This is a black and white photograph showing two bartenders or servers working at what appears to be a drink station or bar. They are wearing formal uniforms with bow ties and appear to be preparing drinks. On the counter in front of them are numerous glasses, punch bowls, and bottles. There's also what looks like a vase with flowers in the background near a window with curtains. The image has a vintage quality to it, likely from several decades ago. The setup suggests this might be from a formal event or gathering where drinks were being served.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-09

The image is a black-and-white photograph of two men standing behind a bar, surrounded by various bottles and glasses. The man on the left has short hair and is wearing a dark jacket with a white bow tie and a name tag on his lapel. He is holding a bottle of beer in his right hand. The man on the right also has short hair and is wearing a similar dark jacket with a white bow tie and a name tag on his lapel. He is holding a bottle of beer in his left hand.

In front of the men, there are several bottles of beer, glasses, and other drinks. On the table to the right, there is a vase with flowers and a few bottles of liquor. The background of the image shows a window with curtains and a doorway leading to another room.

The overall atmosphere of the image suggests that the two men are bartenders or servers at a restaurant or bar, preparing drinks for customers. The presence of the bottles and glasses, as well as the name tags on their jackets, reinforces this interpretation.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-09

This image is a black-and-white photograph of two men in uniform standing behind a table with various bottles and glasses. The man on the left has short, light-colored hair and is wearing a dark jacket with a tie and a patch on his shoulder. He is holding a bottle in his right hand and appears to be pouring it into a glass. The man on the right also has short, light-colored hair and is wearing a similar uniform. He is holding a bottle in his left hand and has a white bow tie around his neck.

The table in front of them is covered with a dark cloth and features several bottles, glasses, and other items. There are also some flowers in a vase on the right side of the table. In the background, there are windows with curtains and a doorway with a curtain. The overall atmosphere suggests that the men are working at a bar or restaurant, possibly in the 1940s or 1950s based on their uniforms and hairstyles.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-08

The image is a black-and-white photograph of two men standing behind a table, possibly in a restaurant or bar setting. The man on the left is holding a bottle of wine and pouring it into a wine glass, while the man on the right is holding a bottle of champagne and pouring it into a champagne flute. There are several bottles of wine and champagne on the table, along with glasses, a pitcher, and a bowl. Behind the men, there are two large windows with curtains, and a flower arrangement on a table in the background. The image has a watermark with the number "17676" in the top left corner.

Created by amazon.nova-pro-v1:0 on 2025-06-08

The image is a black-and-white photograph of two men standing behind a table with various items on it. The man on the left is wearing a uniform with a bow tie and holding a bottle of champagne. The man on the right is also wearing a uniform and is holding a glass. The table is filled with glasses, bottles, and other items. There is a vase with flowers on the right side of the table.

Google Gemini

Created by gemini-2.0-flash on 2025-05-17

The black and white image depicts two men dressed in formal attire, likely serving at an event. The man on the left is taller and is wearing a suit with a tie, while the man on the right is wearing a tuxedo with a bow tie. Both men have what appear to be service badges on their lapels. They are standing behind a table laden with drinks, including bottles of liquor, cocktail glasses, and ice buckets filled with what are likely champagne or wine bottles.

The table is set up in front of a window covered by curtains. There are also flowers in a vase on the table, adding a touch of elegance to the scene. The image has a grainy quality, which suggests it may be an older photograph.

The men appear to be in the middle of serving drinks, creating a sense of activity and preparation. The overall impression is one of professional service and attention to detail.

Created by gemini-2.0-flash-lite on 2025-05-17

Here is a description of the image:

The image appears to be an inverted black and white photograph, possibly from an old negative. It depicts two men, likely bartenders, standing behind a table laden with various bar accessories and drinks. They both wear formal uniforms, and one is seemingly in the act of pouring a drink from a bottle.

The background shows a window with curtains, and the setup suggests a bar or catering service for a special event. There is an assortment of glasses, bottles, ice buckets, and other items commonly found at a bar. The lighting is quite bright, and the entire scene gives off an impression of elegance and formality.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-02

The black-and-white photograph shows two men in uniform standing behind a table laden with various items, likely at a bar or a similar setting. The men are dressed in formal attire, which includes dark jackets with epaulets, white shirts, and ties. They appear to be preparing drinks or serving beverages.

On the table, there are several bottles of alcohol, including what looks like a bottle of Irish whiskey. There are also numerous glasses, including beer glasses and wine glasses, some of which are filled with beverages. Additionally, there are large bowls, possibly containing punch or ice, and a few buckets or containers that might be used for chilling bottles.

The background shows curtains and windows, suggesting that the setting is indoors, possibly in a room with natural light. There are also flower arrangements on the table, adding a decorative touch to the scene. The overall atmosphere suggests a formal or semi-formal event, possibly a reception or a party.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-24

This is a black-and-white photograph showing two individuals in a formal setting. They are dressed in what appears to be a uniform, which suggests they might be part of a service or event staff. Each individual is wearing a dark jacket with white accents and a white collar, indicating a uniformed role, possibly in a catering or hospitality setting.

The two individuals are standing behind a table that is set up with various items typically associated with a party or formal gathering. On the table, there are several glassware items, including wine glasses and tumblers, as well as bottles, which could be for wine or champagne, and a cooler or bucket for keeping drinks chilled. There are also some bowls on the table, one of which appears to be holding ice and possibly food.

In the background, there is a window with curtains, and a table with more glassware and a bouquet of flowers, adding to the formal and celebratory atmosphere of the event. The setting appears to be indoors, possibly in a banquet hall or a similar venue.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-24

This black-and-white photograph, marked with the number "17676" in the top left corner, depicts two men dressed in formal attire standing behind a table set up with various beverages and glasses. The man on the left is pouring a drink from a bottle, while the man on the right is holding a bottle of champagne in an ice bucket. The table is laden with an assortment of bottles, glasses, and other items, suggesting a festive or celebratory occasion. Behind them, a window with curtains and a vase of flowers is visible. The overall setting appears to be indoors, possibly in a lodge or cabin, given the wooden paneling and rustic decor.