Machine Generated Data

Tags

Color Analysis

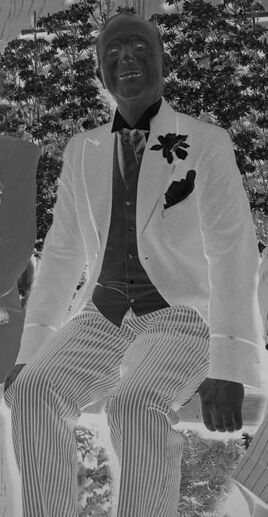

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 40-48 |
| Gender | Male, 99.7% |
| Calm | 46% |
| Happy | 22.7% |
| Sad | 20.4% |
| Disgusted | 5.5% |
| Confused | 2.4% |
| Surprised | 1.5% |
| Angry | 0.8% |
| Fear | 0.7% |

Feature analysis

Amazon

Clarifai

AWS Rekognition

| Label | Confidence |
|---|---|
| Person | 99.3% |

Categories

Imagga

Created on 2022-01-15

| Category | Confidence |
|---|---|
| streetview architecture | 49.6% |
| paintings art | 36.7% |
| pets animals | 5.7% |
| nature landscape | 1.9% |
| beaches seaside | 1.8% |
| people portraits | 1.8% |
| text visuals | 1.4% |

Captions

Microsoft

Created by unknown on 2022-01-15

| Caption | Confidence |
|---|---|
| a group of people posing for a photo | 88.7% |
| a group of people posing for the camera | 88.6% |
| a group of people posing for a picture | 88.5% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-13

photograph of politician and his wife, with their daughters.

Salesforce

Created by general-english-image-caption-blip on 2025-05-23

a photograph of a man and woman sitting on a bench in a park

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-17

This is a black-and-white negative image featuring three individuals seated in an indoor setting. The decor includes an ornate mantel adorned with vases, potted plants, and other furnishings, suggesting a formal or elegant environment. The individuals are wearing formal attire, with hats and flowers as accessories. There are intricate patterns visible on the furniture and plants placed near the subjects, adding to the refined ambiance of the image. The wall behind them features framed artwork and candles.

Created by gpt-4o-2024-08-06 on 2025-06-17

This image appears to be a negative of a formal group portrait. Three individuals are seated in an elegant interior setting, with ornate decor and lush greenery in the background. The person on the left is wearing a dress with a large hat adorned with flowers, while the two people on the right are dressed in suits. The suits feature decorative elements such as lapels and boutonnieres, with one suit displaying a striped pattern. The setting includes decorative elements like potted plants, ornate vases, tall candlesticks, and a large framed painting or mirror above the mantel. The atmosphere suggests a sense of sophistication and formality. Various markings and numbers, such as “14725,” are visible along the top and sides of the image.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-20

The image appears to be a black and white photograph of three individuals dressed in formal attire, posing together in an ornate, decorative setting. The individuals are wearing white suits with floral accents, and they appear to be smiling and posing for the camera. The background features various decorative elements, including curtains, mirrors, and potted plants, creating an elegant and opulent atmosphere.

Created by us.anthropic.claude-3-opus-20240229-v1:0 on 2025-06-20

The black and white photograph shows three smiling African American men dressed in formal white suits and black ties, sitting together in an ornately decorated room. Behind them are large framed portraits on the walls, decorative molding, and hanging plants or vines, giving the space an elegant, stately appearance. The men look happy and are posing together for the photograph, likely at some kind of formal event or celebration based on their attire and the grand setting.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-20

This appears to be a black and white vintage photograph from around the 1920s or 1930s. In the image, there are several people wearing light-colored suits or jackets, likely white or cream colored, with pinstripes. The setting appears to be an interior space with decorative elements visible in the background, including what looks like a mantelpiece or shelf with vases and decorative items. There's also what appears to be a potted plant or floral arrangement visible in the frame. The image has some damage or deterioration visible, which is common in photographs of this age.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-03

The image is a black and white photograph of three people sitting in front of a fireplace, with a plant and a lamp in the background. The photo appears to be from the 1920s or 1930s, based on the clothing and hairstyles of the individuals.

- Three people sitting in front of a fireplace:
  - The person on the left is wearing a hat and a long coat, and has their hands clasped together in their lap.
  - The person in the middle is wearing a suit and tie, and has a flower in their lapel.
  - The person on the right is wearing a suit and tie, and has his hands clasped together in front of him.
- A plant and a lamp in the background:
  - There is a large plant with dark green leaves behind the three people.
  - There is a lamp with a dark shade on a table to the left of the plant.
- A fireplace with a mantle:
  - The fireplace is decorated with a mantle that has several objects on it, including a vase, a picture frame, and a clock.
  - The fireplace itself is not visible, but it appears to be a large stone or brick structure.
- A wall with a window:
  - The wall behind the fireplace has a window with curtains that are pulled back.
  - The window appears to be a large, multi-paned window with a decorative frame.

Overall, the image suggests that the three people are posing for a formal portrait in a living room or parlor. The fireplace and mantle add a sense of warmth and coziness to the scene, while the plant and lamp provide a touch of elegance.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-03

The image is a black and white photograph of three people posing for a picture in a living room. The individuals are dressed in formal attire, with the woman on the left wearing a hat and a dress, while the two men on the right are wearing suits. They are seated in front of a fireplace, surrounded by plants and decorative items.

The overall atmosphere of the image suggests a formal gathering or event, possibly a wedding or a family portrait. The use of formal attire and the presence of a fireplace and plants create a sense of warmth and elegance, while the black and white tone gives the image a classic and timeless feel.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-06-02

The monochromatic image shows three people sitting on a bench in front of a fireplace. Two men are wearing white suits with a flower on their chests, and a woman is wearing a dress with a hat. A potted plant is placed on either side of the people. A frame is mounted on the wall above the fireplace. A lamp is placed on the left side of the fireplace.

Created by amazon.nova-lite-v1:0 on 2025-06-02

The image is a black-and-white photograph featuring three individuals dressed in formal attire, seated on a bench. They are surrounded by plants and a fireplace, with a lamp and picture frame in the background. The photograph has a white border and the numbers "14725" and "14725" are written on the left and right sides of the image, respectively. The individuals are smiling and appear to be posing for the camera.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-18

Here's a description of the image:

Overall Impression: The image is a black and white negative of a posed photograph. Three people are seated together in what appears to be a well-decorated indoor space. The image has a vintage feel, likely from the early to mid-20th century.

People:

- Left: A woman is seated. She wears a hat, a long dress, and a floral accessory, possibly a corsage. She has her hands in her lap.

- Middle: A man is seated. He's wearing a suit, a tie with a floral accessory.

- Right: A man is seated. He's wearing a pinstriped suit. His hands are clasped in his lap.

Setting:

- The background features an ornate fireplace mantelpiece adorned with decorative items, including what might be a vase or urn, a framed picture, and possibly small potted plants.

- Potted plants are visible, arranged around the seated people.

Style & Atmosphere:

- The photograph appears to be formal, likely taken on a special occasion or for a portrait.

- The overall aesthetic is classic and elegant, reflecting the fashion and decor of the period.

Additional details:

- The numbers "14725" are written in the top-left and left side of the frame.

- The image seems to be a negative image, meaning the colors are inverted, so everything appears light instead of dark.

Created by gemini-2.0-flash on 2025-05-18

Here is a description of the image:

The image appears to be a black and white negative of a photograph. It depicts three people sitting on a bench in what looks like a formal setting. There is a woman on the left, wearing a hat, dress, and a scarf with a flower pin. In the middle is a man in a suit, vest, and tie. To his right is another man in a pinstripe suit, tie, and pocket square, with his hands clasped together.

Behind the people is a decorated mantelpiece. There are vases, picture frames, and plants on top of the mantel. Potted plants surround the bench, adding to the formal or celebratory atmosphere.

The number "14725" is written on the top and side of the image, likely a catalog or identification number.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-03

The image is a black-and-white photograph featuring three men dressed in formal attire, standing in front of a decorated backdrop. They appear to be part of a performance or a staged event. Here are some detailed observations:

Attire:

- The man on the left is wearing a white suit with a patterned vest and a wide-brimmed hat adorned with flowers. He also has a flower on his lapel.

- The man in the center is dressed in a white suit with a dark vest and a bow tie. He has a flower pinned to his lapel and is wearing a hat with a flower.

- The man on the right is wearing a white pinstripe suit with a dark tie and a flower on his lapel. He is also wearing a hat with a flower.

Accessories:

- All three men are wearing gloves.

- They each have a flower pinned to their lapels, which matches the floral theme of their hats and the backdrop.

Backdrop:

- The backdrop includes large potted plants with flowers, adding to the festive atmosphere.

- There are decorative elements such as a framed mirror and other ornamental items on a shelf behind them.

Setting:

- The setting appears to be indoors, possibly a stage or a decorated room.

- The overall ambiance suggests a celebratory or theatrical event.

The image has a vintage feel, possibly from the mid-20th century, given the style of clothing and the black-and-white photography. The men's poses and attire indicate they might be performers or participants in a special event.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-24

The image is a black-and-white photograph that appears to be from the mid-20th century, given its style and quality. It shows three individuals seated in a formal setting, likely a room decorated for a special occasion or event. The room is adorned with ornate decorations, including flowers in vases and framed artwork on the wall.

- The person on the left is a woman wearing a formal outfit, including a hat decorated with flowers and a matching coat. She has a sophisticated look.

- The central figure is a man dressed in a suit with a striped pattern, seated in a relaxed but formal pose. He is holding a glass in his hand.

- The person on the right is also a man, dressed in a similar striped suit, sitting with his hands clasped in front of him.

The setting suggests a formal event or gathering, possibly a social or ceremonial occasion. The overall atmosphere appears festive and elegant.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-24

This is a black-and-white photograph of three individuals, two men and one woman, dressed in formal attire. They are seated on a stone or marble bench. The woman on the left is wearing a light-colored suit with a large hat and a flower accessory. The man in the middle is dressed in a light-colored pinstripe suit with a flower on his lapel, and the man on the right is wearing a light-colored suit with a dark tie. Behind them, there are ornate decorations, including plants and a fireplace. The setting appears to be an elegant indoor environment, possibly a formal event or gathering. The image has a vintage feel, suggesting it might be from an earlier time period. There are numbers and markings on the image, possibly indicating it is a photograph from an archive or collection.