Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 30-40 |
| Gender | Male, 64.3% |
| Calm | 99.9% |
| Happy | 0% |
| Sad | 0% |
| Confused | 0% |
| Disgusted | 0% |
| Surprised | 0% |
| Fear | 0% |
| Angry | 0% |

Feature analysis

Amazon

AWS Rekognition

| Feature | Confidence |
|---|---|
| Person | 99.6% |

Categories

Imagga

Created on 2022-01-15

| Category | Confidence |
|---|---|
| streetview architecture | 73.4% |
| paintings art | 12.8% |
| interior objects | 7.3% |
| nature landscape | 4.9% |

Captions

Microsoft

Created by unknown on 2022-01-15

| Caption | Confidence |
|---|---|
| text | 60.2% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-15

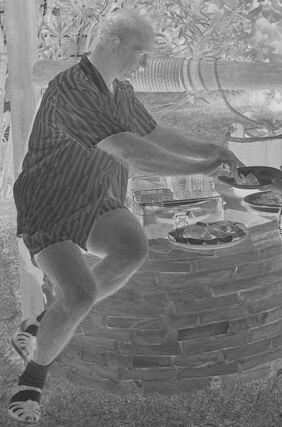

black and white photograph of a family cooking in a barbecue.

Salesforce

Created by general-english-image-caption-blip on 2025-05-05

a photograph of a man and a woman are standing in front of a table with a table cloth

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-11

The image appears to be a negative of a social gathering outdoors. A table covered with a cloth is set up in the foreground, topped with various dishes, bowls, and desserts, suggesting a meal or celebration. A man and a child are interacting near a structure resembling a stone well or a grill. In the background, a group of people is seated on chairs, possibly enjoying the event. The setting includes trees and a grassy area, indicating it takes place in a garden or park.

Created by gpt-4o-2024-08-06 on 2025-06-11

The image is a negative photograph depicting a social gathering or picnic scene outdoors. In the foreground, there is a table covered with a cloth, upon which various dishes and food items are placed. To the left, a man in striped clothing is kneeling near a brick structure that resembles a wishing well, interacting with a child who is holding a plate. The gathering appears to be taking place in a grassy area with trees and foliage in the background. In the mid-ground, several people are seated in a semi-circle, suggesting a communal, relaxed atmosphere. The vegetation includes a mix of leafy trees and possibly palm-like plants, indicating a setting with diverse flora.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-16

The image appears to depict an outdoor gathering or event. There is a table set up with various food items and dishes, suggesting a meal or refreshments being served. Several people can be seen seated around the table, seemingly engaged in conversation or enjoying the food. The background features lush vegetation, including palm trees, creating a tropical or garden-like setting. The overall scene conveys a sense of community and social interaction taking place in an outdoor, natural environment.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-16

This appears to be a vintage black and white photograph of an outdoor gathering or party. There's a covered serving area or buffet table set up with what looks like various dishes and plates of food laid out. Several people are gathered around the table, appearing to be serving or getting food. The setting seems to be in a yard or outdoor space with some foliage visible in the background. The image has a casual, informal feel typical of mid-20th century social gatherings. The lighting and contrast in the photo gives it a distinctive period look, and there's a number visible in the corner that might be a date or photo identification number (4-1211 4).

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-10

The image depicts a black-and-white photograph of a group of people gathered in a backyard setting, likely during a social event or celebration.

Key Elements:

- Setting: The scene is set in a backyard, with a grassy area and trees visible in the background.

- Table and Food: A table is positioned in the foreground, covered with a dark-colored tablecloth. Various dishes and glasses are arranged on the table, suggesting a meal or snack is being served.

- People: Several individuals are seated around the table, while others stand nearby. They appear to be engaged in conversation and enjoying each other's company.

- Atmosphere: The overall atmosphere of the image conveys a sense of warmth and camaraderie among the group.

Summary:

The image captures a joyful moment of social interaction and community bonding, with the group gathered around the table and enjoying each other's company in a relaxed outdoor setting.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-10

This image depicts a black-and-white photograph of a backyard barbecue scene. The central focus is a table covered with a dark tablecloth, adorned with various dishes and plates. A man, dressed in shorts and a striped shirt, is seen serving food from a grill situated to the left of the table. On the right side of the table, a woman stands, wearing a dress and an apron.

In the background, several individuals are seated on benches, while trees and bushes surround the area. The overall atmosphere suggests a casual outdoor gathering or celebration.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-05-30

A black-and-white photo shows a group of people gathered around a table and a grill, possibly for a barbecue party. The man on the left is wearing slippers and is leaning over the grill. The woman on the right is wearing a necklace and is standing next to a table covered with a tablecloth. There are several people sitting on chairs behind them. The area is surrounded by trees.

Created by amazon.nova-lite-v1:0 on 2025-05-30

The image is a black-and-white photograph depicting a family gathering or picnic in a backyard. The setting is outdoors, with a table covered in a dark cloth and laden with various food items. A woman stands near the table, possibly overseeing the meal preparations or serving food. A man is seen stepping onto a brick structure, possibly a barbecue grill or a makeshift oven, to cook food. Several people are seated on the grass around the table, enjoying the meal. The image conveys a sense of togetherness and family bonding during a casual outdoor gathering.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-05

Here's a description of the image:

The image is a black and white, negative photograph capturing a group of people gathered for what appears to be a picnic or outdoor meal.

Here's a breakdown of the scene:

- Foreground: A man, presumably the host or cook, is seen in the foreground. He's positioned near what looks like a well-shaped structure. He is interacting with the well and appears to be serving food on a plate. A young girl is standing next to the man. A large table laden with food and drinks dominates the center. The table is covered with a dark cloth and holds dishes, plates, and various food items.

- Midground: Several people are seated on a bench or chairs, seemingly enjoying the gathering. They are positioned behind the table.

- Background: A lush, possibly wooded, area forms the backdrop, adding depth to the scene.

- Overall Impression: The photograph exudes a sense of a relaxed, social gathering, potentially a family picnic or a community event. The composition suggests a casual, intimate moment captured in time. The style of the photograph and clothing worn by the people suggests the image was taken some time ago.

Created by gemini-2.0-flash on 2025-05-05

Here is a description of the image:

This is a black and white negative of a photograph depicting a group of people gathered for what appears to be an outdoor meal or gathering.

In the foreground, a man is leaning over what looks like a well. He's wearing a collared shirt and shorts. Beside him, a child is holding a plate, possibly receiving food from the man.

Behind them, there is a table spread with a variety of dishes. Several people are seated on a bench in the background, watching or conversing. A woman is standing near the table, possibly serving or supervising the arrangement.

The setting seems to be a backyard or garden area, with trees and foliage visible in the background. The overall atmosphere suggests a relaxed, communal event.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-06

The image depicts a group of people gathered outdoors for a social event, likely a barbecue or picnic. The setting appears to be a backyard or garden with lush greenery and trees in the background.

Key details include:

Foreground:

- A man in a striped shirt is seated on a brick structure, possibly a barbecue grill, and appears to be cooking or preparing food.

- A woman standing next to him is holding a plate of food.

- A table covered with a dark tablecloth is laden with various dishes, bowls, and utensils.

Background:

- Several people are seated on a bench or chairs, engaged in conversation and enjoying the meal.

- The background includes a garden with various plants and trees, and a structure with a slanted roof, possibly a shed or a small pavilion.

- The atmosphere seems relaxed and convivial, typical of a casual outdoor gathering.

Additional Notes:

- The photograph is in black and white, giving it a vintage feel.

- The image has a number "41211 4" written at the bottom, which might be a reference or catalog number.

Overall, the image captures a moment of social interaction and enjoyment in a pleasant outdoor setting.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-11

This is a black-and-white photograph depicting an outdoor gathering, likely a family or community event. The setting appears to be at night, as the sky is dark. The scene is illuminated by artificial lighting, which highlights the people and objects in the foreground.

In the foreground, there is a table covered with a dark cloth, laden with various dishes and food items, suggesting a buffet or serving line for the gathering. A woman on the right side of the table stands near the food, possibly a server or organizer, holding a tray with food. There are several plates and bowls on the table, indicating that food is being served.

On the left side of the table, a man is serving himself food from a dish placed on the table. Another woman is standing near him, also serving herself. The background shows a group of people sitting on a bench or low wall, engaged in conversation. The area is surrounded by vegetation, including trees and a palm tree, adding to the outdoor ambiance. The setting gives the impression of a relaxed, social event, possibly a casual party or a community gathering.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-11

This is a black-and-white image of a group of people gathered outdoors for what appears to be a barbecue or a picnic. The scene is set at night, as indicated by the dark sky and the artificial lighting.

- On the left side of the image, a man is standing next to a brick barbecue grill, serving food onto a plate held by a child.

- In the center, a group of people is seated on a bench under a wooden pavilion, watching the man serve the food. They appear to be enjoying the gathering and are dressed in casual summer attire.

- On the right side, a woman is standing next to a table covered with a tablecloth. The table is set with various dishes, including cakes, bowls, and cups, suggesting that this is a meal or a celebration.

- The background features some trees and a palm tree, indicating that the location might be in a warm climate.

- The overall atmosphere of the image is relaxed and social, with people enjoying each other's company and a shared meal.