Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 40-48 |
| Gender | Male, 97.5% |
| Calm | 98.7% |
| Sad | 0.7% |
| Angry | 0.2% |
| Confused | 0.1% |
| Disgusted | 0.1% |
| Surprised | 0.1% |
| Happy | 0.1% |
| Fear | 0% |

Feature analysis

Amazon

Clarifai

AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Person | 96.1% |

Categories

Imagga

created on 2022-01-09

| Category | Confidence |
| --- | --- |
| streetview architecture | 84.9% |
| paintings art | 6.5% |
| beaches seaside | 5.4% |
| people portraits | 1.7% |

Captions

Microsoft

created by unknown on 2022-01-09

| Caption | Confidence |
| --- | --- |
| a group of people posing for a photo | 88% |
| a group of people posing for a photo in front of a crowd | 87.5% |
| a group of people posing for the camera | 87.4% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-12

a group of people gather around a table to eat lunch.

Salesforce

Created by general-english-image-caption-blip on 2025-05-22

a photograph of a group of people standing around a table with food

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-17

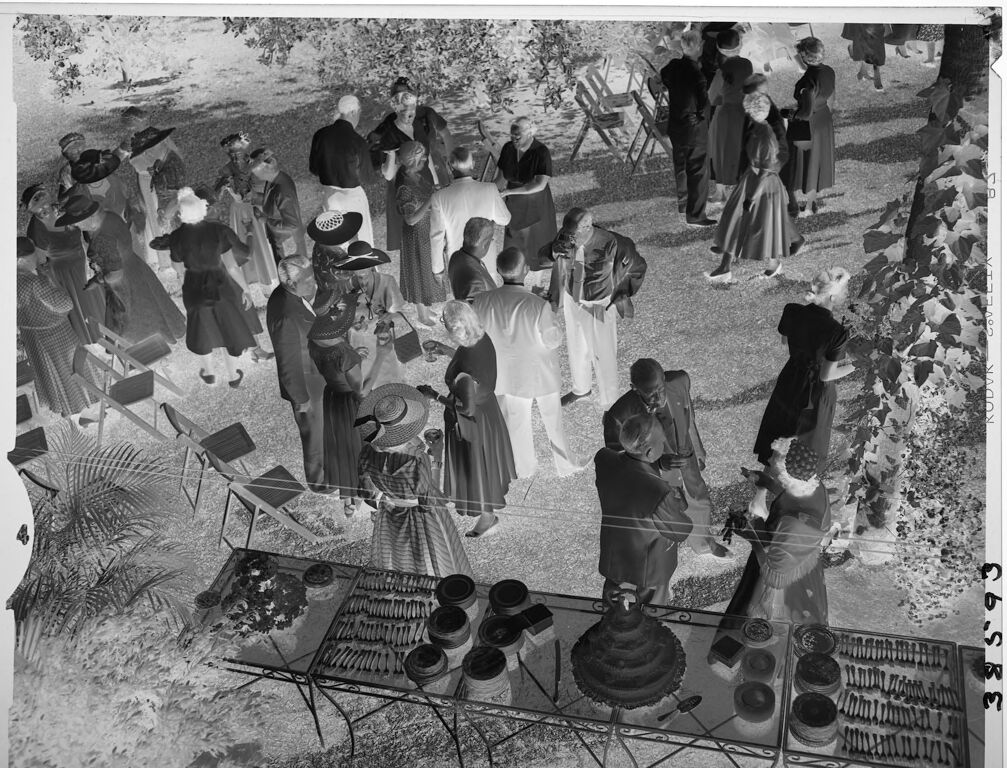

This is a black-and-white negative photograph of an outdoor gathering. The image shows a group of people dressed in formal attire, possibly attending an event or a party in a garden or park setting. Most individuals are engaged in conversation, standing in small groups scattered across the area. There are chairs arranged nearby, some occupied and others empty.

In the foreground, there is a table with various items displayed, which could be food or other offerings (though the negative format makes the details harder to discern). A layered cake or decorative centerpiece is prominent on the table, surrounded by plates, utensils, and other objects.

The scene is shaded by trees, and their foliage is visible at the edges of the image. The overall composition suggests an elegant social gathering, perhaps in the mid-20th century based on the clothing styles.

Created by gpt-4o-2024-08-06 on 2025-06-17

The image is an inverted photograph, possibly a negative, of an outdoor gathering. It depicts a group of people socializing at what appears to be a formal event, such as a garden party. The individuals are dressed in formal attire; the women are wearing dresses and hats, while the men are in suits. The setting includes a setup of tables that display various items, possibly food or drink, although the details are difficult to discern due to the negative format. The scene takes place in a garden area, as suggested by the trees, plants, and natural surroundings visible in the image. The chairs positioned around suggest it might be a sitting area for the guests. The overall atmosphere suggests a lively social interaction amidst a well-dressed crowd.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-19

The image appears to depict an outdoor social gathering or event in a garden setting. There are numerous people present, dressed in a variety of clothing styles from what seems to be an earlier historical period. The people are gathered around tables and chairs, and there are various items and decorations visible, such as umbrellas, hats, and what looks like some kind of display or exhibit. The overall scene conveys a sense of a lively social occasion taking place in an outdoor, garden-like environment.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-19

This is a black and white photograph taken from an elevated angle, showing what appears to be a social gathering or event outdoors. In the foreground, there are tables displaying what look like hats and other items for sale or display. The crowd consists of people dressed in 1940s or 1950s style clothing - the women are wearing long skirts and dresses, and the men are in suits. The scene takes place on what appears to be a lawn or garden area, with some trees or foliage visible around the edges of the frame. The lighting suggests it's during daytime, and the gathering seems to be some sort of formal or semi-formal outdoor event, possibly a church social, garden party, or community gathering.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-10

The image depicts a black-and-white photograph of a gathering of people in a park or garden setting. The scene is captured from an aerial perspective, looking down on the group.

People:

- Approximately 30 individuals are present, dressed in formal attire, with men wearing suits and women wearing dresses and hats.

- Some people are standing in small groups, while others are walking or mingling.

- A few individuals are positioned near the center of the image, while others are scattered throughout the scene.

Setting:

- The gathering appears to be taking place in a park or garden, with trees and foliage visible in the background.

- The ground is covered with grass or a similar surface.

- A few chairs and tables are scattered throughout the area, suggesting that the gathering may be a formal event or party.

Other Details:

- The image has a vintage or antique feel to it, suggesting that it may have been taken in the early 20th century.

- The photograph is framed by a white border, with some handwritten notes or markings visible along the edges.

Overall, the image captures a moment in time of a social gathering in a park or garden setting, with a focus on the people and their interactions.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-10

The image depicts a black-and-white photograph of a gathering of people in an outdoor setting, likely a garden or park. The scene is captured from an aerial perspective, with the camera positioned above the subjects.

In the foreground, a table is visible, adorned with various items such as plates, cups, and what appears to be a cake or other desserts. The table is surrounded by several individuals, some of whom are standing while others are seated on chairs. The people in the image are dressed in formal attire, with men wearing suits and women wearing dresses and hats.

The background of the image features trees and grass, suggesting that the gathering is taking place in a natural setting. The overall atmosphere of the image appears to be one of celebration or socializing, with the presence of food and drinks indicating that the event may be a party or reception.

It's worth noting that the image has been edited or manipulated in some way, as evidenced by the presence of lines and marks throughout the photo. These edits may have been made to enhance the image or to remove certain elements, but they do not detract from the overall clarity and detail of the photograph.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-08

The image is a black-and-white photograph depicting a social gathering or event. The scene is set outdoors, with a variety of people gathered in a spacious area. The group appears to be engaged in conversation, with some individuals standing and others seated on chairs. The setting includes a table with various items, possibly food or beverages, suggesting a casual or celebratory atmosphere. The photograph captures a moment of interaction and social engagement among the attendees.

Created by amazon.nova-pro-v1:0 on 2025-06-08

A black and white photo of an outdoor party. Several people are gathered, some are standing, and some are sitting. Some of them are wearing hats, while others are wearing shoes. There are chairs and tables around the area. A table with a cake and other items is placed in front of the people. There are trees and plants on the side.

Google Gemini

Created by gemini-2.0-flash on 2025-05-17

Here is a description of the image:

This is a black and white image that appears to show a gathering or social event. The image is taken from an elevated perspective, looking down on a group of people standing on a lawn. Many of the people in the image are dressed in what appear to be formal or semi-formal attire, and some of the women are wearing hats.

In the foreground, there is a table laden with various items, which might be food or other party supplies. There are also some plants and foliage visible in the lower left corner of the image. In the background, more people are standing and mingling, with some chairs set out as well. The scene overall suggests a wedding or a similar celebration. The image also appears to be a negative, as the tones are inverted.

Created by gemini-2.0-flash-lite on 2025-05-17

This black and white photograph captures a gathering of people, possibly a garden party or outdoor reception. The image is taken from a high angle, providing a broad view of the scene.

Here are the key observations:

- People: Numerous individuals are present, dressed in attire that suggests a formal or semi-formal event. Hats, dresses, suits, and coats are prominent. The composition emphasizes the variety in the people's attire.

- Setting: The event appears to be taking place outdoors, likely in a garden. There's lush greenery, including what seem to be plants and trees, surrounding the attendees.

- Table with items: A table is central in the foreground, with a variety of items set on it. This could be a display of food, decorations, or serving items. The presence of a cake suggests a celebration.

- Arrangement: There are several chairs placed around, hinting at a space for seating and socializing.

- Ambience: The overall tone suggests a relaxed, yet organized social occasion.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-05

This black-and-white photograph captures a lively outdoor social event, likely from the mid-20th century, given the style of clothing and overall ambiance. The scene is set in a garden or park-like area with grass and trees, suggesting a pleasant day.

In the foreground, there are several tables laden with food and beverages. The tables are covered with white cloths and are filled with an assortment of dishes, including what appears to be cakes, pastries, and other refreshments. There are also some large, ornate cakes or desserts prominently displayed.

The attendees, dressed in formal attire typical of the period, are engaged in conversation and mingling. Men are wearing suits and hats, while women are dressed in elegant dresses and hats, indicative of a semi-formal or formal gathering. Some individuals are seen holding plates and serving themselves from the tables, while others are standing in small groups, chatting.

The overall atmosphere is one of conviviality and social interaction, with people enjoying each other's company in a relaxed outdoor setting. The photograph likely captures a special occasion, such as a garden party, reception, or community event.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-04

The image is a black-and-white photograph depicting a social gathering outdoors. It appears to be a formal event, possibly a garden party or a reception, held during the mid-20th century.

Setting and Atmosphere: The scene is set in a garden with grass and some trees in the background, creating a serene and elegant outdoor setting. There are chairs arranged around a central table that holds various food and drink items, suggesting a catered event. The lighting suggests that the photo was taken during the evening, as the scene is illuminated by artificial light.

Attire: The attendees are dressed in formal attire typical of the 1940s or 1950s. Women are seen wearing long dresses and hats, while men are in suits and ties. The clothing reflects the fashion trends of the time.

Activity: The guests are gathered around the central table, engaging in conversation and socializing. Some are standing, while others are seated on the chairs nearby. The overall mood appears to be relaxed and convivial.

Details: The table is laden with dishes, plates, glasses, and cutlery, indicating that the event is a sit-down meal. The table setup is meticulous, with utensils arranged neatly, and the presence of what looks like a cake or dessert suggests that the gathering is a celebration.

The photograph captures the essence of a mid-20th-century social occasion, emphasizing the importance of community, elegance, and formal attire in such gatherings.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-04

This black and white image captures a lively outdoor gathering, possibly a party or celebration, likely from the mid-20th century based on the fashion and style. The scene is bustling with people dressed in formal attire. Women are wearing dresses with various patterns and hairstyles, some adorned with hats, while men are in suits and ties. The gathering is set in a garden with trees providing shade and scattered leaves on the ground, indicating a fall or autumn season.

A prominent feature in the foreground is a table laden with an assortment of food and drink. There are bowls, cups, and what appear to be small cakes or pastries arranged on a tiered stand. The table is surrounded by people, some of whom are engaged in conversation, while others are serving themselves food. The atmosphere appears convivial and festive, with a mix of socializing and dining. The image captures a moment of communal enjoyment and interaction, evoking a sense of nostalgia for a bygone era.