Machine Generated Data

Face analysis

AWS Rekognition

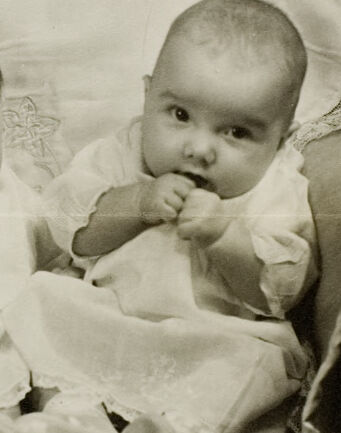

| Attribute | Value |
| --- | --- |
| Age | 0-3 |
| Gender | Female, 99.9% |
| Angry | 76.2% |
| Calm | 10.2% |
| Surprised | 5.6% |
| Disgusted | 2.6% |
| Sad | 2.1% |
| Fear | 1.8% |
| Confused | 1.1% |
| Happy | 0.4% |

Feature analysis

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Person | 94.6% |

Categories

Imagga

created on 2022-01-22

| Category | Confidence |
| --- | --- |
| people portraits | 99.8% |

Captions

Microsoft

created by unknown on 2022-01-22

| Caption | Confidence |
| --- | --- |
| a person holding a baby | 89.9% |
| a person holding a baby | 89.6% |
| a person holding a baby | 80.5% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-14

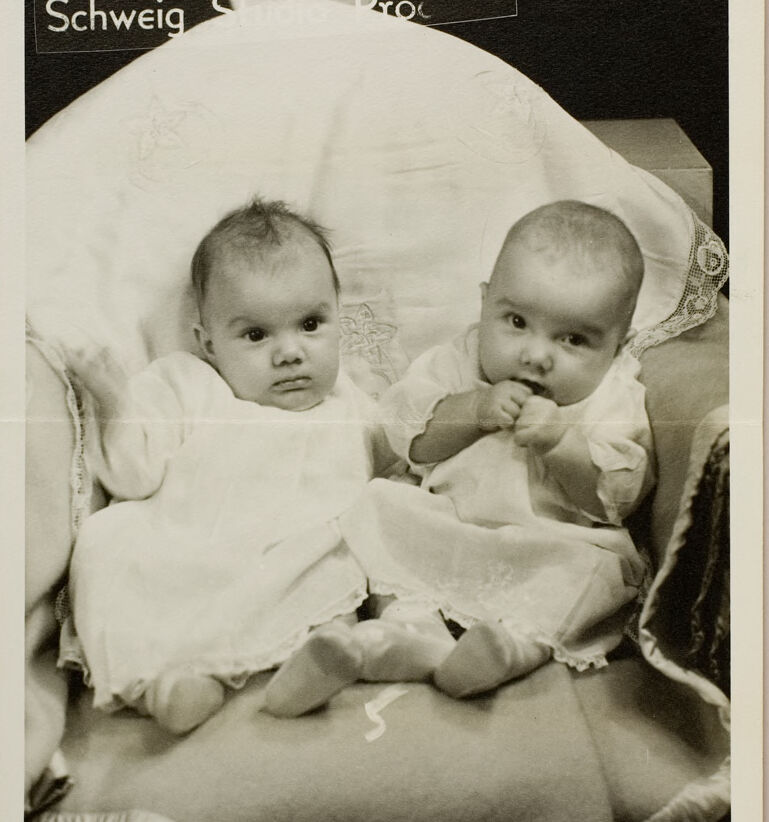

person and her brother, in the same picture, ca.

Salesforce

Created by general-english-image-caption-blip on 2025-05-23

a photograph of a couple of babies sitting on a couch

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-14

The image shows two infants seated side by side on a cushioned surface. They are dressed in delicate, white garments with intricate embroidery or lace details. The background includes a fabric drape with floral patterns, adding a soft and decorative touch to the scene. Text at the top reads "Schweig Studio Pro."

Created by gpt-4o-2024-08-06 on 2025-06-14

The image depicts two infants dressed in white, lacy gowns and sitting closely together on a soft, cushioned surface. The background features a large, intricately embroidered cloth draped behind them, giving a classic, vintage feel to the photograph. There's a partially visible sign at the top of the image with text that appears to be part of a brand or studio name, "Schweig Studio Pho," likely indicating the photography studio. The overall tone of the image is black and white, suggesting it is an older photograph.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-17

The image shows two young babies sitting together on what appears to be a blanket or cushion. The babies are dressed in white clothing and have a serious, thoughtful expression on their faces as they look directly at the camera. The background is slightly blurred, but it seems to be a studio or indoor setting. The image has a vintage, black and white aesthetic, suggesting it may be an older photograph.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-17

This is a vintage black and white photograph showing two infants, likely twins, sitting or propped up together. They are both wearing white christening-style gowns or dresses with lace details. The babies appear to be around the same age, perhaps a few months old. They are positioned on what looks like a cushioned surface or piece of furniture. The photo has a studio-style quality to it, with "Schweig" visible at the top of the image, likely the photographer's or studio's name. The image has that classic, formal portrait quality characteristic of early-to-mid 20th century baby photography.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-25

This image is a vintage photograph of two babies, likely twins, dressed in white outfits and lying on a white blanket or pillow. The baby on the left has dark hair and is looking directly at the camera, while the baby on the right is bald and appears to be sucking on their hand. Both babies are wearing white dresses with lace details and are positioned on a white blanket or pillow with lace trim.

In the top-left corner of the image, there is a watermark that reads "Schweig Studio" in white text. The background of the image is dark, suggesting that it was taken indoors. Overall, the image appears to be a formal portrait of the two babies, possibly taken for a special occasion or to commemorate their birth.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-25

The image is a black-and-white photograph of two babies lying on a couch, with the title "Schweig Schweig Pro" at the top.

- Two babies:
  - Both babies are wearing white clothing.
  - The baby on the left has dark hair and is looking to the right.
  - The baby on the right has light hair and is looking forward.
  - The babies appear to be identical twins.
- A couch:
  - The couch is covered with a white blanket or sheet.
  - There are pillows behind the babies.
- A table:
  - The table is visible in the background behind the couch.
  - It appears to be made of wood.

The image suggests that the babies are being photographed in a domestic setting, possibly in their home. The title "Schweig Schweig Pro" may indicate that the image is part of a series or a project related to silence or quietness.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-05-25

The image features a black-and-white photograph of two babies sitting on a couch. The babies are wearing white clothes with lace details. The baby on the left is looking directly at the camera, while the baby on the right is looking down. The couch is covered with a white cloth, and the image has a watermark with the text "Schweig Studio Pro" in the top left corner.

Created by amazon.nova-pro-v1:0 on 2025-05-25

The image shows two babies sitting on a couch. They are wearing white outfits. One of the babies has a hand in its mouth, while the other baby has one hand raised. The babies are sitting on a white cushion with a lace design. The photo is in black and white. There is a piece of paper with the text "Schweig Studio Pro" pasted on the top left corner of the photo.

Google Gemini

Created by gemini-2.0-flash on 2025-05-16

The photo is a black and white image featuring two babies, presumably twins, seated on what appears to be a cushioned surface or low couch. Both babies are wearing white, frilly dresses with delicate details, and matching white booties.

The baby on the left has slightly more hair, styled with a few strands standing up, and gazes directly at the camera with a solemn expression. The baby on the right has less hair and is captured with their hand near their mouth, as if they were about to suck on their fingers or thumb.

The background is somewhat blurred and appears to include elements of a studio setup. At the top of the frame, slightly obscured, is the text "Schweig Studio Pro," suggesting the photograph was taken by Schweig Studio. The overall composition is classic, indicative of a formal portrait from an earlier era, likely the mid-20th century.

Created by gemini-2.0-flash-lite on 2025-05-16

Here's a description of the image:

The image is a black and white photograph. It depicts two babies, likely twins, lying side-by-side on a soft, cushioned surface. They are dressed in similar white, loose-fitting gowns or onesies. The babies have round faces, with visible chins and a soft, innocent expression. One baby has a slight frown, while the other has their fingers near their mouth, possibly sucking on them.

The backdrop seems to be a slightly rounded cushion or padded surface, likely a prop from a photography studio. There's a hint of lace trim. The image appears to be an older photograph, possibly from the mid-20th century, given the classic black and white style and the studio setting. The top of the photo has some text indicating the studio name: "Schweig Studio Pro...".

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-26

The image is a vintage black-and-white photograph featuring two infants. The babies are lying on a couch or a cushioned surface, and they are both dressed in white garments, possibly christening gowns or nightgowns. The infant on the left is lying on their back with their head slightly raised, looking straight ahead with a neutral expression. The infant on the right is propped up on their elbow, leaning towards the other baby with one hand in their mouth, appearing to be sucking on their fingers.

The background includes a decorative, lace-trimmed canopy or curtain that arches over the babies, adding a soft and delicate touch to the scene. The photograph appears to have been taken in a studio setting, as indicated by the professional backdrop and the text "Schweig Studios Pro" visible at the top of the image. The overall atmosphere of the photograph is tender and nostalgic, capturing a moment of innocence and sibling connection.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-14

The image is a black-and-white photograph of two babies lying side by side on a soft, padded surface, possibly a chair or a similar piece of furniture. Both babies are dressed in white outfits with ruffled edges, suggesting a formal or special occasion. The baby on the left appears to be looking directly at the camera with a neutral expression, while the baby on the right seems to be chewing on something, possibly their fingers or a small object in their hand. The background features a light-colored fabric with some decorative patterns, and the photograph has a vintage quality to it. The top of the image has a label that reads "Schweig Studios," indicating the name of the studio that took the photograph.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-14

This is a black-and-white vintage photograph showcasing two babies seated closely together in a cozy and decorative setting. Both children appear to be roughly the same age and are dressed in matching white, lace-trimmed gowns, giving the impression that they might be twins. The baby on the left has slightly more hair and is sitting with a calm expression, while the baby on the right has less hair, is holding their hands up near their mouth, and appears to be chewing or sucking on something.

The babies are seated on a soft, cushioned surface with a lace-edged blanket or shawl draped around them. The background is dark, which contrasts with the bright clothing and the soft white elements, making the babies the focal point of the image. The photograph appears to have a studio setting, as suggested by the text "Schweig Studio Pro" in the upper left corner, indicating the photographer or studio responsible for the image. The overall tone is nostalgic and wholesome, capturing a tender moment of the babies' early childhood.