Machine-Generated Data

Face Analysis

Amazon

AWS Rekognition

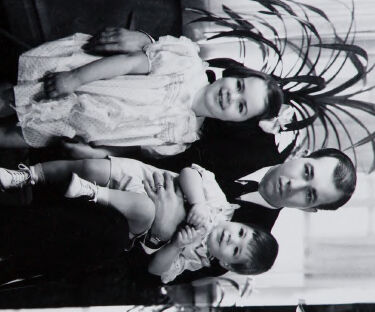

| Attribute | Estimate |
|---|---|
| Age | 31-47 |
| Gender | Male, 99.5% |
| Surprised | 0.1% |
| Fear | 0.1% |
| Disgusted | 0.3% |
| Calm | 95.4% |
| Angry | 0.4% |
| Confused | 1.7% |
| Happy | 0.2% |
| Sad | 1.9% |

Feature Analysis

Amazon

| Label | Confidence |
|---|---|
| Person | 97.9% |

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 99.9% |

Captions

Microsoft

Created by unknown on 2019-11-16

| Caption | Confidence |
|---|---|
| a black and white photo of a person | 66.6% |
| an old photo of a person | 66.5% |
| old photo of a person | 66.4% |

Clarifai

Created by general-english-image-caption-blip on 2025-05-05

| Caption | Confidence |
|---|---|
| a photograph of a black and white photo of a woman in a dress | -100% |

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-05

Here's a description of the image:

The image appears to be a contact sheet of black-and-white photographs. It is divided into two sections.

Left Section:

- Shows a well-dressed woman standing indoors. She is wearing a long, lacy gown and stands with her hands on her hips, looking directly at the camera.

- The background suggests a well-appointed interior, with a decorated wall, a dark piece of furniture (possibly a piano or sideboard), a mirror, and a door.

- The room has a rug on the floor.

Right Section:

- This section shows a series of photos.

- The photos appear to show a man holding two young children, probably members of the same family.

- The father in the images is smartly dressed.

- The children are wearing light-colored outfits.

The contact sheet shows a professional photographic study.

Created by gemini-2.0-flash on 2025-05-05

The image shows two black-and-white photographs placed side by side on a black backdrop.

The photograph on the left side is a full-length portrait of a woman in a dress. She has dark hair and is standing with her hands on her hips. In the background is a wall with textured wallpaper, a door with a sheer curtain, a framed picture, and a table with a vase of flowers and some figurines. The floor has a patterned rug.

The photograph on the right side is a series of images of a man with two young children, likely a family portrait. The man is wearing a suit and tie, and the children are wearing dresses. There is a large potted plant in the background. The image appears to be repeated, and the figures are oriented sideways.