Title

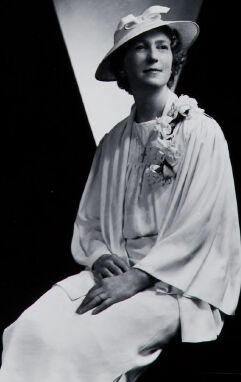

Untitled (two photographs: old man posed reading in den next to bay windows with plants on ledge; double studio portrait of woman wearing dress, hat, corsage, and cross)

Date

1935-1940, printed later

People

Artist: Martin Schweig, American 20th century

Classification

Photographs

Credit Line

Harvard Art Museums/Fogg Museum, Transfer from the Carpenter Center for the Visual Arts, American Professional Photographers Collection, 4.2002.10288