Machine Generated Data

Tags

Color Analysis

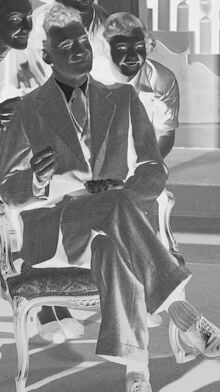

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 35-43 |
| Gender | Male, 97.5% |
| Happy | 58.4% |
| Calm | 36.2% |
| Surprised | 2.9% |
| Confused | 0.7% |
| Sad | 0.7% |
| Disgusted | 0.4% |
| Angry | 0.4% |
| Fear | 0.3% |

Feature analysis

Amazon

| Person | 99% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 78.4% |
| people portraits | 8.5% |
| cars vehicles | 3.1% |
| food drinks | 3.1% |
| beaches seaside | 2.6% |
| streetview architecture | 1.7% |
| interior objects | 1.3% |

Captions

Microsoft

created by unknown on 2022-01-15

| Caption | Confidence |
| --- | --- |
| a group of people standing in front of a building | 82.3% |
| a group of people standing outside of a building | 81.8% |
| a group of people standing on top of a building | 76.6% |

Clarifai

created by general-english-image-caption-blip on 2025-05-17

| a photograph of a group of people sitting on a chair | -100% |

Google Gemini

created by gemini-2.0-flash-lite on 2025-05-18

Here is a description of the image:

The image is a black and white negative of a staged scene. It appears to be a family portrait or a scene from a film or play.

Main elements and their arrangement:

- Centered figure: A man is seated in a chair, dressed in a suit. He appears to be the focus of the photograph.

- Surrounding family: Around the seated man, there are four other figures (presumably family members). They are standing, leaning, and smiling around him.

- Photographer: A man kneeling in front of the seated figure appears to be the photographer; he is holding a camera.

- Setting: The scene is set in what appears to be a living room. There is a staircase, a large window with curtains, a lamp, a picture on the wall, a couch, and other decorative elements.

Overall impression:

The image appears to be a carefully posed scene. The negative format adds a unique artistic look to the photograph. It may be a candid shot of a family portrait session.

created by gemini-2.0-flash on 2025-05-18

Here is a description of the image:

This is a black and white negative of a photograph that captures a family scene indoors. On the left side of the frame, a man sits in a chair, elegantly dressed in a suit, his legs crossed. Surrounding him are three people, possibly family members, leaning in to be part of the shot. To the right, a man is kneeling, holding up what appears to be a camera to take the photograph. The setting includes furniture such as a sofa, chairs, and a staircase in the background. Decorative elements like a painting on the wall and a floral arrangement add to the homey ambiance of the scene. The image has a clear "KODAK" stamp on the right side, indicating the brand of film used. Numerical notations, such as "35166-A," are visible along the bottom edge, suggesting a catalogue or archival code.