Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 39-47 |
| Gender | Female, 86.1% |
| Happy | 87.1% |
| Fear | 8.5% |
| Surprised | 2.3% |
| Angry | 0.6% |
| Sad | 0.5% |
| Calm | 0.4% |
| Disgusted | 0.3% |
| Confused | 0.2% |

Feature analysis

Amazon

AWS Rekognition

| Person | 97.7% |

Categories

Imagga

Created on 2022-01-09

| paintings art | 99.1% |

Captions

Clarifai

Created by general-english-image-caption-clip on 2025-07-14

a vintage photo of a woman riding a horse.

Salesforce

Created by general-english-image-caption-blip on 2025-05-03

a photograph of a man and woman riding on a ride in a ferris wheel

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-12

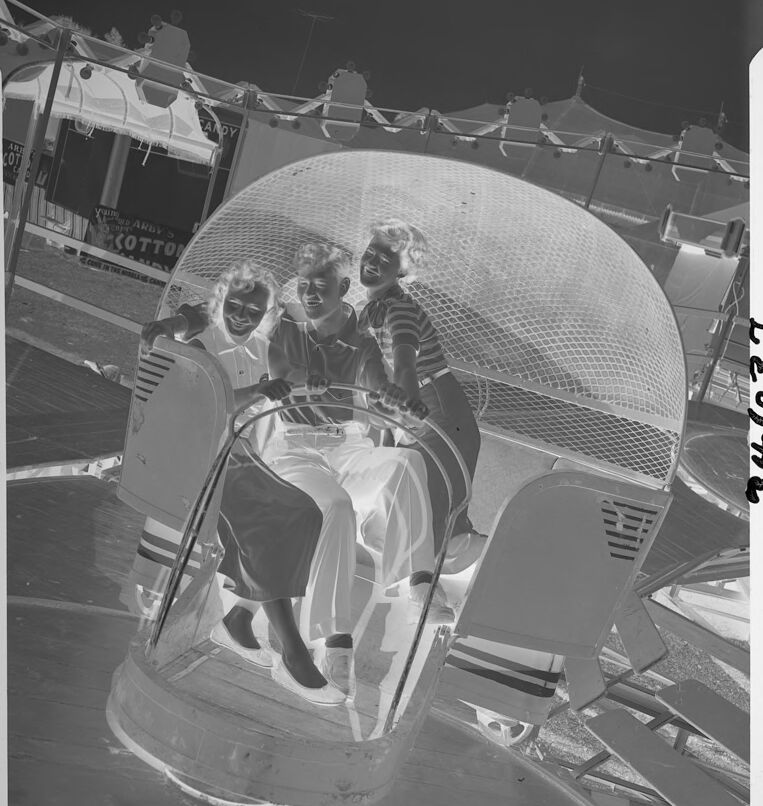

This is a black and white negative image capturing a carnival or amusement park scene. It features an amusement ride, with two individuals seated inside a pod-like enclosure. The ride appears to have a metallic frame and netting as part of its design. The background shows a tent structure with decorative lights strung across. The atmosphere suggests a lively outdoor setting typical of fairs or festivals.

Created by gpt-4o-2024-08-06 on 2025-06-12

The image depicts an inverted negative of a vintage photograph taken at an amusement park or fair. The scene features three people in the seat of a carnival ride, likely a spinning ride based on the design of the car. The ride car has a protective mesh or grid behind the seats, and there are various fairground structures visible in the background, including tents and stalls. String lights can be seen above, suggesting a lively atmosphere typical of a fair. There is a handwritten numerical code in the border of the negative.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-19

The image shows three people sitting inside a transparent dome-shaped structure at what appears to be an amusement park or carnival. The structure is surrounded by other carnival rides and attractions, including a sign that says "Cotton". The people inside the dome seem to be enjoying themselves, with smiles on their faces. The image has a black and white tone, giving it a vintage or historical feel.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-19

This is a black and white photograph that appears to be from the 1950s, showing three people riding in what looks like an amusement park ride or carnival attraction. They are seated in what appears to be an enclosed pod or capsule with a mesh dome cover. The riders are wearing typical 1950s casual attire - skirts and casual tops. The image captures them smiling and enjoying themselves while on the ride. In the background, you can see some carnival tents or structures with decorative bunting or flags. The photograph has a nostalgic quality typical of mid-20th century fairground photography.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-05

The image depicts a black-and-white photograph of three people riding a spinning teacup ride at an amusement park. The three individuals, two women and one man, are seated inside a clear plastic or glass dome, which is attached to a large, spinning base. The ride appears to be in motion, as the people are leaning to the side and holding onto the handles for support.

In the background, there are several other rides and attractions visible, including a Ferris wheel and a cotton candy stand. The overall atmosphere of the image suggests a fun and lively day at the amusement park, with the three riders enjoying their time together on the spinning teacup ride.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-05

The image is a black-and-white photograph of three people riding a carnival ride. The ride appears to be a spinning teacup-style attraction, with the riders seated in a large, dome-shaped vehicle. The vehicle is made of metal and has a mesh roof, allowing the riders to see out while they spin around.

The three riders are all smiling and looking at the camera. They are dressed in casual clothing, with the man in the middle wearing a striped shirt and white pants, and the two women on either side of him wearing dresses. The woman on the left is wearing a dark dress, while the woman on the right is wearing a light-colored dress with horizontal stripes.

In the background, there are several other carnival rides and attractions visible, including a Ferris wheel and a game booth. The sky above is dark, suggesting that the photo was taken at night or in low light conditions.

Overall, the image captures a fun and carefree moment at a carnival or amusement park, with the three riders enjoying themselves on the spinning ride.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-03

The image is a black-and-white photograph of three people riding a spinning ride at an amusement park. The ride is a transparent, spherical vehicle with a net-like structure on the outside. The three people are sitting inside the ride, and two of them are holding onto the handles while the other one is sitting in the middle. The ride is spinning, and the people are smiling and seem to be enjoying themselves. The image has a vintage look, and it appears to be an old photograph.

Created by amazon.nova-pro-v1:0 on 2025-06-03

The black-and-white image features three people sitting in a ride that looks like a giant ball with a mesh net. The ride is on a platform with a metal fence. The people are smiling and seem to be posing for a photo. The woman on the left is wearing a white blouse and pants, while the man in the middle is wearing a striped shirt and pants. Behind them are tents and other rides.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-07

Here's a description of the image:

Overall Impression: The image is a black and white negative of a snapshot, capturing a moment of fun and excitement. It appears to be taken at a fair or amusement park.

Subject: The primary focus is on three young people (likely teenagers or young adults) riding a carnival ride. They are all smiling and appear to be enjoying themselves. The ride seems to be a type of rotating vehicle with a mesh covering or cage around it.

Setting: The background shows elements of a fair, including signs for "Candy Cotton" and the structures of other rides or booths. The setting suggests a sunny day at a carnival or fair.

Composition: The shot is taken from a slightly upward angle, emphasizing the thrill of the ride. The composition effectively captures the joy and excitement of the moment. The negative quality contributes to the nostalgic feel of the image.

Created by gemini-2.0-flash on 2025-05-07

Here is a description of the image:

The image is an inverted black-and-white photograph of three young people riding on a carnival or amusement park ride. The ride appears to be a circular gondola-type ride, possibly one that swings or rotates. The passengers are smiling and appear to be enjoying the ride. The gondola has a wire-mesh cage over the top and is designed with a steering wheel in front.

In the background, there is what appears to be a carnival or fair setting, with tent-like structures and signs, one of which reads "Cotton Candy".

The image is slightly tilted. The number "34637" is stamped in the upper right side of the image.

The inversion of the image means that what would normally be light areas are dark, and vice versa. This affects the perception of details and textures in the photograph.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-02

The image is a black-and-white photograph depicting three children enjoying a ride at what appears to be an amusement park or fair. The ride they are on seems to be a type of spinning or rotating ride, possibly a "rocket" or "spaceship" themed ride, given its design. The children are seated inside a compartment that has a mesh or netted dome covering the top. They appear to be having a good time, as they are smiling and laughing.

The ride vehicle is cylindrical and has a retro-futuristic design, with visible vents and a control panel in front of the children. The background shows other rides and structures typical of an amusement park, including what looks like a carousel or merry-go-round. The overall scene captures a moment of joy and excitement, likely from a bygone era, given the style of the ride and the black-and-white nature of the photograph.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-04

This image is a black-and-white photograph of a vintage amusement park scene. It features two individuals sitting in a ride that resembles a futuristic or space-themed vehicle, enclosed in a large, rounded structure with a mesh-like exterior. The ride appears to be part of a fair or carnival, as indicated by the background which includes other attractions, signage, and booths. The individuals are smiling and appear to be enjoying the ride, with one person driving and the other seated beside them, also smiling. The overall atmosphere suggests a fun and nostalgic setting from a bygone era.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-04

This black-and-white photograph features three people riding a carnival-style amusement ride. The ride consists of a circular, rotating platform with multiple seats, each enclosed by a transparent plastic dome-like structure. The individuals are seated in one of the cabins, holding onto the controls and smiling, appearing to enjoy the ride. The background shows parts of a carnival or fair, including a tent and some light fixtures overhead. The image has a vintage feel due to its monochromatic tone and the style of clothing worn by the riders, suggesting it was taken in the mid-20th century.