Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 22-30 |
| Gender | Male, 97.6% |
| Happy | 62.6% |
| Calm | 29% |
| Sad | 3% |
| Angry | 2.7% |
| Fear | 1% |
| Surprised | 0.7% |
| Disgusted | 0.5% |
| Confused | 0.5% |

Feature analysis

Amazon

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Person | 94.9% |

Categories

Imagga

Created on 2022-01-15

| Category | Confidence |
| --- | --- |
| streetview architecture | 85.5% |
| nature landscape | 6.9% |
| beaches seaside | 3.1% |
| cars vehicles | 2.3% |

Captions

Microsoft

Created by unknown on 2022-01-15

| Caption | Confidence |
| --- | --- |
| a vintage photo of a person | 80.7% |
| a vintage photo of a group of people standing around a plane | 53.8% |
| a vintage photo of a crowd | 53.7% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-11

a view of the pool area.

Salesforce

Created by general-english-image-caption-blip on 2025-05-20

a photograph of a group of people sitting on a bench in a park

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-08

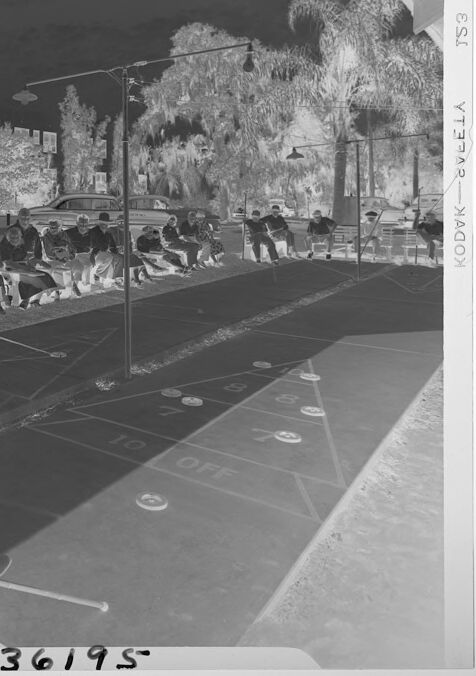

This image shows a reversed black-and-white negative of a social or recreational scene. It appears to depict a shuffleboard court surrounded by a group of people seated and standing. Some individuals are actively participating, holding cues for playing shuffleboard, while others sit on benches observing. The background features palm trees and buildings, suggesting a warm and sunny location, possibly a residential or vacation area. Cars are parked near the buildings, adding to the casual and leisurely vibe of the image. The scene gives a nostalgic feel, potentially taken in the mid-20th century, based on the clothing styles and atmosphere.

Created by gpt-4o-2024-08-06 on 2025-06-08

The image appears to be a black-and-white photographic negative featuring a group of people engaged in an outdoor shuffleboard game. The scene includes several elderly individuals who are either seated on benches or standing around the shuffleboard court. They are likely residents or visitors enjoying a leisure activity. The presence of trees, including palm trees, and mid-20th-century automobiles suggests a suburban or resort setting, possibly in a warm climate. Buildings in the background look residential, adding to the tranquil atmosphere. The image has a vintage feel, linked to the kodak film used to capture it. It's likely taken from the mid-20th century, given the style of clothing and cars.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-13

The image appears to depict an outdoor nighttime scene in a tropical or subtropical location. There are palm trees and other lush vegetation visible in the background, suggesting a warm climate. In the foreground, there is a large group of people seated at tables, seemingly engaged in some kind of social or recreational activity. The scene has a festive, lively atmosphere, with people gathered together and interacting. The overall impression is of a vibrant, social gathering taking place under the night sky.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-13

This is a black and white photograph that appears to be from an earlier era, showing people playing shuffleboard at what looks like a retirement community or recreational facility. The scene includes several people seated along benches watching the game, while others are actively playing on the shuffleboard court. The court is marked with typical shuffleboard scoring lines and numbers. In the background, you can see apartment buildings or residential units and palm trees, suggesting this might be in a warmer climate location, possibly Florida or another southern state. The image has that distinctive mid-20th century quality to it, with the women wearing dresses typical of that period.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-26

The image depicts a black-and-white photograph of a group of people gathered around a shuffleboard court. The scene is set in a park or outdoor recreational area, with trees and buildings visible in the background.

In the foreground, several individuals are engaged in playing shuffleboard, while others sit on benches or stand nearby, watching the game. The atmosphere appears to be relaxed and social, with people enjoying each other's company and the outdoor setting.

The image also features a timestamp in the bottom-left corner, which reads "36195." This suggests that the photograph was taken on a specific date, although the exact date is not provided.

Overall, the image captures a moment of leisure and community, highlighting the importance of social activities and outdoor recreation in people's lives.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-26

The image is a black-and-white photograph of a group of people playing shuffleboard on a sunny day. The game is set up on a long, rectangular court with lines and numbers painted on it. The players are standing or sitting around the court, some holding cues or mallets, while others watch the game unfold.

In the background, there are trees and buildings visible, suggesting that the game is taking place in a park or other outdoor recreational area. The overall atmosphere of the image is one of leisure and enjoyment, as the players seem to be having a good time and enjoying each other's company.

The image appears to be a vintage photograph, possibly from the mid-20th century, given the style of clothing and the equipment used in the game. It is likely that the image was taken by a professional photographer or someone who was interested in capturing the moment and preserving it for posterity.

Overall, the image provides a glimpse into a bygone era and offers a sense of nostalgia and warmth. It is a reminder of the importance of recreation and socializing, and the joy that can be found in simple activities like playing shuffleboard with friends.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-05-26

A black-and-white photo shows a group of people playing a game on the street. The game has a board with numbers and words written on it. The players are holding sticks and are standing or sitting on benches. Some of them are wearing hats. On the left side, there is a woman sitting on a bench. Behind them, there are trees, street lights, and buildings.

Created by amazon.nova-lite-v1:0 on 2025-05-26

The image is a black-and-white photograph of a group of people gathered around a game of shuffleboard. The shuffleboard court is visible in the foreground, with players standing on either side of the court, holding paddles and sliding discs down the court. The image has a vintage feel, with a watermark in the bottom right corner that reads "36195" and "EVELIA KODAK." In the background, there are trees, buildings, and cars parked in front of a building.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-04-29

Here's a description of the image:

Overall Impression: The image captures a vintage scene, likely from the mid-20th century, of a group of people playing shuffleboard. The photograph is in negative format, which reverses the colors.

Setting: The game is being played outdoors on what appears to be a sunny day. There are buildings in the background suggesting a residential or community area. Palm trees and other foliage are visible, giving a sense of a warm climate.

People and Activities:

- A group of people are either actively playing or watching the game.

- Some are seated on benches lining the shuffleboard court.

- One person appears to be readying their disc to be played.

- Others are watching the game attentively.

Shuffleboard Court:

- The shuffleboard court is clearly marked with lines and numbered scoring areas.

- Discs are positioned on the court.

Other details:

- The image is a photograph of a printed document, which is an early version of a film roll.

Overall, the photo captures a leisure activity.

Created by gemini-2.0-flash on 2025-04-29

Here's a description of the image, noting that it appears to be a photo negative:

The image is a black and white photo negative depicting a group of people engaged in a shuffleboard game. The scene takes place outdoors, with a shuffleboard court prominently featured in the foreground. The court is marked with numbers and "OFF" zones, and several pucks are scattered across its surface.

Along the sides of the shuffleboard court, rows of people are seated on benches. Most appear to be spectators, watching the game unfold. Several people stand around the court, actively participating in the game with shuffleboard cues.

In the background, there's a building with multiple stories and numerous windows, suggesting it could be an apartment complex or a hotel. Tall palm trees are interspersed throughout the scene, adding to the outdoor ambiance. Cars are parked along the side of the building.

Overhead lighting fixtures are positioned above the shuffleboard court, indicating the possibility of nighttime play.

Overall, the image presents a community scene, with people gathering together to enjoy a game of shuffleboard in a leisurely setting. The presence of palm trees and a sizable building suggests the location could be in a warm climate, possibly a resort community or retirement village. The word "KODAK" and "SAFETY" can be seen on the right side of the photo. A number "36195" is imprinted on the lower part of the image.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-27

The image depicts a group of people engaged in a game of shuffleboard, an outdoor activity typically enjoyed by older adults. The scene appears to be set in a retirement community or a recreational area, given the relaxed and social atmosphere.

Key details include:

- Participants: There are several individuals, mostly elderly, actively participating in the game. They are using long cues to push weighted discs down the shuffleboard court.

- Setting: The shuffleboard courts are outdoors, surrounded by trees and buildings that look like residential apartments or condominiums. The presence of parked cars and benches suggests a communal area.

- Activity: The game involves players sliding discs down a narrow court, aiming for specific scoring zones marked on the surface.

- Attire: The participants are dressed in casual, comfortable clothing appropriate for a leisurely outdoor activity.

- Atmosphere: The overall mood of the image is relaxed and social, with people enjoying a communal activity in a pleasant outdoor setting.

The image is in black and white, which might suggest it was taken some time ago, capturing a moment of leisure and community engagement.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-07

The image is a black and white photograph depicting a lively scene of people enjoying a game of shuffleboard outdoors. The setting appears to be a community park or recreational area, with park benches and trees in the background.

In the foreground, a large shuffleboard table is prominently featured. The table has numbers and sections marked on it, indicating the scoring areas of the game. Several participants are engaged in the game, some standing and others seated on benches. The individuals are dressed in casual attire, suggesting a relaxed and social environment.

The background shows a row of buildings, possibly apartments or community structures, and more trees. There are also a few parked cars, adding to the suburban or residential feel of the setting. The image has a vintage quality, with a visible date code "36195" in the bottom right corner, indicating it might be from the 1930s or 1940s. The overall atmosphere is one of community and leisure.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-07

This is a black-and-white photograph showing a nighttime scene of people playing shuffleboard in an outdoor setting. The players, mostly elderly individuals, are seated in chairs along two parallel shuffleboard courts. They are using long sticks to push pucks across the courts, which are marked with numbers and scoring zones. The area is illuminated by overhead streetlights, casting shadows of the players and their equipment. In the background, there are palm trees and a multi-story building, suggesting a warm climate and possibly a community or recreational area. The photograph has a vintage feel, possibly from the mid-20th century, and the number "36195" is printed in the bottom right corner.