Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 37-45 |
| Gender | Female, 99.5% |
| Happy | 61.9% |
| Sad | 21.8% |
| Calm | 10.1% |
| Surprised | 2.5% |
| Confused | 1.6% |
| Disgusted | 0.9% |
| Angry | 0.7% |
| Fear | 0.6% |

Feature analysis

Amazon

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Person | 99.4% |

Categories

Imagga

Created on 2022-01-15

| Category | Confidence |
| --- | --- |
| people portraits | 55.7% |
| streetview architecture | 23.8% |
| events parties | 16.4% |
| text visuals | 1.8% |

Captions

Microsoft

Created by unknown on 2022-01-15

| Caption | Confidence |
| --- | --- |
| a group of people posing for a photo | 82.7% |
| a group of people posing for the camera | 82.6% |
| a person standing in front of a group of people posing for a photo | 68.8% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-13

photograph of a group of young people dancing in front of a christmas tree.

Salesforce

Created by general-english-image-caption-blip on 2025-05-23

a photograph of a group of women in dresses and hats are standing in a room

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-14

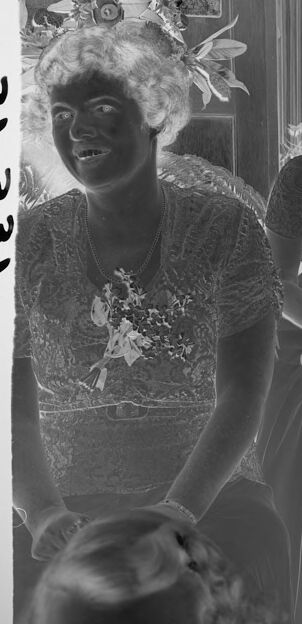

The image features four individuals sitting closely together, dressed in formal attire. Details include elegant dresses, floral decorations, and corsages worn by some of them. The setting includes a background with bookshelves, ornate floral arrangements, and furniture, suggesting an indoor space with a refined or celebratory atmosphere. A large horn-shaped object, likely a gramophone or decor piece, is visible near the center of the composition. Additional people and objects are partially visible in the foreground and background. The photo is presented in a negative format.

Created by gpt-4o-2024-08-06 on 2025-06-14

The image appears to show a group of four individuals sitting together in a room. They are dressed in formal attire, with three of them wearing dresses adorned with corsages or floral decorations. The individuals are seated closely, some with their hands resting in their laps or lightly clasped together. The setting suggests an indoor space, perhaps a study or library, as indicated by the presence of books on shelves visible on the right of the image. Additionally, there is a large floral arrangement seen in the background, which contributes to the formal and decorated atmosphere of the scene. The image is in black and white, which may lend a vintage or old-fashioned feel to the scene.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-20

The image appears to depict a group of four women dressed in formal attire, likely from a bygone era. They are standing in what seems to be a room with a bookshelf and a window in the background. The women are wearing dresses with floral patterns and have their hair styled in a manner characteristic of the time period. They appear to be engaged in some kind of social gathering or event, perhaps a party or a celebration, based on their attire and the setting.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-20

This appears to be a black and white negative photograph, which makes the subjects appear in reverse tones (dark appears light and vice versa). The image shows what appears to be a group of people in formal attire, possibly at a social gathering or celebration. They are wearing what look like dresses and are positioned in an interior setting with bookshelves visible in the background. The photograph appears to be from an earlier era, possibly early-to-mid 20th century, based on the clothing styles and photographic technique. Some of the subjects are wearing corsages or floral decorations, suggesting this might be from a wedding or formal event. The lighting creates a striking contrast effect due to the negative format of the image.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-10

The image is a black-and-white photograph of four women in formal attire, likely taken in the 1930s. The women are dressed in elegant dresses with floral patterns and accessories, such as necklaces and wristlets. They are positioned in a room with a bookshelf, a door, and a chandelier, suggesting a formal setting.

The women are engaged in conversation, with one of them leaning forward, possibly to listen to another. The atmosphere appears to be one of social gathering or celebration, with the women's smiles and relaxed postures indicating a sense of camaraderie and enjoyment.

In the background, the bookshelf and door provide a sense of depth and context, while the chandelier adds a touch of luxury and sophistication. The overall mood of the image is one of refinement and elegance, capturing a moment of social interaction and connection among the four women.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-10

The image is a black-and-white photograph of four women in formal attire, with a man partially visible on the right side. The women are dressed in long dresses, and the two on the left wear floral corsages. They appear to be seated in a room with bookshelves and a large vase or sculpture behind them.

The atmosphere suggests a formal event or gathering, possibly a wedding or anniversary celebration. The women's attire and the presence of corsages support this interpretation. The image may have been taken in the mid-20th century, given the style of the dresses and hairstyles.

Overall, the image captures a moment of elegance and sophistication, with the women's formal attire and the refined setting creating a sense of refinement and culture.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-08

The image is a black-and-white photograph featuring a group of women gathered in a room. The women are dressed in elegant, vintage clothing, suggesting that this might be a formal event or a staged photograph. The women are standing in a circle, with one woman at the center holding a bouquet of flowers. The women are smiling and appear to be engaged in conversation. The room has a wooden door, a bookshelf filled with books, and a lamp. The image has a watermark with the text "21 331" and "N.W.E." in the upper left corner.

Created by amazon.nova-pro-v1:0 on 2025-06-08

The image is a black-and-white photograph with a watermark on the left side. It shows a group of women standing together, possibly in a room. They are all wearing dresses and appear to be posing for a photograph. One of them is seated, and another is standing beside her. Behind them, there is a bookshelf with books arranged on it. The image has a vintage look and seems to be from an old photograph.

Google Gemini

Created by gemini-2.0-flash on 2025-05-18

Here is a description of the image:

The image appears to be a black-and-white photograph of four women and a partial view of a man at what looks like an event. The photograph has an inverted color scheme, meaning the dark areas are light and vice versa.

The women are dressed elegantly, with what appear to be dresses or gowns, and some are wearing jewelry like necklaces. The woman on the left is seated and wearing what looks like a detailed dress. The woman next to her is holding a small object (possibly a roll of paper) and also appears to be wearing a dress with some kind of embellishment on the chest. The third woman has her hands folded in her lap, and the fourth woman is seated and wearing a long, flowing gown.

In the background, there's a bookshelf filled with books, and an object that resembles a horn or part of a musical instrument. Behind the first two women, there's what seems to be a curtain or decorative backdrop and some floral arrangements.

The bottom of the image shows the backs of what seem to be two children's heads. To the right, there is a man's partial face, indicating he is seated and observing the group.

Overall, the setting is formal and suggests an occasion or gathering, possibly a celebration or special event. The inverted colors give the photograph an unusual and somewhat surreal quality.

Created by gemini-2.0-flash-lite on 2025-05-18

Here's a description of the image:

Overall Impression: The image appears to be a vintage photograph, likely taken indoors. It features a group of women, possibly a family or friends, gathered in a room.

Key Features:

- The women: There are at least four women prominently displayed, all dressed in what appears to be formal attire typical of an earlier era. They seem to be the main focus of the picture. They appear to be smiling or looking intently at something just outside of the frame.

- Setting: The background includes elements like a built-in bookshelf filled with books, suggesting a library or a home with a dedicated study space. There are also elements such as a large musical instrument and a doorframe.

- Lighting and Tone: The photograph is a negative image, meaning the colors are inverted. This gives the image a unique look.

- Composition: The composition is centered around the group of women.

- Objects: There's a floral arrangement in the background.

Possible interpretations:

This picture captures a moment in time of family or friends gathering together for a special event, possibly a celebration or formal occasion.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-03

The image is a black-and-white photograph featuring four women dressed in elegant attire, likely from a mid-20th century setting. The women are posing together, possibly for a formal event or a special occasion.

- The woman on the far left is wearing a dress with a detailed pattern and has a floral accessory in her hair. She is smiling and appears to be holding a small object in her hand.

- The second woman from the left is wearing a dark dress with a floral corsage pinned to her chest. She is smiling and has her hands clasped in front of her.

- The third woman is also wearing a dark dress with a floral corsage and has a similar hairstyle to the others. She is smiling and appears to be holding something in her hand.

- The woman on the far right is wearing a lighter-colored dress and has a floral accessory in her hair. She is smiling and has her hands resting on her lap.

The background includes a chandelier, curtains, and a bookshelf, suggesting an indoor setting, possibly a home or a formal venue. The overall atmosphere of the image is cheerful and celebratory.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-24

The image is a black-and-white photograph featuring four women sitting together in a formal setting, possibly during an event or gathering. The women are dressed in elegant attire, with one wearing a dark dress adorned with lace and another in a lighter, sheer top. All of them are holding or have flowers, suggesting a ceremonial or celebratory occasion. The background includes a room with a bookshelf filled with books, a chandelier, and decorative elements, adding to the formal atmosphere. The overall mood appears to be cheerful, with the women engaged in conversation and sharing a moment of joy.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-24

This black-and-white photograph captures a group of four women engaged in conversation, seated in an indoor setting that appears to be a formal or celebratory event. The women are dressed elegantly, with two of them holding bouquets, suggesting that they might be part of a wedding party. The woman on the far left is wearing an elaborate hat adorned with feathers and flowers. The background features a bookshelf filled with books, a chandelier, and a curtain, adding to the formal atmosphere of the scene. The image has a vintage quality, with some numbers and markings visible on the borders, indicating it might be part of a collection or archive.