Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 35-43 |
| Gender | Female, 93.6% |
| Calm | 37.4% |
| Surprised | 32.1% |
| Happy | 28.4% |
| Fear | 0.8% |
| Disgusted | 0.4% |
| Angry | 0.3% |
| Sad | 0.3% |
| Confused | 0.2% |

Feature analysis

Amazon

AWS Rekognition

| Person | 91.3% |

Categories

Imagga

Created on 2022-01-15

| Category | Confidence |
|---|---|
| streetview architecture | 77.1% |
| paintings art | 22.1% |

Captions

Microsoft

Created by unknown on 2022-01-15

| text | 23.1% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-15

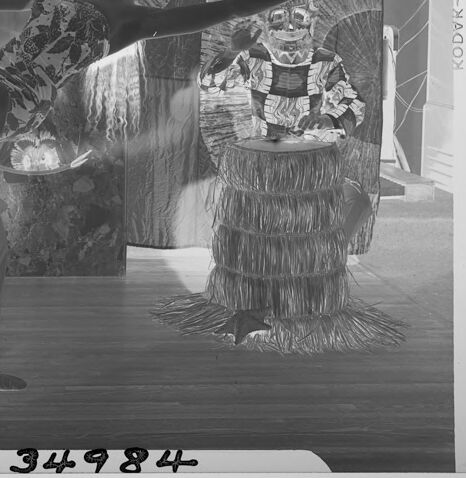

dancers perform during the opening ceremony.

Salesforce

Created by general-english-image-caption-blip on 2025-05-15

a photograph of a man in a costume is performing a dance

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-13

This is a black-and-white negative image depicting a vibrant scene with performers dressed in elaborate costumes and engaging in an energetic dance. At the center, a person in a patterned outfit lifts a dancer, who is wearing a form-fitting outfit and gracefully extending her arms and legs. Surrounding them are three individuals in intricate masks and costumes. One is positioned to the left, playing a drum-like instrument, while another stands to the right with a grass-skirt outfit, also near a drum. The background features textured, circular designs and a prominent statue or sculpture with a humanoid face. The overall image conveys a festive or ceremonial atmosphere.

Created by gpt-4o-2024-08-06 on 2025-06-13

The image is a black and white photo negative featuring a performance scene with people in elaborate costumes. At the center, a male dancer is lifting a female dancer. The man is wearing a mask with ornate detailing and a costume that covers his body, including his legs and arms. The woman, held in the air horizontally, is wearing a fitted costume, possibly a leotard, and her hair is styled back.

In the background, there are two other individuals, also in costume. On the left, a person is dressed in a long, patterned robe and a tall mask resembling an animal with long ears. They appear to be playing an upright drum or possibly posing with it. On the right, another person is sitting behind a drum covered with grass skirts or similar materials and is wearing a mask as well. The backdrop includes large decorative elements, such as what appears to be a large, sculpted face or figure, possibly symbolizing a deity or spirit, adding a cultural or ritualistic context to the scene. The overall composition suggests a vibrant and theatrical atmosphere.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-20

The image appears to be a black and white photograph depicting a theatrical or ceremonial performance. It shows a person wearing an elaborate, ornate costume and mask, performing a dynamic, expressive dance or movement. The background is filled with various sculptural and decorative elements, including masks, figures, and other abstract shapes and patterns. The overall scene has a surreal, dreamlike quality, suggesting a ritual or cultural performance of some kind.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-20

This appears to be a historical black and white photograph showing what looks like a theatrical or stage performance. The scene includes elaborate costumes and what appears to be decorative set pieces with fan-like or sunburst patterns in the background. There are figures in period dress, with one wearing what looks like a patterned or spotted dress. The setting appears to be on a wooden floor stage, and there are vertical columns or pillars visible in the composition. The image has a number "34984" at the bottom, suggesting it may be from an archive or catalog. The overall aesthetic suggests this might be from an early 20th century theatrical production or performance.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-10

The image is a black-and-white photograph of a man and woman performing on stage, surrounded by various props and costumes.

The man is wearing a mask with horns and a long-sleeved shirt with a pattern of white lines. He has his right leg raised in a kick, with his left arm extended to the side and his right arm bent at the elbow. The woman is wearing a dress with a floral pattern and has her arms outstretched to the sides. She is standing behind the man, with her head tilted back and her eyes closed.

There are several props and costumes on stage, including a drum, a mask with a long beard, and a statue of a person wearing a headdress. The background of the image appears to be a stage or performance area, with a curtain or backdrop behind the performers.

The overall atmosphere of the image suggests that it was taken during a performance or rehearsal, possibly for a play or dance routine. The use of masks and costumes adds a sense of drama and spectacle to the scene, while the poses and expressions of the performers convey a sense of energy and movement.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-10

The image depicts a black-and-white photograph of a man and woman in a dance pose, surrounded by various objects. The man is dressed in a costume featuring a mask, while the woman wears a floral dress and a headpiece. They are positioned in front of a backdrop adorned with a large, circular design and a carved face.

In the foreground, several objects are visible, including a drum, a statue, and a decorative piece resembling a tree trunk. The overall atmosphere of the image suggests that it may be related to a performance or cultural event, possibly from the 1940s or 1950s based on the style of the costumes and the black-and-white photography.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-08

The image features a black-and-white photograph of a performance or theatrical scene. In the center, two performers are captured mid-action, one standing on the other's shoulders. The performer on the shoulders wears a mask and holds a spear, while the performer on the ground is dressed in a flowing costume. Behind them, a large backdrop displays intricate designs and a statue, possibly depicting a cultural or mythological figure. On either side of the performers, there are additional props and figures, including a drum and what appears to be a costumed character. The image captures a moment of dynamic movement and theatrical flair, suggesting a performance in a cultural or artistic context.

Created by amazon.nova-pro-v1:0 on 2025-06-08

The image is a black-and-white photograph of a performance or dance scene. It features two individuals, one male and one female, engaging in a dynamic pose. The male performer is wearing a mask and traditional costume, while the female performer is dressed in a flowing outfit with intricate patterns. They are positioned in a way that suggests movement and energy, with the female performer's leg raised and the male performer's arm extended.

Google Gemini

Created by gemini-2.0-flash on 2025-05-09

Here's a description of the image:

Overall Impression:

The image is a black and white photographic negative, which gives it an inverted tonal range. It seems to depict a theatrical or performance art scene. The overall mood is somewhat surreal and theatrical.

Main Subjects:

- Performers: There are two clearly defined performers. One wears a horned mask; the other wears a dress and has flowers in their hair. Both performers are in mid-motion, creating a dynamic pose.

- Props and Costumes: The performers and backdrops feature elaborate costumes, masks, and props. These elements suggest a cultural or fantastical theme.

- Stage/Setting: The scene appears to be set on a stage or in a studio with backdrops. These backdrops contain masks and patterns.

Composition:

- The performers are centrally positioned and are the primary focal point.

- The backdrop and props are arranged to create a visually layered scene.

- The inverted tones of the negative create a stark contrast, emphasizing the shapes and textures of the costumes and backdrops.

Inverted Tones and Details:

Because it's a negative, light areas appear dark, and dark areas appear light. This affects how we perceive the materials and textures. For example, what appears to be dark fabric in the negative would likely be a light or bright fabric in reality.

Overall Interpretation:

This image looks like a theatrical performance, capturing a moment of action and artifice. The masks, costumes, and props suggest a story being told through movement and visual presentation.

Created by gemini-2.0-flash-lite on 2025-05-09

Certainly! Here's a description of the image:

Overall Impression:

The image is a negative of a staged photograph, likely from a performance or theatrical production. It features people in costumes and a backdrop that suggests a cultural or artistic theme.

Key Elements:

Central Figures:

- A person wearing a costume with a mask-like face and an elaborate headdress. They seem to be in motion, likely dancing.

- A woman in a patterned strapless outfit and a floral headpiece is either being held up by the person in the mask or perhaps leaning. Her pose also suggests movement.

Background & Props:

- There's a backdrop with a repeating circular design, which provides a layered aesthetic.

- Other figures in costumes, including one with an animal-like mask and another standing near a wrapped drum-like object.

- A decorative, straw-like cylindrical base in front of one of the figures.

- A striped vase.

Style & Tone:

- The image has a formal or theatrical look.

- The negative effect gives it a unique aesthetic.

- The composition creates a sense of performance and visual storytelling.

Let me know if you'd like a more detailed analysis!

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-05

The image depicts a dynamic and artistic scene, likely from a performance or a theatrical event. The central figure is a person in mid-air, performing an acrobatic or dance move, with their body parallel to the ground and arms outstretched. This individual is dressed in a dark outfit with white accents, which contrasts sharply with the background.

In the background, there are three other figures, each wearing elaborate costumes. The figure on the left is wearing a costume with a leopard print pattern and a headdress with antlers. The figure in the center is dressed in a costume with a striped pattern and a headdress that appears to have feathers or spikes. The figure on the right is wearing a costume with a checkered pattern and a skirt made of grass or similar material.

The backdrop features a large, stylized face with a halo or sunburst design around it, adding to the dramatic and cultural atmosphere of the scene. There is also a tall drum or similar percussion instrument in front of the central figure, suggesting a musical element to the performance.

Overall, the image captures a moment of intense movement and cultural expression, with a strong emphasis on costumes and artistic presentation.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-04

The image is a black and white photograph depicting a performance or artistic scene. It appears to be a staged setting, possibly for a cultural or theatrical display. In the foreground, there is a performer in a traditional or theatrical costume, executing a dynamic pose with one leg extended and arms outstretched. The performer is wearing a headdress with intricate details and a patterned outfit.

In the background, there are various props and performers, including one dressed in a costume resembling a naturalistic animal skin with horns, standing next to a conical structure. Another performer is positioned behind a circular backdrop with a face-like design, wearing a flowing garment. There are also other figures and decorative elements that contribute to the overall visual storytelling of the scene. The backdrop and props suggest a thematic or cultural representation, possibly inspired by indigenous or folkloric traditions. The photograph is dated with the number "34984" in the bottom right corner.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-04

This is a black-and-white image depicting a theatrical or cultural performance. At the center, a man is dancing with his arms outstretched, wearing a patterned outfit and a mask with horns. To his left, a person is playing a conga drum, dressed in a patterned dress with a mask that has horns and a circular design. To the right, another person is playing a drum decorated with fringe, wearing a mask that resembles a skull. In the background, there is a large, patterned backdrop featuring a central figure with a headdress, surrounded by radiating lines. The setting appears to be indoors, possibly a studio or a stage. The image has a vintage feel, suggesting it might be from the mid-20th century. The number "34984" is printed at the bottom right corner.