Machine Generated Data

Tags

Amazon

created on 2022-01-15

| Tag | Confidence (%) |
| --- | --- |
| Clothing | 99.2 |
| Apparel | 99.2 |
| Person | 99 |
| Human | 99 |
| Home Decor | 91.5 |
| Dress | 86.7 |
| Female | 82.9 |
| Indoors | 82.4 |
| Room | 76.6 |
| Robe | 75.9 |
| Fashion | 75.9 |
| Gown | 75.8 |
| Art | 73 |
| Face | 67.1 |
| Woman | 67 |
| Architecture | 65.4 |
| Building | 65.4 |
| Wedding | 64.6 |
| Portrait | 64.5 |
| Photography | 64.5 |
| Photo | 64.5 |
| People | 60.5 |
| Door | 59.5 |
| Street | 58.5 |
| Urban | 58.5 |
| Road | 58.5 |
| City | 58.5 |
| Town | 58.5 |
| Wedding Gown | 58.3 |
| Bridegroom | 58.2 |
| Alley | 57.6 |
| Alleyway | 57.6 |
| Girl | 55.5 |

Clarifai

created on 2023-10-26

Imagga

created on 2022-01-15

| Tag | Confidence (%) |
| --- | --- |
| architecture | 46.6 |
| building | 34.6 |
| old | 25.8 |
| city | 25.8 |
| religion | 22.4 |
| history | 22.4 |
| famous | 22.3 |
| tourism | 22.3 |
| ancient | 21.6 |
| sculpture | 21.2 |
| art | 20.9 |
| negative | 20.4 |
| historic | 20.2 |
| film | 19.4 |
| structure | 19 |
| monument | 18.7 |
| travel | 18.3 |
| landmark | 18 |
| arch | 18 |
| window | 17.6 |
| stone | 17.5 |
| marble | 16.2 |
| tourist | 15.9 |
| column | 15.9 |
| church | 15.7 |
| culture | 15.4 |
| historical | 15 |
| statue | 14.5 |
| house | 14.1 |
| exterior | 13.8 |
| door | 13.7 |
| house of cards | 13.1 |
| town | 13 |
| photographic paper | 12.8 |
| palace | 12.3 |
| street | 12 |
| facade | 11 |
| urban | 10.5 |
| buildings | 10.4 |
| destination | 10.3 |
| national | 10 |
| columns | 9.8 |
| antique | 9.8 |
| entrance | 9.7 |
| architectural | 9.6 |
| memorial | 9.5 |
| god | 8.6 |
| attraction | 8.6 |
| room | 8.5 |
| photographic equipment | 8.5 |
| temple | 8.2 |
| cathedral | 8 |
| home | 8 |
| baroque | 7.8 |
| people | 7.8 |
| holy | 7.7 |
| wall | 7.7 |
| cityscape | 7.6 |
| religious | 7.5 |
| vintage | 7.4 |
| decoration | 7.3 |
| tower | 7.2 |

Google

created on 2022-01-15

| Tag | Confidence (%) |
| --- | --- |
| Picture frame | 87.9 |
| Art | 84.9 |
| Black-and-white | 82.4 |
| Line | 81.6 |
| Building | 81.4 |
| Font | 77 |
| Monochrome | 70.7 |
| Holy places | 70.5 |
| Monochrome photography | 70 |
| Room | 69.9 |
| Arch | 68.9 |
| Visual arts | 68.4 |
| Symmetry | 67.1 |
| History | 64.8 |
| Stock photography | 63.4 |
| Molding | 60.9 |
| Rectangle | 58.9 |
| Collection | 57.7 |
| Facade | 56.1 |
| Religious institute | 51.1 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

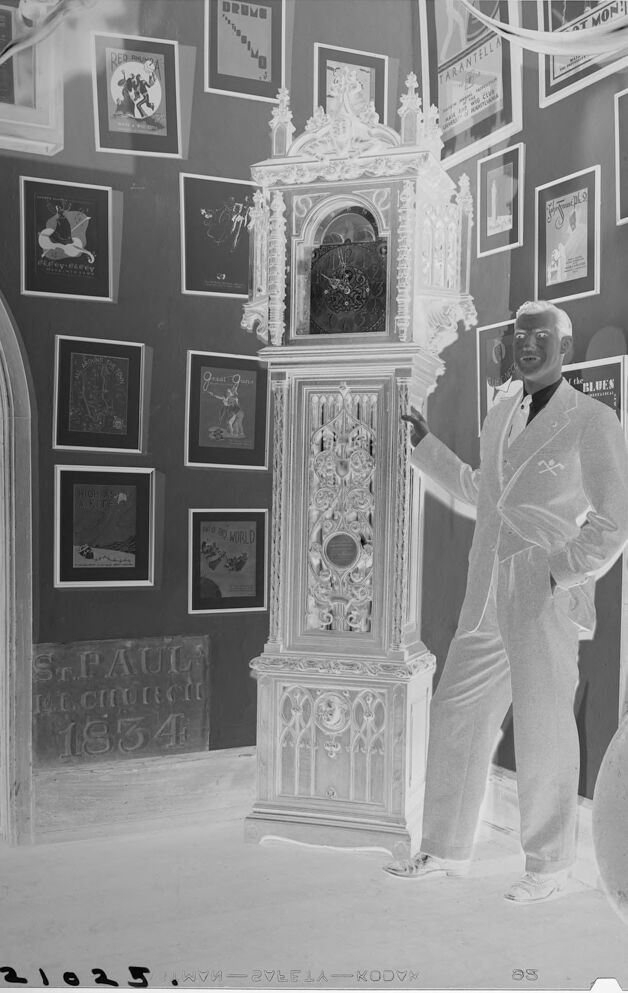

| Attribute | Value |
| --- | --- |
| Age | 30-40 |
| Gender | Male, 99.9% |
| Surprised | 60.1% |
| Confused | 25.3% |
| Happy | 6% |
| Sad | 3.1% |
| Disgusted | 2.2% |
| Calm | 2% |
| Angry | 1% |
| Fear | 0.2% |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Amazon

Person

| Feature | Confidence |
| --- | --- |
| Person | 99% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| streetview architecture | 94% |
| interior objects | 5.1% |

Captions

Microsoft

created on 2022-01-15

| Caption | Confidence |
| --- | --- |
| a person standing in front of a building | 66.8% |
| a person standing in front of a building | 66.4% |
| a man and a woman standing in front of a building | 42.3% |

Text analysis

Amazon

WORLD

1834

MON

as

21025

TARANTELLA

BLUES

the

RED

HIGH

-

AT MON

DRUMB

AT

Litras RED

NO THE WORLD

Sr.PAUL

real

MADE

THE

KIA

CLUB

MAY

DL'S

Quality

NO

LICENSE

MAY LX

LX

Litras

DRUMS

RED

T MON

TARANTELLA

941

Otat O

GLUES

Sr.PAUL

1854

21025 LELA-KODV

DRUMS

RED

T

MON

TARANTELLA

941

Otat

O

GLUES

Sr.PAUL

1854

21025

LELA-KODV