Machine Generated Data

Tags

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Age | 36-44 |
| Gender | Female, 51% |
| Happy | 89.8% |
| Calm | 3.9% |
| Sad | 3.4% |
| Surprised | 1.8% |
| Confused | 0.5% |
| Angry | 0.3% |
| Disgusted | 0.2% |
| Fear | 0.1% |

Feature analysis

Amazon

Clarifai

AWS Rekognition

| Person | 92.2% |

Categories

Imagga

Created on 2022-01-15

| paintings art | 61.8% |
| streetview architecture | 37.7% |

Captions

Microsoft

Created by unknown on 2022-01-15

| text | 23% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-12

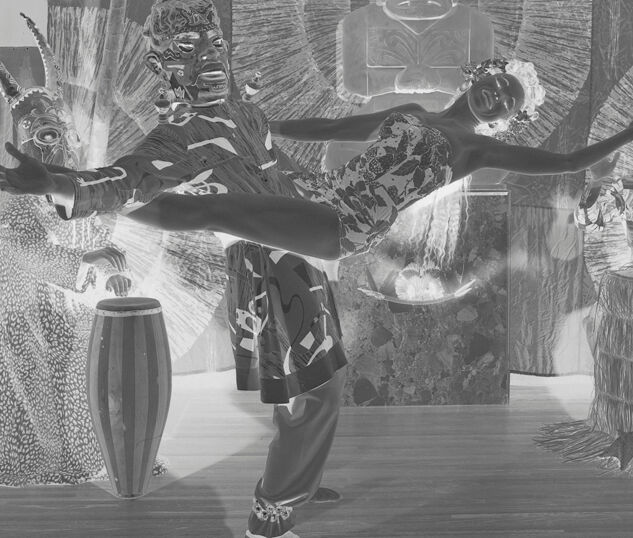

a black and white version of a painting.

Salesforce

Created by general-english-image-caption-blip on 2025-05-18

a photograph of a man in a suit and a woman in a dress

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-18

This image appears to be a photographic negative of a cultural or performance scene. It depicts several individuals in vibrant costumes, with intricate patterns and designs.

Center: A man and a woman appear to be in a dynamic pose. The man is lifting the woman in a dance-like move while she stretches her arms outward gracefully. Her attire includes a fitted dress with floral motifs and a flower crown. The man is wearing a patterned outfit, and his headpiece suggests cultural significance.

Background: Behind them is an elaborate backdrop featuring a large stylized figure, resembling traditional carvings or sculptures, surrounded by radiating designs that evoke a sunburst or ceremonial energy.

Left: Another person wearing a detailed costume is standing next to a tall drum, possibly playing it. The attire includes a patterned outfit and a mask with horn-like protrusions.

Right: A seated figure is dressed in a costume featuring layers of grass or straw-like material, positioned with a smaller drum or object. Their head adornment or mask indicates cultural symbolism.

The overall setting suggests a performance, ceremony, or celebration of cultural heritage, with elements of music, dance, and traditional dress. The negative format adds to the abstract appearance of the image.

Created by gpt-4o-2024-08-06 on 2025-06-18

The image appears to be a black and white photographic negative of a theatrical or dance performance with a cultural theme. In the center, there is a dancer lifting a female performer who is striking an elegant pose with one leg in the air. The female performer is smiling and wearing a strapless dress adorned with a floral pattern or decoration, and her hair is styled with flowers. The male dancer's attire features bold patterns and a headpiece resembling a headdress.

To the left, a figure wearing a costume with a large mask and holding a drum is visible, suggesting they might be a part of the performance or providing music. On the right, another figure is sitting behind a set of bongo-like drums and is also adorned in an elaborate costume with patterned textures and possibly a grass skirt.

The background includes an intricate set design with large, stylized circular patterns and what seems to be a large face or mask in the center, contributing to a possibly tribal or ceremonial atmosphere. The presence of labels at the edge of the photograph, such as "KODAK" and numbers, indicates that this is a vintage or archival image.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-20

The image appears to depict an elaborate theatrical or performance scene. It shows a person dressed in an ornate, colorful costume with feathers and masks, posing dramatically with outstretched arms. The background features various tribal or indigenous-inspired artistic elements, including large carved figures, textured surfaces, and a central sun-like motif. The overall scene has a mystical, ritualistic quality to it, suggesting a cultural or spiritual performance or ceremony. The black and white photograph gives the image a timeless, dramatic quality.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-20

This is a black and white photograph that appears to be from a theatrical or dance performance with an exotic or tribal theme. The scene features a dancer in dramatic pose, with one leg extended, wearing what appears to be traditional or ceremonial costume. The stage setting includes several decorative elements including drums, tribal masks, and a large stylized face or mask with a radiating sunburst pattern behind it. The overall composition has a very theatrical, staged quality with dramatic lighting effects. The set design incorporates various textural elements like straw or grass-like materials and patterned surfaces. This appears to be a professional documentation of what was likely an artistic performance piece exploring cultural or ritualistic themes.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-05

The image is a black-and-white photograph of a man and woman performing in a theatrical setting. The man, dressed in a costume with a mask, is dancing with his arms outstretched, while the woman, also wearing a costume, stands to his left with her arms extended. The background features a large, abstract painting or mural, with various objects such as a drum, a statue, and a table with a vase on it.

The overall atmosphere of the image suggests a performance or rehearsal for a theatrical production, possibly a play or musical. The costumes and props used by the performers add to the sense of drama and spectacle, while the abstract background painting creates a unique and visually striking setting.

The image appears to be a vintage photograph, likely taken in the mid-20th century, given the style of the costumes and the equipment used. The presence of a Kodak label on the right side of the image suggests that it may have been taken using a Kodak camera.

Overall, the image captures a moment of creative expression and performance, showcasing the talents of the two individuals involved. The use of costumes, props, and an abstract background creates a visually striking and dynamic scene that draws the viewer's attention.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-05

The image is a black-and-white photograph of a man and woman in costume, posing in front of a backdrop with various objects. The man is wearing a mask and a long-sleeved shirt with a patterned design, while the woman is wearing a dress with a floral pattern. They are both holding their arms out to the sides, and the man has his right leg bent at the knee.

In the background, there are several objects that appear to be part of a stage set or display. These include a large drum, a statue of a person, and a wall with a patterned design. The overall atmosphere of the image suggests that it was taken during a performance or event, possibly a theatrical production or a cultural celebration.

The image has a vintage feel to it, with a grainy texture and a faded quality that suggests it may have been taken many years ago. The use of black and white also adds to the nostalgic feel of the image, giving it a timeless quality that transcends modern technology and trends. Overall, the image is a fascinating glimpse into the past, offering a unique window into a bygone era and a chance to appreciate its beauty and creativity.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-04

The image is a black-and-white photograph of a stage performance featuring a dancer in a dramatic pose. The dancer is wearing a mask and a costume that appears to be inspired by tribal or indigenous themes. The dancer's arms are extended outwards, and their legs are lifted, creating a dynamic and eye-catching pose. Behind the dancer, there is a backdrop that features a large, abstract design with a central figure that resembles a deity or spirit. The backdrop is illuminated by a bright light, casting shadows and highlights on the dancer and the surrounding objects. On either side of the dancer, there are two smaller figures, possibly representing spirits or ancestors. The image also includes a watermark with the number "34983" in the bottom right corner.

Created by amazon.nova-pro-v1:0 on 2025-06-04

The image is a black-and-white photograph of a performance, possibly from a stage show. The central figure is a woman who is performing a dance while holding a man's hands. She is wearing a dress with a floral pattern and is in a dynamic pose, with her legs spread apart and her arms extended. The man is dressed in a costume that includes a mask and a patterned outfit. Behind them are two large figures, possibly puppets or statues, one on each side, adding to the theatrical atmosphere. In the background, there is a large, ornate backdrop with a central figure and radiating lines, which could be part of the set design. The overall composition suggests a scene from a performance that combines dance, costume, and set design to create a visually striking tableau.

Google Gemini

Created by gemini-2.0-flash on 2025-05-18

Here is a description of the image:

This black and white photo appears to be a film negative, capturing a scene that looks like a theatrical performance or a photo shoot with a cultural theme. In the foreground, a man wearing a tribal or ritualistic mask and attire stands with one leg raised, seemingly in mid-movement or dance pose. To his left, another figure, possibly also masked and costumed, stands near a drum.

In the background, there is a backdrop with various artistic elements, including what seems to be a stylized figure, possibly an idol or representation of a deity. To the right of the backdrop, a woman in a floral swimsuit is suspended in mid-air, extending her arm toward the mask of the man standing next to her.

The overall impression is one of a staged, vibrant cultural expression, captured in a vintage or archival format, given the Kodak text on the right side of the frame and the large number at the bottom of the image.

Created by gemini-2.0-flash-lite on 2025-05-18

Here's a description of the image:

Overall Impression:

The image is a black and white photograph, a negative, capturing a dynamic scene of what appears to be a theatrical or performance-based moment. The figures are wearing elaborate costumes and performing in front of an artistic backdrop.

Key Elements:

Central Figures: Two main figures dominate the scene. A man is in a dynamic, leaping pose; he is wearing an intricately designed mask, a colorful costume, and leggings, and is positioned either to kick or to leap. A woman wearing a headpiece is lying on top of the man in a graceful pose.

Supporting Characters/Props: To the left, a figure, possibly another performer, is dressed in a full-length, patterned costume with a mask that has long horns/antlers. It appears to be interacting with a drum and is standing next to a wooden barrel. To the right, another figure, wearing a skirt made of the same material as the man's costume and patterned with triangles, is standing behind a table. The backdrop is heavily decorated with large circular designs, a drawing of a face, and foliage, creating a sense of a ritualistic or stage-like setting.

Setting/Ambience: The scene has an air of ritual, performance, or theatre about it. The masks, costumes, and backdrop suggest a cultural, artistic, or storytelling context. The lighting appears to be artificial, possibly from spotlights, as the image has distinct shadows.

Composition: The photo is well-composed. The leaping figure is the focal point, creating movement and capturing the eye. The supporting figures and backdrop complete the story.

Overall, the image is a striking visual representation of a performance art or theatrical event.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-03

The image depicts a scene that appears to be a cultural or ceremonial display, possibly from a museum or exhibition. The focal point is a large, intricately designed backdrop featuring a stylized face with a headdress or sunburst design. In front of this backdrop, there are two large figures dressed in traditional attire, which includes elaborate masks and costumes. One figure on the left is wearing a patterned dress and a mask with antlers, while the figure on the right is adorned with a grass skirt and a similarly detailed mask.

In the foreground, a person dressed in traditional clothing, including a patterned jacket and pants, stands with their back to the camera, facing the display. The setting suggests a focus on indigenous or traditional art and culture, with the costumes and backdrop indicating a rich heritage and ceremonial significance. The overall atmosphere is one of cultural preservation and presentation.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-11

The image appears to be a black-and-white photograph of a performance or dance scene. Two individuals are the focal point of the image, engaged in a dynamic pose. The person on the left, dressed in a costume that includes a headdress with antlers, is balancing an individual on their back. The person on the right is wearing a patterned dress and is being held in mid-air by the person on the left.

The background features a large, ornate decorative piece with circular patterns and what seems to be a carved figure. There are also some other figures or sculptures in the background, including one that resembles a seated figure wearing a headdress. The stage is adorned with fabric and other decorative elements, and there is a drum to the left of the performers, suggesting a cultural or ceremonial context.

The image has a vintage quality, indicated by the text in the bottom right corner, which reads "34983" and includes "KODAK" and "EVE EIX," which could be a photographer's or catalog code. The overall atmosphere suggests a theatrical or cultural performance, possibly from the mid-20th century.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-11

This black-and-white image appears to be a stylized and surreal photograph featuring a group of performers or models. The central figure is a woman in a patterned dress, striking a dramatic pose with one leg lifted and arms extended. Surrounding her are three figures in elaborate costumes. The figure on the left wears a mask with horns and a long dress, the figure on the right is seated on a drum-like object with a mask, and the figure behind the woman is partially obscured but also wearing a mask and a patterned outfit. The background is decorated with large, circular patterns and silhouettes, giving the image a theatrical or ritualistic atmosphere. The overall composition and styling suggest a blend of performance art and cultural or mystical themes.