Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Estimate |
| --- | --- |
| Age | 23-33 |
| Gender | Male, 56.5% |
| Happy | 64.5% |
| Surprised | 25.8% |
| Calm | 4.4% |
| Fear | 3.5% |
| Disgusted | 0.6% |
| Confused | 0.5% |
| Sad | 0.4% |
| Angry | 0.3% |

Feature analysis

Amazon

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Person | 99.2% |

Categories

Imagga

Created on 2022-01-09

| Category | Confidence |
| --- | --- |
| streetview architecture | 91.8% |
| paintings art | 7.9% |

Captions

Microsoft

Created by unknown on 2022-01-09

| Caption | Confidence |
| --- | --- |
| a group of people posing for a photo | 78.9% |
| a group of people posing for the camera | 78.8% |
| a group of people posing for a picture | 78.7% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-15

photograph of a family at a christmas party.

Salesforce

Created by general-english-image-caption-blip on 2025-05-22

a photograph of a man and two dogs are standing in front of a tent

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-13

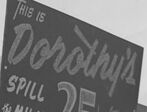

The image depicts two individuals standing in front of a carnival booth or game area, holding plush toys and prizes. The booth has a sign that reads "This is Dorothy's Spill the Milk 25¢" indicating a game where participants likely knock down milk bottles to win prizes. The background shows other stuffed animals displayed as prizes and additional activity in the carnival setting. The scene has a lively and celebratory atmosphere, captured in a black-and-white, inverted tone.

Created by gpt-4o-2024-08-06 on 2025-06-13

The image depicts a carnival or fair scene with two individuals holding large plush toys. The setting includes a booth or tent, prominently displaying signs related to carnival games, specifically a sign that reads "Dorothy’s Spill Milk 25¢" and encouraging a win. The booth is decorated with various plush toys and prizes. The overall atmosphere suggests a festive and playful environment typical of fairs, with people visible in the background, possibly engaging with other games or attractions. The image is a black and white negative, which inversely displays lights and shadows.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-19

The image shows a carnival or fair setting, with a booth or stall in the background displaying various stuffed animals and other toys for sale. In the foreground, there are two people, a woman and a young child, posing with several stuffed animals, including what appears to be a large teddy bear. The woman is wearing a patterned dress, and the child is holding a smaller stuffed animal. The overall scene suggests a lively and festive atmosphere, with the booth and its merchandise creating a sense of excitement and anticipation.

Created by us.anthropic.claude-3-opus-20240229-v1:0 on 2025-06-19

The black and white photograph shows a smiling woman and young girl posing together while holding stuffed animal toys at what appears to be a fair or carnival. In the background, there are booths or stalls set up with various items for sale and crowds of people milling about. A sign advertising "Dorothy's Spill & Milk 25 cents" is visible, suggesting this may be some kind of game or attraction at the event. The woman and girl both look happy and are dressed casually, likely enjoying a fun day out together at the fair.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-19

This appears to be a black and white photograph from what looks like a carnival or fair setting. There's a game or prize booth visible with stuffed animals or prizes on display. Several stuffed animals, possibly teddy bears, are hanging from the booth. There's a sign visible in the image that appears to be a price board. The image has that classic vintage feel, likely from the mid-20th century based on the style and quality of the photograph. The booth appears to be set up outdoors, as you can see what looks like ground or grass at the bottom of the image.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-10

The image is a black and white photograph of two people, a man and a woman, standing in a tent at an event. The man is holding a stuffed animal, possibly a teddy bear, and the woman is holding a stuffed animal that appears to be a sheep. There are other stuffed animals on display behind them.

The background of the image shows a tent with a sign that reads "Dorothy's Spill da Milk" and a price of 25 cents. There are also other people in the background, some of whom are looking at the stuffed animals on display.

Overall, the image suggests that the man and woman are at a fair or carnival, where they are browsing through a display of stuffed animals. The sign in the background indicates that the stuffed animals are being sold by someone named Dorothy, and the price of 25 cents suggests that they are affordable for most people.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-10

The image is a black and white photograph of a man and woman standing together, each holding a stuffed animal. The man is on the left side of the image, wearing a light-colored shirt and dark pants. He has short hair and is smiling at the camera. In his right hand, he holds a large teddy bear.

To his right stands the woman, who has short hair and is also smiling at the camera. She wears a light-colored jacket over a light-colored top and dark pants. In her left hand, she grasps a leash attached to two stuffed dogs, one of which appears to be a poodle. The background of the image shows a tent with various stuffed animals on display, including more teddy bears and dogs. A sign above the man's head reads "This is Dorothy's Spill Milk 25 cents." The overall atmosphere suggests that the couple is at a fair or carnival, enjoying themselves amidst the festive surroundings.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-08

The image shows a black-and-white photograph of two women wearing white face paint standing in front of a booth. The booth has a sign that reads "This is Dorothy's Spill Milk 25 cents." The women are holding stuffed animals, and one of them is holding a stuffed dog. The booth is filled with stuffed animals, and there is a table with a white cloth and a stuffed animal on it. The image has a watermark with the text "63976" and "2013-07-20" in the bottom right corner.

Created by amazon.nova-pro-v1:0 on 2025-06-08

A black-and-white photo of two people standing under a tent with a sign that reads "This is Dorothy's Spill Milk 25." The two people are smiling and posing for a photo. The person on the left is holding a stuffed toy, while the person on the right is holding a dog and a stuffed toy. Behind them is a table with stuffed toys on top, and a person is standing near it.

Google Gemini

Created by gemini-2.0-flash on 2025-05-17

In an eerie, high-contrast black and white photograph, a fairground scene unfolds, centered around a young couple proudly displaying their winnings. The negative presentation of the image casts an unusual light on the textures and details of the scene.

The young man, standing tall, holds a plush bear close to his chest, while the young woman beside him is laden with several plush dog toys. They both emanate joy and accomplishment, their smiles mirroring the excitement of a fairground victory.

Behind them, the background reveals a partially visible booth labeled "Dorothy's Spill the Milk" with a price of 25 cents, suggesting the game from which they won their prizes. The booth is adorned with an array of other stuffed animals, hinting at the variety of prizes available.

Further in the background, other fairgoers mill about, adding depth and life to the setting. A tent structure is visible, adding to the fairground atmosphere. The high contrast amplifies the textures of the plush toys, the fabric of the booth, and the general fairground environment, making the image visually striking and intriguing.

Created by gemini-2.0-flash-lite on 2025-05-17

Certainly! Here's a description of the image:

Overall Impression:

This is a vintage black-and-white photograph, likely taken at a fair or carnival. It features two young people holding stuffed animals, with a booth and other fairgoers in the background.

Foreground:

- A young man and woman are the main subjects, both smiling.

- The man is holding a large stuffed teddy bear.

- The woman is holding the leashes of several stuffed poodle-like dogs.

- The stuffed animals are prizes that were likely won at the fair.

Background:

- A booth is visible behind the man. A sign on the booth reads "This is Dorothy's Spill the Milk 25¢". The booth itself seems to be a game where players try to "spill the milk" to win prizes.

- More prizes, likely stuffed animals, are on display in the booth.

- Other fairgoers are visible in the distance, suggesting a lively and crowded atmosphere.

- There's a clear sense of a fun, festive event.

Composition and Style:

- The photograph has a clear focus on the subjects in the foreground.

- It appears to have been taken with natural lighting, likely outdoors.

- The image has a strong sense of nostalgia due to its vintage style.

Overall, it's a charming snapshot of a day at a fair, capturing the joy and excitement of winning prizes.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-02

The image depicts a scene from what appears to be a carnival or fair. In the foreground, there are two individuals standing together, smiling. The person on the left is holding a large teddy bear, and the person on the right is holding two small dogs on leashes. The dogs are groomed and appear to be poodles.

Above them, there is a sign that reads "This is Dorothy's Spill the Milk 25¢," which suggests that they might be at a game booth where the objective is to spill milk from bottles by throwing a ball.

In the background, there are various carnival games and attractions. There are several stuffed animal prizes visible, including teddy bears and other plush toys. The area is bustling with activity, and other people can be seen participating in games and walking around. The overall atmosphere appears lively and festive.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-07

This black-and-white image appears to be from a fair or exhibition, likely from a past era given the style of clothing and signage. The scene includes two individuals in the foreground who are dressed in costumes that resemble milkmaids, with one holding a teddy bear and the other holding two large plush toys that resemble sheep or goats. Both individuals have their faces painted to mimic milk stains, adding to the milkmaid theme. The background features a display with signage reading "This is Dorothy's Spill the Milk 25¢," indicating a booth selling milk-related items or souvenirs. The setting includes other fairgoers and booths, suggesting a lively and bustling atmosphere. The image captures a playful and nostalgic moment, potentially from a promotional event or themed fair.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-07

This black-and-white photograph captures a lively scene at a carnival or fair. In the foreground, a man dressed in a light-colored outfit is holding a large teddy bear. Next to him, a woman is holding a dog on a leash, and another dog is sitting on the ground, seemingly interacting with the first dog. Behind them, there is a booth with a sign that reads "THIS IS Dorothy's SPILL the MILK 25¢," indicating a game where participants can win prizes, including the stuffed animals displayed on the booth. The booth is decorated with various plush toys hanging on the walls, and there are people in the background, adding to the festive atmosphere. The image has a vintage feel, suggesting it was taken in the mid-20th century.