Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 32-48 |
| Gender | Male, 54.7% |
| Angry | 45.1% |
| Sad | 45.3% |
| Calm | 53.8% |
| Disgusted | 45.1% |
| Confused | 45.2% |
| Surprised | 45.5% |
| Fear | 45% |
| Happy | 45% |

Feature analysis

Amazon

AWS Rekognition

| Person | 99.3% |

Categories

Imagga

Created on 2019-11-16

| paintings art | 99.5% |

Captions

Microsoft

Created by unknown on 2019-11-16

| Caption | Confidence |
| --- | --- |
| a group of people standing in front of a mirror | 53.7% |
| a group of people in front of a mirror | 53.6% |
| a person standing in front of a mirror | 53.5% |

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip on 2025-05-02

a photograph of a group of people standing around a table

Created by general-english-image-caption-blip-2 on 2025-07-10

four black and white photos of women in a classroom

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-15

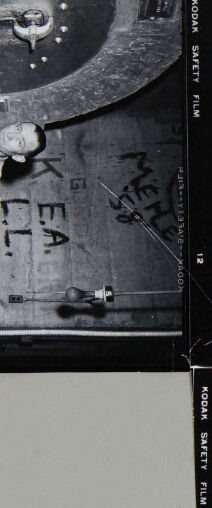

This image consists of three black-and-white photographs.

The upper-left image shows a man working in what appears to be an industrial or mining environment. He stands near a large container filled with coal, and machinery is visible in the background. The wall features graffiti with text including "LEA."

The upper-right image depicts a classroom or educational setting. Several individuals are seated at a table with typewriters, suggesting a typewriting or office training session. Their legs are visible beneath the desk.

The lower-right image shows a classroom scene with people standing and reading from open books. A chalkboard in the background contains handwritten notes or lessons.

Created by gpt-4o-2024-08-06 on 2025-06-15

The image is a composite of three black and white photographs:

The top-left photograph shows a man standing in an industrial setting, possibly a basement or mechanical room. He is wearing work clothes and is beside a large container filled with a dark material, possibly coal. There's a control panel with gauges and valves on the wall behind him, and graffiti that reads "MECA CO."

The top-right photograph depicts four women seated at a table with typewriters. They are engaged in work or a typing class, sitting in a row with papers and typewriters in front of them. The setting appears to be a classroom or office environment.

The bottom photograph displays three individuals standing in front of a blackboard, holding books and reading. It looks like a classroom setting, with chalk writing on the board behind them and a shelf on the side. The individuals are positioned side by side, focused on their books.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-17

The image contains three separate photographs. The top left photograph shows a person lying on a table or platform, surrounded by what appears to be medical or scientific equipment. The top right photograph shows a group of women seated at desks, likely in an office or classroom setting. The bottom photograph shows a group of people, including both men and women, standing around a chalkboard and engaged in what seems to be a discussion or lesson. The images appear to depict various educational or scientific activities from an earlier time period.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-17

This appears to be a vintage black and white photograph, likely from a school or educational setting. The image is split into two parts:

In the top photo, several women are seated at a table or desk, working with what appear to be typewriters or similar office equipment. They're dressed in typical 1940s or 1950s attire, including skirts and blouses.

In the bottom photo, a few students or individuals are standing at what appears to be a chalkboard, holding books or papers, possibly during a study or teaching session. The setting looks institutional, with plain walls and basic furnishings typical of mid-20th century educational facilities.

The overall quality and style of the photographs suggests these were taken sometime in the mid-20th century, possibly as documentation of educational or vocational training programs.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-29

The image presents a collection of three black-and-white photographs, each depicting a distinct scene within a school setting.

Photograph 1:

The top-left photograph features a man standing in front of a large metal door, which is slightly ajar. The door appears to be part of a larger structure, possibly a bunker or a room with a unique design. The man is dressed in a light-colored shirt and pants, and his attire suggests that he may be a maintenance worker or someone responsible for the upkeep of the facility. The background of the photograph reveals a concrete wall with graffiti, adding a touch of urban grit to the scene.

Photograph 2:

The top-right photograph shows a group of four women gathered around a table, engaged in various activities. One woman is typing on an old-fashioned typewriter, while another is holding a piece of paper and looking at it intently. The third woman is seated on a chair, and the fourth woman is standing behind them, observing the scene. The table is cluttered with papers and other objects, giving the impression of a busy workspace. The background of the photograph is a plain gray wall, which helps to focus attention on the women and their activities.

Photograph 3:

The bottom photograph depicts a man and two women standing in front of a chalkboard, engaged in a discussion. The man is holding a piece of paper and appears to be explaining something to the women, who are listening intently. The chalkboard behind them is covered in writing, suggesting that they may be in a classroom or lecture hall. The background of the photograph is a plain gray wall, which helps to create a sense of simplicity and focus on the individuals in the foreground.

Overall, the three photographs provide a glimpse into the daily lives of people working or studying in a school setting. The images showcase a range of activities, from maintenance work to typing and discussions, and offer a unique perspective on the people and places that make up a school community.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-29

The image is a collage of three black-and-white photographs, each depicting a different scene. The top-left photo shows a man in a white shirt and pants standing in front of a large machine with various buttons and levers. The top-right photo features four women sitting at a table, with two of them holding typewriters. The bottom photo shows three people standing in front of a chalkboard, with one person writing on it.

The background of the image is a light gray color, which provides a neutral backdrop for the photographs. Overall, the image appears to be a collection of vintage photographs showcasing different aspects of life, possibly from the mid-20th century.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-04

The image shows four photographs in a collage, depicting various scenes from a classroom setting. The top left photograph shows a person lying on a pile of coal. The top right photograph shows a group of women sitting at a table, each using a typewriter. The bottom left photograph shows a person standing in front of a chalkboard, holding a book, while the bottom right photograph shows a group of students, both male and female, standing in front of a chalkboard, each holding a book.

Created by amazon.nova-pro-v1:0 on 2025-06-04

The image consists of three black-and-white photographs. The first photo shows a man wearing a white shirt and pants, leaning on a machine with a circular object on top. The second photo shows four women sitting on chairs in front of a desk with typewriters. They are wearing dresses and eyeglasses. The third photo shows three people standing in front of a blackboard, with one of them holding a book.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-11

Here's a description of the image:

The image is a series of three black and white photographs arranged on a light gray background, likely a contact sheet or a layout of negatives. Each of the photographs depicts a different scene:

- The Man in the Boiler Room: The first photo shows a man in a boiler room. He appears to be wearing a light-colored shirt and pants and is standing near a coal pile. He is looking directly at the camera. There are industrial components in the background. The boiler room has graffiti on the wall.

- The Typing Class: The second photo shows a group of five young women gathered around a long table. They are all sitting and appear to be in a typing class. Four of them are positioned in front of typewriters. They have neatly arranged hair and are wearing skirts and dresses.

- The Classroom Study: The third photo is of a classroom setting. Three young people are studying in front of a chalkboard. There is writing on the board. They are all holding open books. There is a desk to the side.

Created by gemini-2.0-flash on 2025-05-11

The image is a scanned contact sheet of black and white photographs. The sheet is divided into four frames.

The top left frame features a man in light-colored work clothes standing next to a coal bin, seemingly inside a boiler room. The image is rotated clockwise. The man is looking towards the camera.

The top right frame shows five young women seated at a long table, each with a typewriter in front of them. One of the women is standing behind them.

The bottom right frame captures three students standing in front of a blackboard. They are each holding books and appear to be studying or writing on the board.

The frames are separated by strips of the original film. The words "KODAK SAFETY FILM" are visible along the edges of the film.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-08

The image consists of three black-and-white photographs, each depicting different scenes.

Top Left Photograph:

- This photo shows a person lying on their back inside a large, cylindrical, industrial structure. The person appears to be wearing a white shirt and dark pants. The structure seems to be made of metal and has various mechanical components, including pipes and valves. The person is positioned near a large opening, possibly a hatch or door, and is looking upwards.

Top Right Photograph:

- This photo features five young women seated at a long table in what appears to be a classroom or office setting. They are using typewriters, suggesting a typing class or a secretarial training session. The women are dressed in casual clothing typical of the mid-20th century. The room has a plain background, and the table is equipped with multiple typewriters.

Bottom Right Photograph:

- This photo shows three individuals standing in front of a blackboard in a classroom setting. They are holding books and appear to be reading or studying. The blackboard behind them has some writing on it, indicating an educational environment. The individuals are dressed in formal attire, with the women wearing skirts and the man in a suit.

Overall, the images capture various educational and industrial settings from a past era, highlighting different aspects of learning and work environments.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-20

This image is a black-and-white photograph that has been divided into four quadrants, each depicting a different scene. Here's a description of each quadrant:

Top Left Quadrant: This quadrant shows a person lying on a large, industrial-looking surface with some text and symbols etched into it. The person appears to be in a relaxed or possibly unconscious position with their legs bent. The surface looks heavy-duty, possibly a machine part, with mechanical details and what seems to be a control panel or instrumentation at the top.

Top Right Quadrant: This quadrant features a group of four individuals seated at a table with typewriters in front of them. The setting appears to be a classroom or office, characterized by plain walls and a simple, unadorned desk. The individuals are dressed in attire that suggests a formal or professional context, possibly from the mid-20th century.

Bottom Left Quadrant: This quadrant is blank and features a plain white space, possibly a placeholder or an intentional design choice for the image.

Bottom Right Quadrant: This quadrant shows a group of four individuals standing in front of a chalkboard. They are engaged in a discussion or presentation, as some of them hold papers and one appears to be writing on the board. The environment suggests an educational or professional setting, possibly a classroom or seminar room.

The image as a whole appears to be from a historical context, judging by the clothing, typewriters, and the style of the photographs. The presence of Kodak Safety Film suggests that it is a photograph taken on film, which aligns with the historical aspect.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-20

This image appears to be a collection of three black-and-white photographs, likely from a vintage or archival source, as indicated by the "Kodak Safety Film" markings on the photos. The photographs depict various scenes that seem to be from a school or educational setting:

The top-left photograph shows a person dressed in a white coat lying on a bed or table next to a large circular object, possibly a furnace or a piece of industrial equipment. The person appears to be in a relaxed or possibly unconscious state, and there are various tools and objects around them.

The top-right photograph shows a group of four women sitting at a long table, each working on a typewriter. They are dressed in professional attire, suggesting a formal or office environment.

The bottom-right photograph shows three individuals standing at a chalkboard. Two of them are holding papers and appear to be discussing or studying something, while the third person is writing on the chalkboard. The setting appears to be a classroom or study area.

The overall theme of these photographs seems to be related to education, work, and professional activities from a past era.