Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Estimate |
| --- | --- |
| Age | 26-43 |
| Gender | Male, 77.1% |
| Angry | 12.5% |
| Calm | 17.1% |
| Happy | 36.4% |
| Surprised | 4% |
| Sad | 22.9% |
| Disgusted | 2.5% |
| Confused | 4.7% |

Feature analysis

Amazon

AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Person | 98.2% |

Categories

Imagga

Created on 2019-03-25

| Category | Confidence |
| --- | --- |
| streetview architecture | 54.2% |
| people portraits | 18.6% |
| paintings art | 14% |
| text visuals | 6.4% |
| events parties | 5.3% |

Captions

Microsoft

Created by unknown on 2019-03-25

| Caption | Confidence |
| --- | --- |
| a person standing in front of a mirror posing for the camera | 32.6% |
| a group of people standing in front of a mirror posing for the camera | 32.5% |
| a person standing in front of a mirror | 32.4% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-14

the crew of the space shuttle endeavour.

Salesforce

Created by general-english-image-caption-blip on 2025-05-16

a photograph of a group of people standing around a juke box

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-15

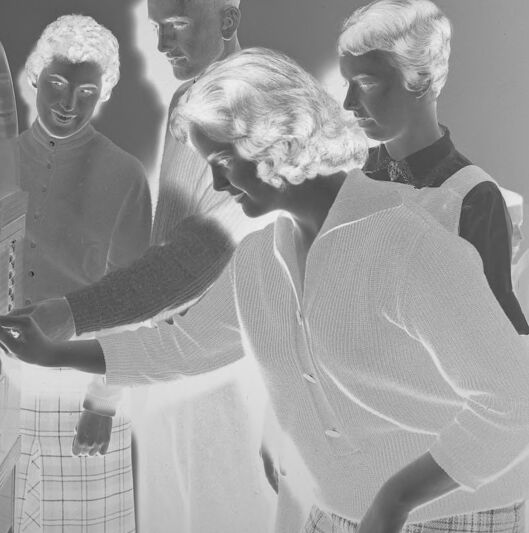

This image is a photographic negative depicting a group of individuals gathered around a jukebox. The jukebox features a curved, glass dome and visible internal components, such as a record player. One person is reaching forward, likely to select a song. The clothing style and hairstyles indicate a vintage setting, possibly mid-20th century.

Created by gpt-4o-2024-08-06 on 2025-06-15

The image is a photographic negative showing a group of people gathered around a jukebox. One person is actively operating the jukebox, possibly selecting a song or inserting a coin. The jukebox is distinctively styled with a rounded transparent cover, revealing part of its mechanism inside. The clothing style of the individuals suggests a mid-20th-century setting, with one person wearing a sweater and another in a cardigan. In the background, there is another person seated, appearing to be part of the same group. The image has text indicating it's Kodak Safety Film along the right side, reinforcing that this is a film negative. The overall atmosphere suggests a social gathering or communal activity focused on music and entertainment.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-20

The image appears to be a black and white photograph depicting several people, likely models or actors, posing in front of what seems to be a television or electronic device. The individuals have distinctive hairstyles and clothing that suggest the image was taken in a past era, perhaps the 1950s or 1960s. The overall composition and lighting create a dramatic, cinematic feel to the scene.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-20

This appears to be a vintage black and white photograph, likely from the 1950s or early 1960s, showing a group of people gathered around what appears to be a jukebox or similar music playing device. The image has an interesting negative or inverted quality to it, where the subjects appear bright against a darker background. The people in the photo are wearing typical mid-century casual clothing including sweaters and what looks like a plaid or patterned skirt. The jukebox or music player has the characteristic curved top and illuminated display panel typical of that era's entertainment equipment. The composition suggests this might be in a diner, social club, or similar recreational setting where people would gather to listen to music.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-27

The image is a black-and-white photograph of a group of people gathered around a jukebox.

The jukebox is on the left side of the image. It has a rounded top and a flat front. The front of the jukebox is made of glass or plastic, and it has a series of buttons and dials on the front. The jukebox is standing upright, and it appears to be in good condition.

There are five people in the image, all of whom are standing around the jukebox. They are all wearing casual clothing, and they appear to be in their 20s or 30s. The people are all looking at the jukebox, and they seem to be engaged in conversation.

The background of the image is a plain wall. There is a watermark in the bottom-right corner of the image that says "M.J. TEEA - 2 - KODAK." The overall atmosphere of the image is one of nostalgia and simplicity. The people in the image appear to be enjoying each other's company, and the jukebox seems to be the center of attention.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-27

This image is a black-and-white photograph of a group of people gathered around a jukebox. The individuals are dressed in attire typical of the 1950s, with the women wearing dresses and the men sporting short haircuts.

The jukebox itself features a distinctive design, characterized by a large, rounded top and a flat front panel adorned with buttons and a dial. The overall atmosphere of the image suggests a lively gathering, possibly at a diner or restaurant, where the group is enjoying music and each other's company.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-05-27

The image is a black-and-white photograph of a group of people standing around a jukebox. The people are dressed in vintage clothing, and the woman in the foreground is wearing a white blouse and a plaid skirt. The jukebox is a large, old-fashioned machine with a glass front, and it is surrounded by several people. The image has a slightly blurry effect, giving it a nostalgic and vintage feel.

Created by amazon.nova-pro-v1:0 on 2025-05-27

The black-and-white image shows a group of people standing around a jukebox. The people are wearing formal attire. The woman in front is wearing a white sweater and is touching the jukebox with her right hand. The man standing next to her is wearing a white shirt and a tie. Behind them, a woman is wearing a dress and a bracelet. The background is blurry, and it seems like they are in a restaurant.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-17

Here's a description of the image:

Overall Impression: The image appears to be a vintage photograph, likely from the mid-20th century, rendered in a negative format. This gives it a unique and striking appearance, with the light and dark values reversed. It captures a group of young people interacting around a jukebox.

Key Elements:

- Jukebox: A prominent feature, positioned on the left. It's a classic jukebox design with a glass front showcasing the internal mechanisms, likely including the records.

- People: There are five people in the scene. They are all young, possibly in their teens or early twenties, and dressed in clothing typical of the era.

- Activity: One person appears to be selecting a song on the jukebox. The others are watching, possibly anticipating the chosen music.

- Setting: The environment suggests a casual setting, perhaps a diner, cafe, or recreational space.

Composition and Style:

- The photograph has a documentary quality, capturing a moment in time with a degree of informality.

- The negative effect emphasizes the light and shadows, creating a dramatic feel.

In essence, the image provides a glimpse into a moment of leisure and enjoyment from a bygone era, with the central focus on the joy of music through the jukebox.

Created by gemini-2.0-flash on 2025-05-17

The image shows an inverted black and white image of a group of young people using a jukebox. A young woman is pointing at the buttons to select a song. Next to her is a young man wearing a light colored sweater, and behind them are two young women, one of whom is wearing a dark dress with a white collar. Behind the group sits another young woman on a bench. The jukebox is on the left of the image, and it appears to be a classic model with a curved top and a display of records inside. The image has a high contrast and a grainy texture.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-28

The image appears to be a vintage black-and-white photograph featuring a group of people gathered around a jukebox. The jukebox is a classic model with a domed top and a selection of records visible inside. The people in the image are dressed in clothing that suggests a mid-20th-century style, with the women wearing dresses and the men in suits or sweaters. The individuals seem to be engaged in selecting or listening to music from the jukebox, indicating a social or recreational setting. The overall atmosphere of the image conveys a sense of nostalgia and camaraderie.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-13

The image is a black-and-white photograph featuring a group of individuals standing in front of a vintage jukebox. The jukebox is an older model, likely from the mid-20th century, with a curved top and a clear window through which the internal mechanisms can be seen. The individuals appear to be dressed in attire that suggests a mid-20th-century fashion style, with short-sleeved shirts, skirts, and button-up tops. The lighting in the image is somewhat dramatic, casting shadows and giving the scene a nostalgic feel. The overall composition suggests a casual, social setting, possibly a gathering or a public event where music could be played using the jukebox.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-13

This black-and-white photograph appears to show a group of people, possibly a family, interacting with a jukebox. The individuals are dressed in what seems to be 1950s or 1960s fashion, with the women wearing dresses and the man in a suit or similar attire. The jukebox is a prominent feature in the foreground, with one of the individuals selecting a record. The image has a vintage feel, likely due to the clothing styles and the presence of the jukebox, which was a common entertainment device during that era. The photograph also has a slight double exposure effect, adding to its artistic and nostalgic quality.