Machine Generated Data

Tags

Amazon

created on 2022-01-15

Clarifai

created on 2023-10-25

Imagga

created on 2022-01-15

| Tag | Confidence |
| --- | --- |
| man | 36.3 |
| person | 31.3 |
| people | 31.2 |
| male | 29.8 |
| adult | 25.1 |
| men | 19.7 |
| working | 18.6 |
| indoors | 18.4 |
| worker | 17 |
| patient | 16.1 |
| lifestyle | 15.9 |
| professional | 15.5 |
| work | 14.9 |
| room | 14.8 |
| kitchen | 14.3 |
| musical instrument | 14.3 |
| portrait | 14.2 |
| crutch | 14 |
| happy | 13.8 |
| smiling | 13.7 |
| equipment | 13.2 |
| office | 12.4 |
| job | 12.4 |
| home | 12 |
| business | 11.5 |
| medical | 11.5 |
| casual | 11 |
| occupation | 11 |
| staff | 10.8 |
| holding | 10.7 |
| computer | 10.4 |
| surgeon | 10.3 |
| device | 10 |
| shop | 9.9 |
| instrument | 9.7 |
| stick | 9.6 |
| black | 9.6 |
| case | 9.4 |
| happiness | 9.4 |
| back | 9.2 |
| hospital | 9.1 |
| chair | 9 |
| health | 9 |
| music | 9 |
| fun | 9 |
| one | 9 |
| newspaper | 8.9 |
| handsome | 8.9 |
| women | 8.7 |
| standing | 8.7 |
| tool | 8.5 |
| senior | 8.4 |
| musician | 8.4 |
| hand | 8.4 |
| camera | 8.3 |
| human | 8.2 |
| team | 8.1 |
| clothing | 8.1 |
| hairdresser | 8 |
| percussion instrument | 8 |
| interior | 8 |
| businessman | 7.9 |
| life | 7.8 |
| sixties | 7.8 |
| 60s | 7.8 |
| education | 7.8 |
| modern | 7.7 |
| sick person | 7.7 |
| profession | 7.7 |
| active | 7.7 |
| two | 7.6 |
| doctor | 7.5 |
| leisure | 7.5 |
| cheerful | 7.3 |
| industrial | 7.3 |
| group | 7.3 |
| student | 7.2 |
| looking | 7.2 |
| face | 7.1 |
| medicine | 7 |

Google

created on 2022-01-15

| Tag | Confidence |
| --- | --- |
| Black-and-white | 84.7 |
| Style | 83.8 |
| Chair | 73.1 |
| Monochrome photography | 72.3 |
| Monochrome | 71.4 |
| Vintage clothing | 70.7 |
| Machine | 70.3 |
| Art | 68.6 |
| Room | 67.4 |
| Font | 66.7 |
| Hat | 64.9 |
| Classic | 64.4 |
| Sitting | 64.1 |
| Stock photography | 64.1 |
| Home appliance | 59.9 |
| Photo caption | 57.9 |
| Fashion design | 57.6 |
| Visual arts | 53.1 |
| Motor vehicle | 52.9 |
| Photographic paper | 52.6 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

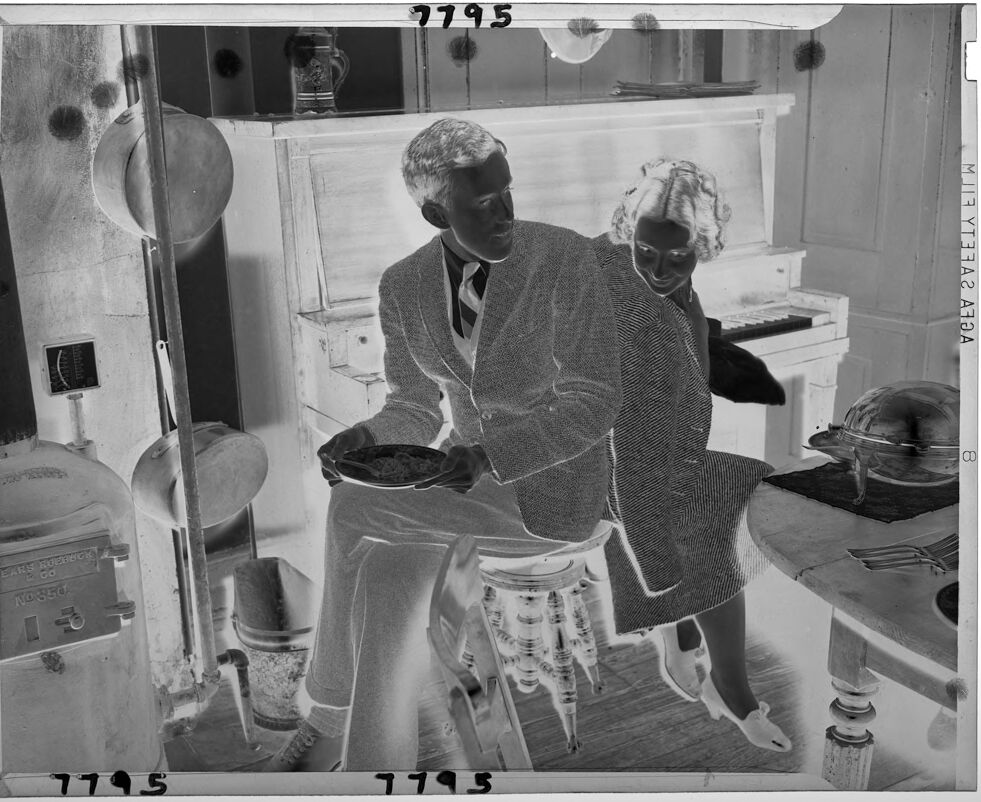

| Attribute | Value |
| --- | --- |
| Age | 29-39 |
| Gender | Female, 89.9% |
| Calm | 98% |
| Happy | 1.1% |
| Sad | 0.5% |
| Angry | 0.1% |
| Disgusted | 0.1% |
| Surprised | 0.1% |
| Fear | 0.1% |
| Confused | 0.1% |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| streetview architecture | 91.6% |
| paintings art | 6.7% |

Captions

Microsoft

created on 2022-01-15

| Caption | Confidence |
| --- | --- |
| a person standing in front of a refrigerator | 43.2% |
| a man and a woman standing in front of a refrigerator | 27.6% |
| a person that is standing in front of a refrigerator | 27.5% |

Text analysis

Amazon

8

350

EARS

GO

7795

NO 350

NO

MJ17

779.5

EARS ROLBERK

MJ17 YEER AFOA

AFOA

YEER

For

ROLBERK

7795

EARS ROEHRY GK

NO350.

1195

1195

MIR YT33A2 AA

7795

EARS

ROEHRY

GK

NO350.

1195

MIR

YT33A2

AA