Machine Generated Data

Tags

Amazon

created on 2022-01-15

Clarifai

created on 2023-10-26

Imagga

created on 2022-01-15

| Tag | Score |
|---|---|
| people | 27.3 |
| adult | 22.9 |
| person | 22 |
| man | 21.5 |
| clothing | 20.9 |
| newspaper | 20 |
| medical | 18.5 |
| male | 18.4 |
| nurse | 17.3 |
| professional | 15.8 |
| product | 14.8 |
| laboratory | 14.5 |
| work | 14.3 |
| doctor | 14.1 |
| medicine | 13.2 |
| hospital | 12.9 |
| men | 12.9 |
| worker | 12.7 |
| health | 12.5 |
| portrait | 12.3 |
| clothes | 12.2 |
| smile | 12.1 |
| team | 11.6 |
| blackboard | 11.6 |
| creation | 11.5 |
| human | 11.2 |
| patient | 11.2 |
| business | 10.9 |
| equipment | 10.7 |
| lab | 10.7 |
| happy | 10.6 |
| fashion | 10.6 |
| surgeon | 10.5 |
| indoors | 10.5 |
| garment | 10.1 |
| dress | 9.9 |
| scientist | 9.8 |
| attractive | 9.8 |
| chemistry | 9.7 |
| research | 9.5 |
| biology | 9.5 |
| instrument | 9.5 |
| window | 9.3 |
| room | 9.1 |
| lady | 8.9 |
| coat | 8.9 |
| home | 8.8 |
| scientific | 8.7 |
| chemical | 8.7 |
| smiling | 8.7 |
| crutch | 8.7 |
| day | 8.6 |
| happiness | 8.6 |
| profession | 8.6 |
| casual | 8.5 |
| black | 8.4 |
| pretty | 8.4 |
| student | 8.3 |
| care | 8.2 |
| clinic | 8.2 |
| technology | 8.2 |
| family | 8 |
| working | 7.9 |
| lifestyle | 7.9 |
| art | 7.9 |
| full length | 7.8 |
| corporate | 7.7 |
| industry | 7.7 |
| staff | 7.7 |
| illness | 7.6 |
| development | 7.6 |
| hand | 7.6 |
| adults | 7.6 |
| elegance | 7.6 |
| one | 7.5 |
| occupation | 7.3 |
| 20s | 7.3 |
| looking | 7.2 |
| women | 7.1 |
| science | 7.1 |
| job | 7.1 |
| interior | 7.1 |
| modern | 7 |

Google

created on 2022-01-15

| Tag | Score |
|---|---|
| Hat | 90.1 |
| Sun hat | 84.4 |
| Adaptation | 79.2 |
| Snapshot | 74.3 |
| Art | 74.1 |
| Picture frame | 73.7 |
| Vintage clothing | 72.8 |
| Monochrome | 71 |
| Monochrome photography | 70.4 |
| Event | 67.3 |
| Photo caption | 66.7 |
| Illustration | 66.1 |
| Stock photography | 65.1 |
| History | 64.6 |
| Victorian fashion | 64.1 |
| Font | 63.9 |
| Fedora | 63.1 |
| Room | 62.9 |
| Classic | 60.9 |
| Sitting | 57.4 |

Face analysis

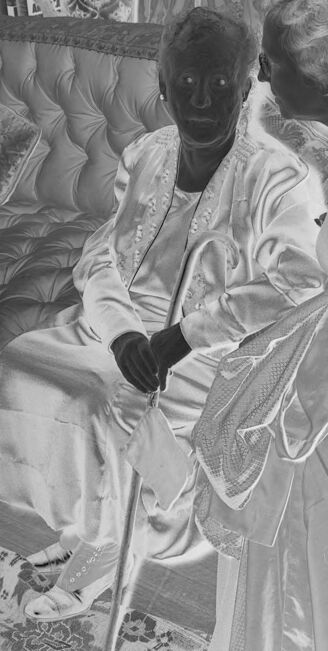

Amazon

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 49-57 |
| Gender | Female, 92.5% |
| Sad | 95.1% |
| Fear | 1.4% |
| Calm | 1.2% |
| Confused | 0.7% |
| Angry | 0.5% |
| Disgusted | 0.4% |
| Happy | 0.4% |
| Surprised | 0.3% |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 99.7% |

Captions

Microsoft

created by unknown on 2022-01-15

| Caption | Confidence |
|---|---|
| a group of people around each other | 56.2% |
| a group of people in costumes | 51.8% |
| a group of people | 51.7% |

Text analysis

Amazon

21316

day

THAT day

VSIDE

NAT

N2

SIG. N2

SIG.

USA

vagex

VITRA

sens

-lil

NYHAN

316.

-lil

NYHAN

316.