Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 31-41 |
| Gender | Male, 97.8% |
| Calm | 99.9% |
| Sad | 0% |
| Surprised | 0% |
| Confused | 0% |
| Angry | 0% |
| Disgusted | 0% |
| Fear | 0% |
| Happy | 0% |

Feature analysis

Amazon

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Person | 98.8% |

Categories

Imagga

Created on 2022-01-15

| Category | Confidence |
| --- | --- |
| streetview architecture | 98% |

Captions

Microsoft

Created by unknown on 2022-01-15

| Caption | Confidence |
| --- | --- |
| a group of people standing in front of a store | 86.1% |
| a group of people standing in a room | 86% |
| a group of people standing around each other | 85.9% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-12

a wedding ceremony in the mosque.

Salesforce

Created by general-english-image-caption-blip on 2025-05-19

a photograph of a group of people in costumes and hats

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-18

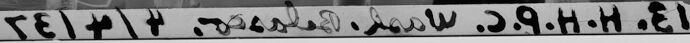

The image is an inverted negative photograph depicting a group of people in formal attire, including coats with fur detailing, dresses, and suits. The setting appears to be opulent, with an ornate wall or ceiling design in the background. Several individuals hold items such as hats or documents, suggesting a formal or social gathering. Steps in the back indicate it could be an entrance or stairway area.

Created by gpt-4o-2024-08-06 on 2025-06-18

The image appears to be a photographic negative and depicts a formal gathering or event. Several men and women are dressed in formal attire typical of an earlier era, possibly the early to mid-20th century. Some men are wearing suits with bow ties, and a few are holding hats. The women are dressed in elegant dresses or gowns, with accessories such as fur stoles. It appears to be set in a grand interior with ornate architectural details visible in the background. The image has a vintage feel, possibly from the 1930s or 1940s, as indicated by the style of clothing and the format of the photographic negative.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-20

The image appears to be a black and white photograph depicting a group of people dressed in formal attire, likely from a historical time period. The individuals are standing in what seems to be an indoor setting, possibly a theater or ballroom, with ornate decorations and architectural features visible in the background. The people in the image are wearing a variety of clothing styles, including fur coats, hats, and formal dresses, suggesting this may be a social or cultural event. The overall scene conveys a sense of formality and celebration.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-20

This appears to be a vintage black and white photograph, likely from the early-to-mid 20th century. The image shows a group of people at what seems to be a formal or social gathering. Some are wearing dark suits or dresses while others are in lighter colored attire. There appears to be some fur or feathered accessories worn by some of the individuals. The setting looks to be indoors, with what might be decorative elements visible on the walls in the background. The photograph has some damage or deterioration visible along the edges, which is common for photos from this era. The image appears to be a negative or inverted version of the original photograph, giving it an unusual appearance where light and dark values are reversed.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-10

The image is a black-and-white photograph of two women standing together, surrounded by a group of men. The woman on the left has dark skin and curly hair styled in an updo. She wears a dark coat with white fur trim and a white blouse with a floral pattern. The woman on the right also has dark skin and curly hair styled in an updo. She wears a dark coat with white fur trim and a white blouse with a floral pattern.

The men surrounding them are dressed in suits and ties, with one man wearing a hat. The background of the image appears to be a formal setting, possibly a ballroom or a hotel lobby. The overall atmosphere of the image suggests a sense of elegance and sophistication, with the two women at the center of attention.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-10

The image appears to be a black-and-white photograph of a group of people in formal attire, possibly from the 1930s or 1940s. The photo is a negative, with the subjects appearing as dark silhouettes against a lighter background.

In the foreground, there are several individuals dressed in suits, dresses, and coats, with some wearing hats and carrying items such as purses or briefcases. The atmosphere suggests a formal event or gathering, possibly a party, wedding, or business meeting.

The background of the image is blurry, but it appears to be an interior setting, possibly a hotel lobby, ballroom, or office building. There are some decorative elements visible, such as chandeliers, mirrors, and ornate moldings.

Overall, the image captures a moment in time from a bygone era, showcasing the fashion and social norms of the past.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-08

The image is a black-and-white photograph of two women, possibly models, dressed in elegant attire, possibly for a fashion show. The women are standing in front of a wall with intricate designs. The woman on the left is holding a book, and the woman on the right is holding a handbag. Behind them are several people, including a man in a suit and a hat, who appears to be a photographer.

Created by amazon.nova-pro-v1:0 on 2025-06-08

The image is a black-and-white photograph of a group of people in formal attire. The photograph is dated 1932, as indicated by the text at the bottom. The photograph shows a group of people standing in a room with a large window in the background. The two women in the center of the image are wearing long black coats with fur trim and holding books. The men on either side of them are wearing suits and ties.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-18

Here's a description of the image:

Overall Impression:

The image is a black and white negative of a group of people, likely taken indoors. The scene seems to be formal, potentially an event or ceremony.

Key Features and Details:

- Central Figures: Two women are prominently featured in the center. They both appear to be wearing dark coats with fur stoles, which suggests a higher social class or a formal occasion. They are also holding books or papers.

- Other Individuals: There are several men in suits and formal attire. Some appear to be holding documents or serving as ushers. There are a few figures visible in the background, adding to the impression of a crowd.

- Setting: The background suggests an interior setting with some decorative architectural details. There might be columns or arches, hinting at a building of some importance.

- Overall Tone: The image has a classic, historic feel, possibly dating back to the early to mid-20th century. The formal attire of the subjects and the style of photography suggest a formal event.

Possible Interpretations:

Given the clothing and setting, this might be a graduation ceremony, a formal reception, or a similar type of event.

Created by gemini-2.0-flash on 2025-05-18

Here is a description of the image:

This black and white image presents a crowd of people, likely from a mid-20th century setting, engaged in an event or gathering. The subjects' attire suggests a formal occasion.

In the foreground, two women command attention. One wears a dark coat with a fur collar, and the other sports a coat with an elaborate fur stole. Both hold clutches or small bags in their hands. Their hair is styled in fashionable waves, indicating a degree of sophistication.

The backdrop is filled with a collection of men in suits or jackets, some with hats. Their expressions are neutral, seemingly focused on whatever event is unfolding. The setting appears to be a large interior space, possibly a hall or a ballroom, adorned with architectural details.

The image has a somewhat grainy quality, possibly due to its age or the reproduction process. There are also noticeable artifacts or marks along the borders of the image, suggesting it might be a scanned photograph or print.

Overall, the image seems to capture a moment in time, portraying a group of people gathered for a formal occasion, likely in the mid-20th century.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-03

The image appears to be a historical black-and-white photograph featuring a group of people, some of whom are in blackface and dressed in elaborate costumes. The setting seems to be an indoor event or gathering, possibly a costume party or a themed event.

Key details include:

Costumes and Makeup:

- Two individuals in the foreground are wearing dark makeup on their faces, which is indicative of blackface.

- One of these individuals is dressed in a costume with a fur stole and a patterned top, while the other wears a white, fur-trimmed jacket with a dark, patterned top.

- Other individuals in the background are dressed in suits and formal attire, some wearing hats and glasses.

Setting:

- The event is taking place indoors, in a room with decorative elements on the walls and ceiling, suggesting a formal or elegant venue.

- There is a large mural or painting on the wall behind the group, depicting a scene with multiple figures.

Interaction:

- The individuals in the foreground appear to be interacting with each other, possibly posing for the photograph.

- One person is extending an arm, possibly gesturing or reaching out to someone else.

Photographic Details:

- The photograph has some markings and notations along the bottom edge, which might be related to the photographer's notes or identification.

The image captures a moment from a past era, reflecting social and cultural practices that are now widely recognized as offensive and problematic, particularly the use of blackface.

Qwen

No captions written