Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 48-56 |
| Gender | Male, 97.8% |
| Calm | 90.9% |
| Sad | 3.5% |
| Surprised | 2.2% |
| Happy | 1.3% |
| Confused | 0.8% |
| Disgusted | 0.7% |
| Angry | 0.4% |
| Fear | 0.3% |

Feature analysis

Amazon

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Person | 99.7% |

Categories

Imagga

Created on 2022-01-15

| Category | Confidence |
| --- | --- |
| streetview architecture | 95.4% |
| paintings art | 4.5% |

Captions

Microsoft

Created by unknown on 2022-01-15

| Caption | Confidence |
| --- | --- |
| a group of people on a court with a racket | 71.3% |
| a group of people standing on a court with a racket | 71.1% |
| a group of people standing on a court | 71% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-14

children playing soccer in the 1950s.

Salesforce

Created by general-english-image-caption-blip on 2025-05-04

a photograph of a black and white photo of a group of people playing baseball

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-10

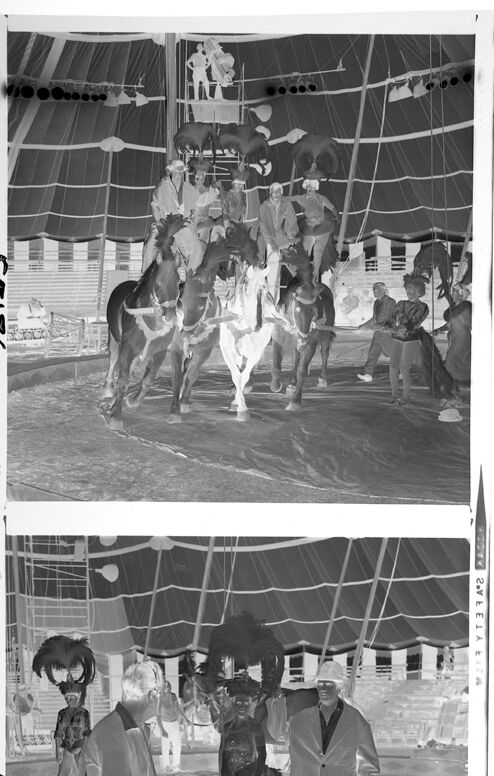

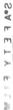

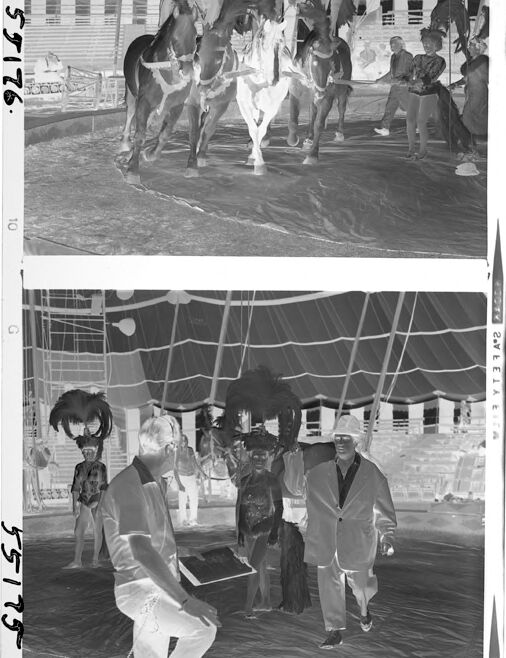

This image appears to be a black-and-white negative, showing two separate scenes from what looks like a circus or performance tent.

Top half: The upper scene depicts a multi-horse act. Several performers, possibly riders or acrobats, are posed together atop a set of horses in the middle of the tent. Above them, additional props or decorations are visible, such as suspended or hanging objects. The scene feels theatrical, with the performers wearing costumes suitable for an act.

Bottom half: The lower scene shows a group of people in the same tent, possibly circus performers or organizers. They are dressed in costumes, with some wearing feathered headpieces, indicating they are part of a show. A man in the foreground holds a book or clipboard, appearing to converse or give instructions. The atmosphere suggests preparation or discussion related to the performance.

Overall, the image showcases the behind-the-scenes or active moments from a circus or performance environment.

Created by gpt-4o-2024-08-06 on 2025-06-10

This image appears to be an inverted photograph negative, which requires a mental inversion of the colors to visualize the true scene. The image is divided into two sections.

In the top section, there is a circus scene under a large tent. Several people are seated or standing on horses, possibly performing as part of a circus act. The horses, which appear dark in the negative, can be assumed to be lighter colored in the actual photograph. The tent is adorned with various decorations and equipment for aerial acts.

In the lower section, there are additional individuals inside the circus tent. Two people are dressed in outfits that suggest performance, possibly entertainers or circus performers with large feather headdresses. Another person stands nearby with a clipboard, possibly someone overseeing the performance or rehearsal. The overall setting is inside a large tent with seats visible in the background.

To fully appreciate the details, one would need to view the positive version of this image, which would show the true colors and details hidden by the negative format.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-15

The image appears to depict a circus or performance event. The top image shows a group of performers on horseback, with people in the background. The bottom image shows a group of people, likely performers or crew, standing on a stage or performance area. The images have a vintage or historical quality, suggesting they may be from an earlier time period.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-15

This appears to be a vintage black and white photograph showing two scenes from what looks like a circus performance. The top image shows several performers on horseback inside what appears to be a circus tent, with riders performing some kind of mounted display or trick riding. There are multiple horses, including what appears to be a white horse among darker ones.

The bottom image shows what appears to be circus performers or trainers on the ground in the same tent setting. The lighting and quality of the photographs suggest these were taken sometime in the mid-20th century. The circus tent's rigging and support lines can be seen in both images, and there appears to be seating or bleachers visible in the background.

The images appear to be part of a contact sheet or series of photographs documenting a circus performance or rehearsal. The number "92155" appears to be marked on the side of the photographs, suggesting these may be from an archive or collection.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-10

The image is a black-and-white photograph of a circus scene, divided into two frames. The top frame features a horse and rider performing a trick, while the bottom frame shows a man in a suit holding a whip, surrounded by other performers.

Top Frame:

- A horse and rider are performing a trick, with the horse jumping over a barrier.

- The horse is dark-colored, and the rider is wearing a light-colored shirt and pants.

- There are several people standing around the ring, watching the performance.

- The background is a circus tent with a dark-colored roof and white stripes.

Bottom Frame:

- A man in a suit is holding a whip, standing in front of a group of performers.

- The man is wearing a dark-colored suit and hat, and is holding the whip in his right hand.

- The performers behind him are dressed in various costumes, including a woman in a dress and a man in a top hat.

- The background is the same circus tent as in the top frame.

Overall:

- The image appears to be a vintage photograph of a circus performance, possibly from the early 20th century.

- The black-and-white tone gives the image a classic and nostalgic feel.

- The image captures a moment in time, showcasing the skill and entertainment of the circus performers.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-10

This image is a black-and-white photograph of a circus performance, featuring a man on horseback and another man standing in front of him. The top half of the image shows the man on horseback, surrounded by other people and horses, while the bottom half focuses on the man standing in front of him.

The man on horseback is dressed in a light-colored shirt and pants, with a dark-colored vest or jacket. He is holding the reins of the horse and appears to be in control of the animal. The horse is also wearing a harness and saddle, indicating that it is being ridden for a specific purpose.

In the background of the image, there are several other people and horses visible. Some of them appear to be watching the man on horseback, while others seem to be preparing for their own performances. The atmosphere of the image suggests that it was taken during a rehearsal or practice session, rather than an actual performance.

Overall, the image provides a glimpse into the world of circus performers and the skills and techniques they use to entertain audiences. It also highlights the importance of teamwork and coordination between humans and animals in creating a successful performance.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-08

The image shows two black-and-white photos of a circus scene. In the top photo, there are people riding horses in a circle, with a few people sitting on the floor. The horses are standing on a platform, and the people are wearing hats and boots. In the bottom photo, there are people standing in a circle, with a man in the middle holding a book and a stick. The people are wearing hats, and the man is wearing a suit and leather shoes.

Created by amazon.nova-pro-v1:0 on 2025-06-08

The image consists of two black-and-white photographs. The top photograph shows a group of people riding horses in a circus ring. The horses are arranged in a circle, and the people are sitting on them. The ring is surrounded by a white cloth, and a few people are standing on the right side. There are a few lights mounted on the top of the ring. The bottom photograph shows a man sitting on the ground, holding a rope in his hand, and looking at the other man standing in front of him. Behind them, a person is standing and holding a stick in his hand. A few people are standing behind them, and a few lights are mounted on the top of the tent.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-04

The image is a negative print of a circus scene. It's divided into two sections, likely two frames from a single roll of film.

Top Section:

- Scene: Appears to be the performance of a horse act.

- Animals: Several horses are present, some of which are being ridden.

- Performers: There are several people, some dressed in the costumes of performers.

- Background: The setting is a large circus tent. There is a platform above the horses.

Bottom Section:

- Scene: The bottom section appears to depict a group of people, likely circus personnel, on the circus ring floor.

- People: Several people are visible, including individuals in suits and some wearing more elaborate costumes.

- Objects: One person is holding a clipboard and a rope is in the foreground.

- Setting: The scene is also set inside the circus tent.

Overall Impression:

The image captures a snapshot of circus life, showcasing both the performances and the behind-the-scenes activities. The negative print style gives a somewhat surreal and aged quality to the image.

Created by gemini-2.0-flash on 2025-05-04

The image appears to be a film negative with two separate frames showing scenes from what looks like a circus.

The top frame features a large group of people and horses inside what seems to be a circus tent. There are multiple individuals riding horses, and atop a raised platform, people are also riding and standing with horses. In the background, there's a glimpse of what appears to be a small building outside the tent.

The bottom frame showcases a different scene with a man sitting on a platform, potentially judging or overseeing an event. There are other performers or assistants standing around him, some with elaborate headdresses. The circus tent background is still visible.

The film negative has markings along the sides, including numbers like "55175" and "55176," which are probably frame numbers or some kind of identification for the roll of film. The word "KODAK" is visible in reverse as well.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-03

The image consists of two black-and-white photographs depicting scenes from a circus performance.

Top Photograph:

- The scene is set inside a large circus tent with a high, striped canopy.

- The main focus is on a group of performers executing a complex acrobatic act involving horses.

- Multiple performers are standing on the backs of several horses, forming a human pyramid. The performers are dressed in matching outfits, likely costumes for the act.

- There are additional performers and possibly circus staff visible in the background, some standing and some seated.

- The audience is not visible, but the presence of bleachers suggests the tent is set up for spectators.

Bottom Photograph:

- This scene appears to take place in the same circus tent as the top photograph.

- The central figure is a man dressed in a suit and hat, walking towards the camera.

- Accompanying him are several other individuals, including a man in a suit and hat, a woman in a dress, and another person in a darker outfit.

- A performer in a light-colored costume is in the foreground, possibly a clown or an acrobat, holding an object that looks like a box or a small platform.

- The background shows more performers and circus staff, with some equipment and props visible.

Overall, the photographs capture the lively and dynamic atmosphere of a circus performance, showcasing both the acrobatic skills of the performers and the behind-the-scenes activity.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-24

The image appears to be a black-and-white photograph depicting a circus or theatrical performance. The top part of the image shows a group of people riding horses in a circular formation, which suggests a riding act, possibly part of a circus. The riders are dressed in costumes that match the theme of the performance, and the horses are adorned with decorative bridles or headgear.

The bottom part of the image shows two men interacting with each other, with one seated and the other standing. They are dressed in formal attire, and there are additional figures in the background, possibly performers or part of the show. The setting seems to be the interior of a tent, likely a circus tent, with a canvas roof and fabric walls. The overall atmosphere suggests a performance or presentation, possibly during intermission or a break in the show.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-24

The image is a black and white photograph showing two separate moments within a circus setting. The top half of the image captures a thrilling equestrian performance where multiple riders are balancing on the backs of several horses. The riders are dressed in performance attire, and the horses are in motion, adding to the dynamic nature of the scene. The bottom half of the image shows a group of people, including performers and possibly circus staff or officials, standing on the circus floor. One person appears to be holding a clipboard, suggesting they might be a manager or coordinator. The background of both sections features the interior of a circus tent with striped patterns and various circus equipment and decorations. The overall atmosphere conveys the excitement and organized chaos typical of a circus performance.