Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 21-29 |
| Gender | Male, 96.1% |
| Calm | 73.7% |
| Sad | 20.2% |
| Happy | 3.6% |
| Confused | 0.7% |
| Angry | 0.7% |
| Surprised | 0.5% |
| Disgusted | 0.3% |
| Fear | 0.2% |

Feature analysis

Amazon

AWS Rekognition

| Label | Confidence |
|---|---|
| Person | 99.1% |

Categories

Imagga

Created on 2022-01-15

| Category | Confidence |
|---|---|
| paintings art | 98.8% |

Captions

Microsoft

Created by unknown on 2022-01-15

| Caption | Confidence |
|---|---|
| an old photo of a person | 82.9% |
| a group of people posing for a photo | 63.8% |
| old photo of a person | 63.7% |

Salesforce

Created by general-english-image-caption-blip on 2025-05-03

a photograph of a couple of women riding on a motorcycle

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-07

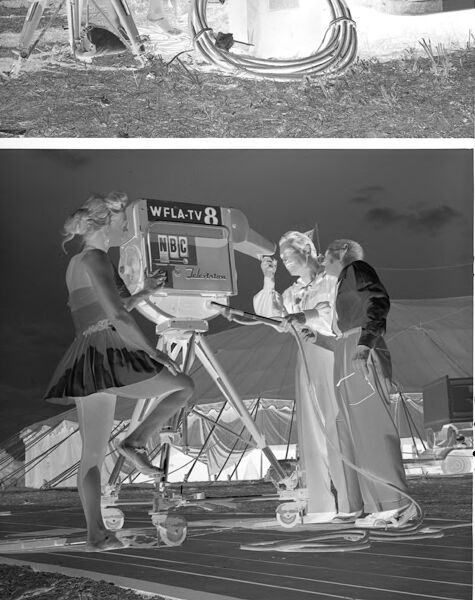

This image contains two negatives of black-and-white photographs stacked vertically. They appear to depict scenes related to television broadcasting, possibly at an outdoor event or circus, due to visible tents in the background.

Top Frame:

The scene shows three people near a utility or service area, possibly backstage. On the left, a woman wearing a costume appears to be holding a large prop or piece of equipment, perhaps a circus performer. Two men dressed casually sit nearby, one wearing sunglasses.

Bottom Frame:

This scene includes three individuals gathered around a large professional television camera labeled "WFLA-TV 8" and "NBC." The camera is mounted on a rolling platform labeled "Television." Two people, one dressed semi-formally and another likely a performer in costume, seem to be interacting with the camera as a technician or operator looks on.

Both images have negative film characteristics, reversed colors, and visible Kodak branding along the edges, indicating they were taken with Kodak Safety Film.

Created by gpt-4o-2024-08-06 on 2025-06-07

The image is a negative film with two distinct photographs, one on top of the other. Both images are visually inverted in terms of color and contrast due to being photographic negatives.

In the top photograph, three people are gathered around some equipment outside a building. The scene appears to be outdoors on a patch of grass. On the left, a woman in what looks like a sequined outfit, possibly suggesting she's a performer, is sitting on the edge of a platform or step. She appears to be talking to two men; one standing and the other sitting. Equipment like coiled cables and cases are visible on the ground.

In the bottom photograph, three people are seen interacting with a large television camera marked with "WFLA-TV 8" and "NBC Telecolor". The camera is mounted on a rolling platform and is pointed to the right of the photo. The woman on the left, possibly the same woman from the first image, is dressed in a costume resembling an entertainer or performer, with a short skirt and fitted top. The other two individuals are likely crew members engaged in setting up or adjusting the camera. They are situated on what seems like a wooden or paved surface, with large tent-like structures in the background, hinting that it might be an event or a circus setting.

Overall, the images convey a mid-20th-century atmosphere, capturing moments of preparation or behind-the-scenes action, possibly for a television production or live event.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-12

The image appears to show two different scenes. The top image depicts a couple, a man and a woman, sitting together outdoors surrounded by various equipment and materials, suggesting they may be working on some kind of project or activity. The bottom image shows a group of people, likely a film or television crew, gathered around what appears to be a television or camera setup, suggesting they are engaged in some kind of production or filming activity.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-12

This appears to be a vintage black and white photograph split into two parts. The top image shows people gathered near what appears to be a building entrance or porch area, with a bicycle visible in the scene. The bottom image shows what appears to be people dancing or engaged in some kind of social activity, with what looks like a television or electronic device visible in the background marked with "WFLA-TV". The clothing and styling suggests these photos are likely from the 1950s or early 1960s. Both images have a casual, social gathering feel to them, capturing moments of everyday life from that era.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-23

This image is a black-and-white film negative, comprising two photographs of individuals engaged in various activities. The top photograph features three people standing outside, with one person holding a long, coiled cable. In the background, a large tent or awning is visible, along with a doorway and a sign that appears to be an arrow pointing upwards.

The bottom photograph depicts four people gathered around a television camera, which is positioned on a tripod. The camera displays the text "WFLA-TV 8" and "ABC" on its front. The individuals are dressed in formal attire, with one person wearing a dark jacket and another sporting a light-colored shirt. The background of this photograph shows a large tent or awning, similar to the one in the top image.

The overall atmosphere of the image suggests that it may be related to a news broadcast or a television production, given the presence of the television camera and the formal attire of the individuals. However, without more context, it is difficult to determine the specific purpose or occasion depicted in the image.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-23

The image is a black-and-white photograph of two scenes, both depicting women working with television equipment.

In the top scene, a woman with blonde hair stands in front of a large metal box, wearing a sleeveless dress and holding a coiled cable. She is positioned in front of a man, who is wearing a dark shirt and light pants, and appears to be operating the box. The background of this scene is dark, with a grassy area and a few objects visible.

In the bottom scene, a woman with blonde hair stands in front of a television camera, wearing a sleeveless dress and holding a microphone. She is positioned in front of another woman, who is wearing a light shirt and dark skirt, and appears to be operating the camera. The background of this scene is also dark, with a grassy area and a few objects visible.

Overall, the image suggests that the women are working together to set up and operate television equipment, possibly for a live broadcast or filming.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-05-23

The image is a black-and-white photo collage consisting of two distinct scenes. The top scene depicts a woman and two men. The woman is seated on a bicycle, holding a hose, and looking towards the camera. The man on the left is standing behind the bicycle, and the man on the right is seated on a stool, also looking towards the camera. They are in front of a structure that appears to be a trailer or a small building.

The bottom scene shows three people in what seems to be a studio setting. A woman in the foreground is operating a camera mounted on a tripod. Behind her, two other women are standing, one of whom is holding a microphone. They appear to be engaged in a filming or recording activity. The background includes what looks like a tent or a temporary structure, suggesting an outdoor event or a makeshift studio.

The collage has a vintage feel, possibly from the mid-20th century, given the style of clothing and equipment. The images are cropped and placed side by side, with a date stamp "1949" in the bottom right corner of the collage, indicating the year the photos were taken. The overall tone of the images is casual and relaxed, with a sense of camaraderie among the individuals depicted.

Created by amazon.nova-lite-v1:0 on 2025-05-23

The image is a black-and-white photograph featuring two different scenes. In the top scene, a man and a woman are sitting on a chair in front of a door. The man is holding a bicycle, and the woman is looking at him. The woman is wearing a sleeveless top, and the man is wearing a polo shirt. The bicycle is parked in front of them, and there is a tent in the background. In the bottom scene, a woman is standing on a platform with a tripod, and another woman is standing on a wheeled platform. The woman on the platform is holding a camera and seems to be filming the other woman. The woman on the wheeled platform is wearing a dress and seems to be posing for the camera.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-04-28

Here's a description of the image:

This is a black and white negative of two photos taken at what appears to be a circus or event.

Top Photo:

- It shows three men and a woman outside a trailer/truck.

- The woman and a man are talking.

- A third man is sitting in the doorway of the truck.

- A large television camera is positioned on a tripod in the foreground.

- A tent is visible in the background.

Bottom Photo:

- It appears to be behind-the-scenes of filming, with a woman in a short skirt and others gathered around a large television camera.

- The camera has the WFLA-TV 8 NBC logo on it.

- The woman appears to be on stage and another woman is positioned in front of the camera.

- The background reveals a striped pathway, hinting at a performance area or arena.

Overall:

The two images likely capture different aspects of a broadcast or filming event at a circus or show. The top photo gives a glimpse of the setup with the camera. The bottom photo then appears to be a shot during a performance.

Created by gemini-2.0-flash on 2025-04-28

Here's a description of the image based on the provided negative:

The image is split into two sections, depicting scenes related to a television broadcast. Both sections appear to be taken in an outdoor setting with a circus tent visible in the background.

Top Section: Features three people in what seems to be a break or casual moment. Two are seated near the open doorway of a truck or trailer, possibly a mobile broadcasting unit. A woman in what appears to be a bathing suit or leotard sits to the left, and a man in casual clothing is seated next to her. Another man, wearing a plaid shirt, sits further inside the trailer. A large coiled cable and camera equipment are visible in the foreground, suggesting the scene is at a broadcasting location.

Bottom Section: Shows a television camera prominently displaying the call letters "WFLA-TV" and the NBC logo, indicating a broadcast from the Tampa, Florida area. A woman, possibly a performer or dancer, stands near the camera. Two other people, likely crew members, are working with the equipment. They stand on what looks like a track or platform.

The negative shows the typical dark-light inversion, with light areas appearing dark and vice versa. The image seems to capture a moment during a television production, likely a remote broadcast from a circus or outdoor event.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-23

The image is a black and white composite of two photographs, both depicting scenes from what appears to be a television production or broadcast setup from the mid-20th century.

Top Photograph:

- Three individuals are visible. One person, possibly a technician or cameraman, is seated and appears to be handling or adjusting equipment. The other two individuals are standing and seem to be engaged in conversation or coordination.

- The setting includes various pieces of equipment such as cables, a tripod, and other technical gear, suggesting preparation for a broadcast or recording.

- The environment looks like an outdoor or semi-outdoor area, possibly a tent or a temporary structure, with some tarpaulin or fabric visible in the background.

Bottom Photograph:

- This scene shows three individuals, with one person seated in front of a large, vintage television camera labeled "WFLA-TV 8." The camera is mounted on a dolly or wheeled platform, indicating mobility for filming.

- The person seated at the camera is likely the cameraman, adjusting the equipment. The other two individuals, possibly a director and an assistant, are standing nearby, engaged in discussion or giving instructions.

- The background includes a tent-like structure, suggesting an outdoor broadcast setup, similar to the top photograph.

Overall, the images capture the behind-the-scenes activities of a television broadcast crew, highlighting the equipment and teamwork involved in early television production.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-04

The image is a black-and-white photograph divided into two parts, likely taken at an outdoor event or fair.

In the top half of the image:

- There are several people gathered near what appears to be a tent or temporary structure.

- Some individuals are seated or standing, engaged in conversation or activity.

- There are various objects around them, including a large coiled cable and a cylindrical object that might be a microphone or speaker.

- The setting appears to be outdoors, with grass and an open sky visible.

In the bottom half of the image:

- Two women are standing next to a vintage television camera with the NBC and WFLA-TV 8 logos on it.

- One woman is operating the camera, and the other is observing or assisting.

- The scene is also outdoors, with a tent or canopy visible in the background.

- The women are dressed in outfits that suggest a retro or vintage style, potentially from the mid-20th century.

The photographs seem to capture moments related to broadcasting or filming, possibly at an event or festival. The presence of the television camera and the NBC logo indicates that this could be a historical snapshot of early television production or broadcasting.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-04

This image is a two-part black-and-white photograph depicting a scene from a live TV broadcast, likely from the mid-20th century.

Top Section:

The top part shows three individuals inside what appears to be a makeshift studio or outdoor production setup. One person, possibly a performer wearing a costume with a bright design, is standing on the left. The other two individuals are behind a desk, holding microphones and possibly reading from a script. There is equipment around them, including coiled cables, suggesting this is a technical setup for broadcasting.

Bottom Section:

The bottom part shows a woman operating a professional television camera labeled "WFLA-TV 8 NBC." She is wearing a short dress and appears focused on the camera. In the background, another individual in a costume is interacting with a man, possibly a host or another performer. There are also more cables and technical equipment visible, indicating the setup for a live broadcast.

The overall setting suggests a live outdoor TV show or performance, with a focus on the behind-the-scenes technical aspects and the performers.