Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Estimate |
| --- | --- |
| Age | 29-39 |
| Gender | Male, 99.7% |
| Calm | 50.5% |
| Surprised | 45.2% |
| Angry | 1.2% |
| Happy | 1% |
| Fear | 0.7% |
| Sad | 0.6% |
| Disgusted | 0.6% |
| Confused | 0.1% |

Feature analysis

Amazon

| Label | Confidence |
| --- | --- |
| Person | 99.5% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| interior objects | 82.7% |
| streetview architecture | 8.4% |
| paintings art | 6.6% |
| people portraits | 1.4% |

Captions

Microsoft

created by unknown on 2022-01-15

| Caption | Confidence |
| --- | --- |
| a group of people posing for a photo | 79.3% |
| a group of people posing for a photo in front of a window | 74.4% |
| a group of people standing next to a window | 74.3% |

Clarifai

created by general-english-image-caption-blip on 2025-05-23

| Caption | Confidence |
| --- | --- |
| a photograph of a man and woman sitting on a chair | -100% |

Google Gemini

created by gemini-2.0-flash-lite on 2025-05-18

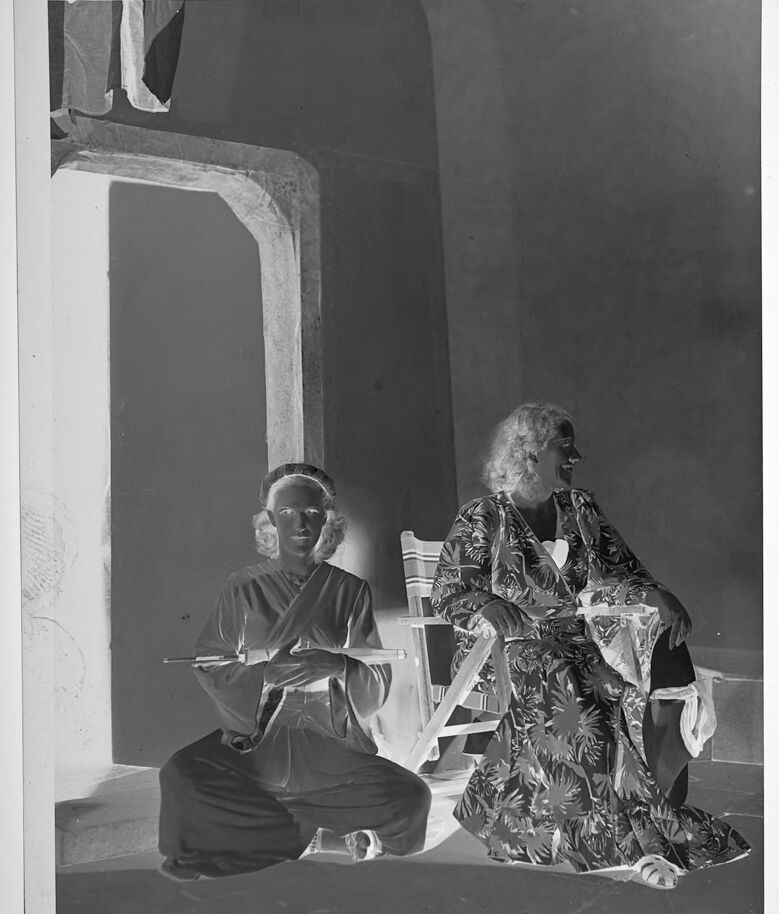

This is a black and white negative photograph showing two women in a dimly lit interior. The woman on the left is sitting on the floor, legs crossed, with what appears to be a handle of some sort in her hands. She is wearing a robe or kimono, with a band around her head. The woman on the right is seated in a chair and she is wearing a patterned dress. The lighting in the room appears to come from a large opening on the left, which suggests a doorway or arched opening, perhaps a window. The negative image gives a slightly eerie feel due to the inversion of colors, but the composition creates a sense of intimacy and quietude.

created by gemini-2.0-flash on 2025-05-18

Here is a description of the image:

The image is a black and white negative, possibly a photographic print or scan of a negative. It shows two women in what appears to be an indoor setting. On the left, a woman is seated on the floor, cross-legged, wearing what looks like a kimono-style garment with a headband. She is holding an object that could be a rolled-up scroll or a stick.

To the right of her, another woman is seated in a chair. She is wearing a long, patterned dress or robe and sandals. Her hair is styled in curls or waves. The chair appears to be a simple wooden folding chair.

In the background, there is an arched doorway or entrance, partially visible, with a bright light shining through it. The walls of the room are plain.

The image has the inverted tones characteristic of a negative, with light areas appearing dark and vice versa.