Machine Generated Data

Tags

Color Analysis

Face analysis


AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 22-34 |
| Gender | Female, 98.8% |
| Surprised | 0.5% |
| Angry | 0.4% |
| Happy | 95.2% |
| Fear | 0.1% |
| Calm | 2.2% |
| Disgusted | 1.2% |
| Sad | 0.1% |
| Confused | 0.3% |

Feature analysis


AWS Rekognition

| Feature | Confidence |
|---|---|
| Person | 98.2% |

Categories

Imagga

Created on 2019-11-16

| Category | Confidence |
|---|---|
| people portraits | 96.7% |
| paintings art | 3% |

Captions

Microsoft

Created by unknown on 2019-11-16

| Caption | Confidence |
|---|---|
| Katherine Dunham standing in front of a mirror posing for the camera | 90.1% |
| Katherine Dunham and woman posing for a photo | 89.9% |
| Katherine Dunham et al. posing for a photo | 89.8% |

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip on 2025-05-04

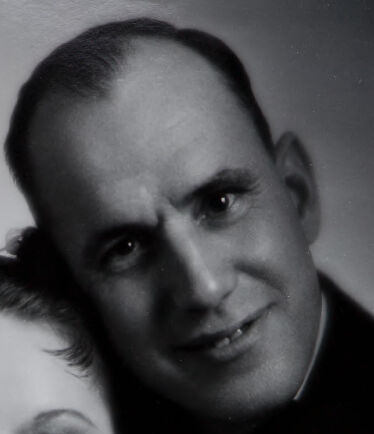

a photograph of a man and woman posing for a photo

Created by general-english-image-caption-blip-2 on 2025-07-10

a black and white photo of a man and woman

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-10

This image is a black-and-white photograph showing two individuals dressed formally. The person on the left is wearing a gown with intricate lace detailing and floral accents in their hair. The person on the right is dressed in a tuxedo, featuring a crisp pocket square. The style and lighting suggest a vintage or classical portrait.

Created by gpt-4o-2024-08-06 on 2025-06-10

The image is a black and white portrait of a couple. The woman is wearing a floral headpiece and a dress with a lace collar. The man is wearing a tuxedo with a bow tie and a pocket square. The image has a slightly soft focus, giving it a classic and elegant appearance. The border of the photograph is visible, with some markings along the bottom edge.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-18

The image shows a black and white portrait photograph of a man and a woman. The woman has a floral headpiece and is smiling at the camera. The man is wearing a tuxedo and has a serious expression on his face. They appear to be a couple, possibly from an earlier era based on their attire and the style of the photograph.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-18

This is a vintage black and white formal portrait photograph, likely from around the 1930s or 1940s. It shows a couple in elegant evening attire. The woman wears a dark dress and has flowers in her styled hair, with classic makeup including defined lips. The man is wearing a tuxedo with a white pocket square. The photograph has a soft, glamorous quality typical of studio portraits from that era, with careful lighting that creates a gentle gradient in the background. The composition is intimate, with the couple posed close together, and the image has the characteristic look of professional photography from Hollywood's golden age.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-07

The image is a black-and-white photograph of a man and woman posing together. The woman is positioned in the foreground, with the man standing behind her, resting his head on her shoulder.

- The woman:
  - She has dark hair styled in an updo, adorned with flowers.
  - Her attire consists of a dark dress with lace details on the shoulder.
  - Her face is blurred, making it difficult to discern her features.
- The man:
  - He has short dark hair.
  - He wears a dark suit jacket, a white shirt, and a white pocket square.
  - His face is also blurred, obscuring his features.

The background of the image is a plain white wall, providing a clean and simple backdrop for the subjects. Overall, the image appears to be a formal portrait, likely taken in the mid-20th century.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-07

The image is a black-and-white photograph of a man and woman, likely from the early 20th century. The woman is positioned in the foreground, while the man stands behind her.

Woman:

- She has dark hair styled with flowers.

- Her attire consists of a lace top or dress.

- Her face is blurred, but she appears to be smiling.

Man:

- He wears a suit jacket, white shirt, and bow tie.

- A pocket square is visible in his left breast pocket.

- His face is also blurred, but he seems to be smiling.

Background:

- The background is a plain white wall.

- The overall atmosphere suggests a formal portrait or publicity photo, possibly for a theatrical performance or social event.

Image Details:

- The image has a sepia tone, indicating it was taken using an older photographic process.

- The edges of the photo are worn and torn, suggesting it has been handled extensively over time.

- The bottom edge features a series of numbers and letters, which may be a code or identifier for the image.

Overall, the image captures a moment in time, showcasing the fashion and style of the era. Despite the blurring of their faces, the subjects' smiles convey a sense of joy and happiness.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-07

The image is a black-and-white photograph of a couple, possibly on their wedding day. The woman is wearing a lace dress and a flower crown on her head, while the man is dressed in a suit and bow tie. They are both smiling and posing for the camera. The photo has a vintage look, with a slightly blurred effect and a white border at the top and bottom.

Created by amazon.nova-pro-v1:0 on 2025-06-07

The image is a black-and-white photograph of a man and a woman standing close to each other. The man is wearing a dark suit with a white shirt and a black bow tie. He has a pocket square in his jacket pocket. The woman is wearing a black dress with a lace design on the shoulders. She has a flower crown on her head. They are both smiling and posing for the photograph.

Google Gemini

Created by gemini-2.0-flash on 2025-05-04

The image is a black and white portrait of a well-dressed man and woman posing for the camera. The woman, positioned slightly to the left, wears a dark-colored, lace-trimmed dress. Her hair, styled with soft curls, is adorned with flowers. She looks directly at the camera, her expression one of subtle contentment.

To her right, the man is dressed in a classic tuxedo, complete with a bow tie and a white pocket square. He is balding, with a few strands of hair neatly combed to the side. He also looks at the camera, a faint smile playing on his lips.

The man and woman are posed close together, suggesting a sense of intimacy or partnership. The background is a soft, blurred gradient, ensuring that the focus remains on the two figures. The overall aesthetic is reminiscent of vintage Hollywood glamour, with its emphasis on elegance and sophistication.

Created by gemini-2.0-flash-lite on 2025-05-04

Here's a description of the image:

Overall Impression:

The image is a formal, black and white portrait of a man and a woman, likely taken in the mid-20th century. They are posed close together, suggesting a romantic or familial relationship. The quality of the image indicates it's a studio portrait.

Details:

The Woman:

- She is positioned slightly forward in the frame.

- She is wearing a dress or top with a decorative, possibly lace, neckline.

- She has flowers in her hair.

- She has an elegant look with makeup, likely in the style of the era.

The Man:

- He is wearing a formal black suit with a bow tie and a white pocket square.

- He has short, slicked-back hair and a slight smile.

- He is leaning in close to the woman.

Composition:

- They are positioned so that the man's head is close to the woman's.

- The background is simple and appears to be a plain backdrop, which is typical for studio portraits.

Technical Aspects:

- The lighting is soft and even, creating a flattering effect.

- The image is well-composed, drawing the viewer's attention to the subjects.

- The negative borders are visible, suggesting this is a print from a photographic negative.

Overall, the image exudes a sense of elegance, formality, and possibly love or affection. The clothing and style strongly indicate a time period in the mid-20th century.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-07

The image is a black-and-white photograph featuring two people, a man and a woman, posing together. The man is standing behind the woman with his arms around her. He is wearing a formal suit with a white handkerchief in the breast pocket. The woman is wearing a dress with a floral design on the shoulder and has a floral headpiece in her hair. Both individuals are smiling and looking directly at the camera. The photograph has a classic, vintage feel, suggesting it may be from an earlier era, possibly the mid-20th century. The background is plain, which keeps the focus on the subjects. The image appears to be a posed portrait, likely taken in a studio setting.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-20

This is a black-and-white portrait of a man and a woman. The woman is positioned slightly in front of the man, with her head turned slightly towards him. She has her hair styled in an updo, adorned with a floral accessory. The man is dressed in formal attire, including a suit and tie, with a white pocket square visible in his suit jacket. The background is plain and light-colored, providing a contrast that highlights the subjects. The photograph appears to be vintage, with a framed border and some numbers or markings visible at the bottom.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-20

This is a black-and-white portrait of a man and a woman, likely taken in a studio setting, given the plain backdrop and lighting. The man is dressed in a formal suit with a white shirt, a bow tie, and a handkerchief in his pocket, indicating a formal or special occasion. The woman wears a dark, possibly lace-trimmed dress and has her hair styled with flowers, adding to the elegance of the image. Both individuals are posing closely together and appear to be smiling softly. The photograph has a vintage quality, possibly from the early to mid-20th century, and the bottom edge of the image shows some details that might be related to the printing or processing of the photograph.