Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 13-23 |
| Gender | Male, 54.9% |
| Surprised | 45.2% |
| Fear | 45.8% |
| Happy | 45.1% |
| Sad | 45.2% |
| Calm | 53.5% |
| Disgusted | 45.1% |
| Angry | 45.1% |
| Confused | 45% |

Feature analysis

Amazon

AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Person | 99.1% |

Categories

Imagga

created on 2019-11-16

| Category | Confidence |
| --- | --- |
| paintings art | 71.7% |
| food drinks | 21.8% |
| interior objects | 4.4% |

Captions

Microsoft

created by unknown on 2019-11-16

| Caption | Confidence |
| --- | --- |
| a person standing in front of a television | 26.6% |
| a person standing in front of a television | 26.5% |

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip on 2025-05-27

a photograph of a black and white photo of a woman in a car

Created by general-english-image-caption-blip-2 on 2025-07-10

a black and white photo of a woman sitting in a plane

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-16

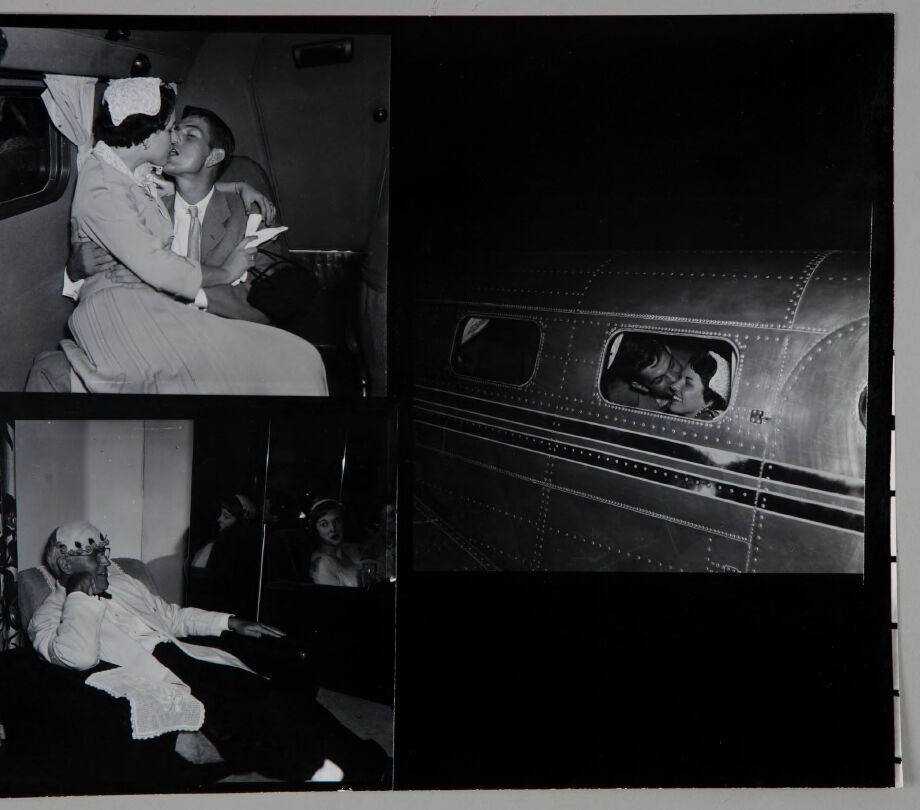

The image is a collage of three black-and-white scenes.

In the top left, a woman is dressed in vintage attire with a bonnet and is situated inside a cabin-like environment, presumably part of a vintage transportation mode, such as an airplane or train. She is interacting closely with someone seated next to her.

The top right shows a metallic surface of the exterior of an aircraft, with rivets visible and a window revealing a passenger inside.

The bottom left depicts a seated individual dressed in ornate clothes, wearing what appears to be a headdress or decorative gear, leaning back in a chair in a space with patterned curtains visible in the background.

Each scene provides a glimpse into various interactions or moments, possibly from a vintage era.

Created by gpt-4o-2024-08-06 on 2025-06-16

The image is a black and white photograph collage consisting of three distinct scenes.

In the top-left section, a man and a woman are seated closely together inside a vehicle. The woman is wearing a dress and a cap, and appears to be holding a piece of paper.

The top-right section shows a man looking out from a small rectangular window of an airplane. The exterior of the airplane is visible, with rivets and metal panels clearly shown.

In the bottom-left section, an elderly man sits in an armchair, wearing a white robe and a crown. He appears to be resting his head on his hand with a contemplative posture. Floral curtains can be seen in the background.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-18

The image appears to be a black and white photograph depicting a couple in an intimate embrace inside what looks like a train car or similar enclosed space. The image is divided into two parts, with the couple occupying the left side and the interior of the train car visible on the right side. The couple seems to be engaged in a romantic moment, with one person leaning in close to the other. The overall atmosphere of the image suggests a sense of intimacy and privacy within the confined space of the train car.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-18

This is a black and white photograph that appears to be from the mid-20th century. It shows what appears to be an emotional farewell scene at a train or bus station. A couple is embracing in a dramatic kiss while sitting on what looks like the side of a transport vehicle, with its distinctive riveted metal panels visible. Below them, another person appears to be leaning out, creating a dynamic composition in the frame. The image captures the intense emotion and drama of wartime goodbyes that were common during that era, particularly during World War II when service members would depart for duty. The photograph has a cinematic quality to it, with strong contrast between light and shadow.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-07

The image is a collage of three black-and-white photographs, each depicting a scene from a train car. The left side of the image features two smaller photos, while the right side contains a larger photo.

In the top-left photo, a man and woman are seated in a train car, with the woman wearing a dress and hat and the man dressed in a suit. They appear to be sharing a romantic moment, as the woman's head is resting on the man's shoulder.

The bottom-left photo shows an older man sitting in a chair, wearing a white shirt and dark pants. He is looking to his right, and his expression is neutral.

The right photo is a larger image of a train car, with a window visible on the side. Through the window, two people can be seen inside the train car, but their faces are not clearly visible.

Overall, the image appears to be a collection of photographs taken on a train, capturing moments of everyday life and human interaction.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-07

The image is a collage of three black-and-white photographs, each depicting a different scene. The top-left photo shows a man and woman sitting together in a train car, with the woman wearing a dress and hat, and the man dressed in a suit. The bottom-left photo features an older man reclining on a couch or chair, smoking a cigar. The right-hand photo appears to be taken from outside a train car at night, showing two people looking out the window.

The overall atmosphere of the images suggests that they were taken during the early 20th century, possibly in the 1920s or 1930s. The clothing and hairstyles of the individuals in the photos are consistent with this time period. The images may have been taken for a magazine or newspaper article, or possibly as part of a personal photo album.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-06

The image is a collage of four black-and-white photographs, each capturing different scenes. In the top left corner, a couple is seated inside what appears to be an airplane. The woman is wearing a nurse's uniform and a white bonnet, while the man is dressed in a suit and tie. They are embracing and sharing a kiss. The top right photo shows a close-up of a silver airplane's exterior, with rivets visible on its surface. The bottom left photo depicts an elderly man sitting in a chair, wearing a white long-sleeve shirt, a bow tie, and glasses. He is gazing at someone or something in front of him. The bottom right photo features a group of people, including a woman in a white dress, who are standing and looking out of a window.

Created by amazon.nova-pro-v1:0 on 2025-06-06

The image is a collage of four black-and-white photographs, each depicting different scenes. The top left photograph shows a man and a woman dressed in formal attire, kissing each other. The man is wearing a suit, and the woman is wearing a dress with a veil. The top right photograph shows a man and a woman kissing each other through a window of a train. The man is wearing a suit, and the woman is wearing a dress. The bottom left photograph shows a man wearing a suit and a hat, sitting on a chair and looking at something. The bottom right photograph shows a train with a window and a door.

Google Gemini

Created by gemini-2.0-flash on 2025-05-11

The image consists of a black and white photograph divided into four sections, arranged in a 2x2 grid.

In the top left section, a man and a woman are embracing and kissing. The woman is wearing a dress, a coat, and a hat, and the man is wearing a suit and tie. They appear to be inside a vehicle, possibly a car, with the window and frame visible in the background.

In the top right section, there's a similar scene where a couple is kissing inside what appears to be the window of an airplane. The exterior of the airplane is visible, with its riveted metal surface standing out against a dark background.

In the bottom left section, an older man, wearing a robe and a cap, is seated in a chair. There's a mirror behind him, reflecting the image of two women. One is partially visible in the mirror and the other is sitting and looking at the mirror.

The bottom right section is mostly black.

Created by gemini-2.0-flash-lite on 2025-05-11

The image is a composite of four separate black and white photographs.

Top Left: A couple is embracing and kissing inside what appears to be a car or bus. The woman is wearing a hat, dress, and coat, while the man is in a suit. They are seated, and the interior suggests travel.

Top Right: A couple is kissing inside a window of a metallic, streamlined structure, likely an airplane or train. The riveted surface gives a clue of the era.

Bottom Left: An older man is seated in a chair. He is wearing glasses and what seems to be a cap and robe. There is a window and a mirror, reflecting the inside of a room and the reflection of two women.

Bottom Right: The image features a dark background that appears to be on the edge of the other photos.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-07

The image is a black-and-white collage of three photographs, each capturing a different scene.

Top Left Photograph:

- This photograph shows two individuals in what appears to be the interior of an airplane. The person on the left is leaning over the person on the right, who is seated and appears to be reading a document or newspaper. The scene suggests a moment of interaction or conversation between the two.

Bottom Left Photograph:

- This photograph depicts a person lying on a bed or couch, possibly in a state of relaxation or rest. The individual is wearing a white outfit and is holding a cigarette. In the background, there is another person, a woman, who is standing and looking towards the camera. The setting appears to be indoors, possibly in a bedroom or living room.

Right Photograph:

- This photograph shows the exterior of an airplane, with two individuals visible through one of the windows. The airplane's fuselage is prominently featured, with rivets and windows clearly visible. The two people inside the airplane are looking out of the window, suggesting they might be passengers or crew members.

Overall, the collage seems to capture moments related to travel and relaxation, with a focus on airplane settings and personal interactions.

Qwen

No captions written