Machine Generated Data

Face analysis

AWS Rekognition

| Age | 25-39 |
| Gender | Female, 89.5% |
| Confused | 0.3% |
| Fear | 0.6% |
| Sad | 4.8% |
| Disgusted | 0.5% |
| Happy | 0.7% |
| Angry | 0.8% |
| Calm | 91.8% |
| Surprised | 0.5% |

Feature analysis

Amazon

| Person | 91.1% |

Categories

Imagga

| paintings art | 97.9% |
| pets animals | 1.1% |

Captions

Microsoft

Created by unknown on 2019-11-16

| a black and white photo of a person | 62.6% |
| a person standing in front of a mirror | 52.8% |

Clarifai

Created by general-english-image-caption-blip on 2025-05-03

| a photograph of a woman with a necklace and a necklace | -100% |

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-27

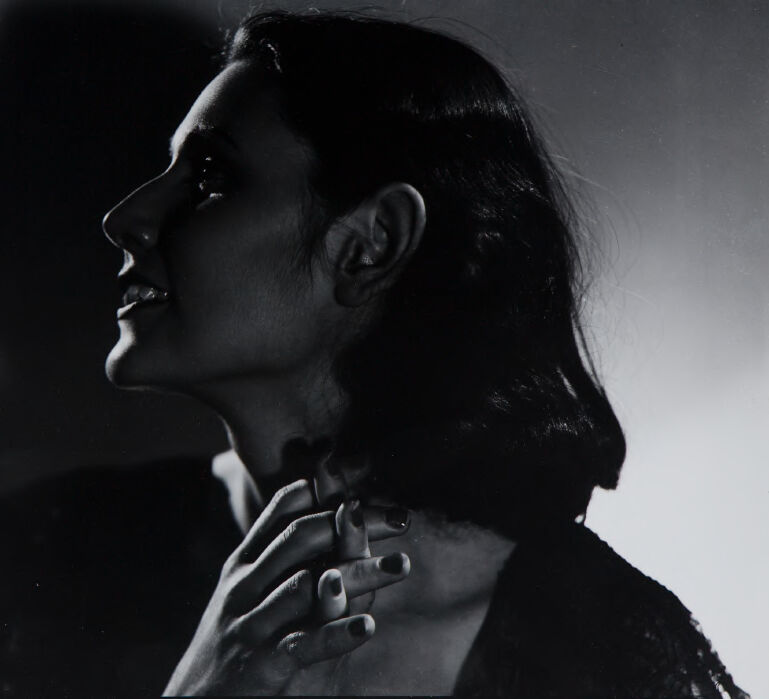

The image is a black-and-white photograph of a woman's profile, with her hand on her neck. The woman has dark hair and is wearing a dark top or jacket. Her hand is resting on her neck, with her fingers interlaced. The background is a gradient of light and dark, with a shadow cast by the woman's head. The overall atmosphere of the image is one of contemplation and introspection, as if the woman is lost in thought.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-27

The image is a black and white photograph of a woman, captured in profile. Her dark hair is styled in a bob, and she wears a dark top or dress. The woman's right hand is raised to her neck, with her fingers curled around it. Her nails are painted dark, matching the color of her attire.

In the background, there is a gradient of light and shadow, with the left side being darker and the right side being lighter. The overall atmosphere of the image is moody and introspective, with the woman's pose and expression conveying a sense of contemplation or melancholy.

The photograph appears to be an artistic or creative work, possibly from the early 20th century, given the style and aesthetic of the image. The use of black and white film and the dramatic lighting suggest a focus on capturing the subject's emotional state rather than simply documenting her physical appearance.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-05-27

The image is a black-and-white photograph of a woman with short hair, possibly taken in the 1960s. She is looking upward, possibly posing for a photo. Her left hand is on her chest, and her right hand is on her neck. She is wearing a black dress with a lace design. The photo has a vintage look, with a slightly blurry effect and some scratches on the surface.

Created by amazon.nova-pro-v1:0 on 2025-05-27

The image is a black-and-white photograph of a woman. She has short hair and is wearing a black dress. She is looking up and to the side, with her head slightly tilted. She has a hand on her neck, and her fingers are gently touching her skin. The background is dark, and the light is focused on her face, creating a dramatic effect.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-04-30

Here's a description of the image:

Overall Impression:

The image is a black and white portrait of a woman, captured in a classic, almost film noir style. The lighting creates strong shadows, enhancing the drama and focusing on the subject's features.

Subject:

- Appearance: The woman has dark hair styled in a bob. She has a prominent nose and delicate features. The expression on her face is subtle, suggesting contemplation or slight amusement.

- Pose: She is posed in a three-quarter profile, looking upwards. Her hands are clasped gently together near her neck, adding to the sense of introspection.

- Clothing: The woman appears to be wearing a dark outfit, possibly a top or jacket.

Lighting and Composition:

- Shadows: The lighting is dramatic. A large shadow is cast on the wall, suggesting a strong light source from the side.

- Contrast: The high contrast between light and shadow emphasizes the woman's face and the texture of her hair and clothing.

- Composition: The composition is balanced, with the woman's face taking up a significant portion of the frame.

Overall Tone:

The portrait evokes a sense of elegance, mystery, and perhaps a touch of vulnerability. The black and white medium and the use of shadows add to the timeless quality of the photograph.

Created by gemini-2.0-flash on 2025-04-30

The black and white photograph depicts a woman in profile. She has dark hair styled in a short, wavy manner that stops around her jawline. Her head is tilted upwards, and she appears to be looking towards something outside of the frame. Her hands are delicately clasped together near her neck, and her fingernails seem to be painted a dark color. The lighting creates a soft gradient on the background, with a stark shadow of her face and head cast behind her. The image has the feel of a vintage portrait, possibly taken with a film camera due to the visible border details and annotations.