Machine Generated Data

Tags

Amazon

created on 2019-11-16

| Tag | Confidence |
| --- | --- |
| Human | 99 |
| Person | 99 |
| Person | 97.1 |
| Person | 96.2 |
| Tie | 94.6 |
| Accessories | 94.6 |
| Accessory | 94.6 |
| Apparel | 90.3 |
| Clothing | 90.3 |
| Person | 87.6 |
| Furniture | 85.5 |
| Person | 83.5 |
| Face | 78.7 |
| People | 73 |
| Indoors | 63.6 |
| Room | 63.6 |
| Living Room | 63.6 |
| Plant | 62.6 |
| Tree | 62.6 |
| Child | 61.8 |
| Kid | 61.8 |
| Girl | 60.2 |
| Female | 60.2 |
| Overcoat | 59.3 |
| Suit | 59.3 |
| Coat | 59.3 |
| Tie | 58.6 |
| Couch | 58.2 |

Clarifai

created on 2019-11-16

Imagga

created on 2019-11-16

| Tag | Confidence |
| --- | --- |
| iron lung | 45.7 |
| respirator | 36.5 |
| breathing device | 27.4 |
| graffito | 23.5 |
| decoration | 21 |
| newspaper | 19.2 |
| device | 18.3 |
| person | 16.8 |
| people | 16.2 |
| man | 16.1 |
| male | 15 |
| product | 14.9 |
| grunge | 12.8 |
| adult | 12.3 |
| business | 12.1 |
| art | 11.7 |
| black | 11.4 |
| style | 11.1 |
| creation | 11.1 |
| city | 10.8 |
| fashion | 10.5 |
| old | 10.4 |
| aged | 9.9 |
| vintage | 9.9 |
| design | 9.6 |
| snow | 9.1 |
| paint | 9 |
| detail | 8.8 |
| room | 8.7 |
| wall | 8.5 |
| studio | 8.4 |
| retro | 8.2 |
| technology | 8.2 |
| dirty | 8.1 |
| looking | 8 |
| close | 8 |
| businessman | 7.9 |
| urban | 7.9 |
| face | 7.8 |
| ancient | 7.8 |
| men | 7.7 |
| modern | 7.7 |
| hand | 7.6 |
| horizontal | 7.5 |
| human | 7.5 |
| one | 7.5 |
| world | 7.4 |
| time | 7.3 |
| travel | 7 |

Google

created on 2019-11-16

| Tag | Confidence |
| --- | --- |
| Photograph | 96.9 |
| People | 93.4 |
| Snapshot | 87.6 |
| Black-and-white | 86.3 |
| Monochrome | 79.4 |
| Photography | 78 |
| Room | 76.5 |
| Stock photography | 68.5 |
| Child | 64.5 |
| Family | 60.5 |
| Art | 58.1 |
| Monochrome photography | 57.8 |
| Vintage clothing | 57 |
| Picture frame | 54.4 |
| Sitting | 54.1 |
| Style | 52.5 |

Color Analysis

Face analysis

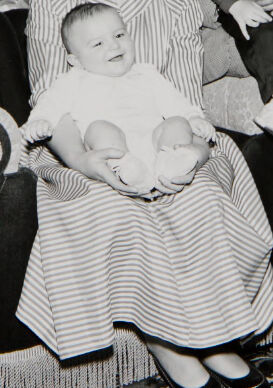


AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 23-37 |
| Gender | Female, 99.1% |
| Happy | 34.6% |
| Fear | 0.5% |
| Angry | 1.9% |
| Confused | 4% |
| Calm | 55.7% |
| Disgusted | 1.7% |
| Surprised | 0.8% |
| Sad | 0.7% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 0-3 |
| Gender | Female, 97.5% |
| Fear | 0% |
| Disgusted | 0% |
| Surprised | 0% |
| Sad | 0% |
| Happy | 99.8% |
| Confused | 0% |
| Angry | 0% |
| Calm | 0.2% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 28-44 |
| Gender | Male, 97.3% |
| Fear | 0.1% |
| Calm | 0% |
| Sad | 0% |
| Angry | 0.3% |
| Disgusted | 0.2% |
| Happy | 98.9% |
| Surprised | 0.2% |
| Confused | 0.2% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 0-4 |
| Gender | Female, 68.2% |
| Calm | 96.8% |
| Surprised | 0.3% |
| Disgusted | 0.3% |
| Happy | 0.3% |
| Angry | 1.5% |
| Confused | 0.3% |
| Sad | 0.5% |
| Fear | 0% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 22-34 |
| Gender | Female, 52.4% |
| Angry | 45.6% |
| Disgusted | 45.1% |
| Happy | 45% |
| Calm | 51.7% |
| Surprised | 45.4% |
| Fear | 45.3% |
| Confused | 45.1% |
| Sad | 46.7% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 34 |
| Gender | Female |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 46 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 1 |
| Gender | Female |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very likely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Likely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| people portraits | 83.7% |
| paintings art | 16% |

Captions

Microsoft

created on 2019-11-16

| Caption | Confidence |
| --- | --- |
| a vintage photo of a group of people posing for the camera | 95.8% |
| a vintage photo of a group of people posing for a picture | 95.6% |
| a group of people posing for a photo | 95.5% |

Text analysis

$13

$13