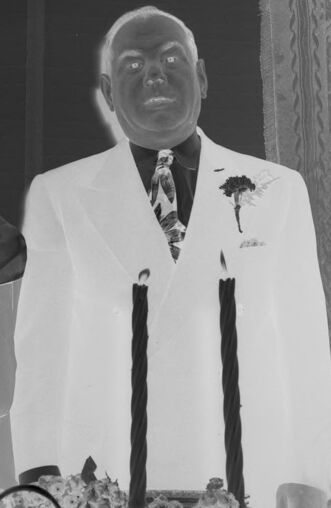

Machine Generated Data

Tags

Amazon

created on 2019-10-29

| Tag | Confidence (%) |
|---|---|
| Accessories | 99.9 |
| Accessory | 99.9 |
| Tie | 99.9 |
| Apparel | 99.8 |
| Clothing | 99.8 |
| Human | 99.2 |
| Person | 99.2 |
| Person | 97.8 |
| Person | 97.1 |
| Coat | 79.9 |
| Sleeve | 75.9 |
| Text | 73.2 |
| Glass | 72.7 |
| Food | 66.3 |
| Meal | 66.3 |
| Suit | 62.3 |
| Overcoat | 62.3 |
| Finger | 62.3 |
| Dish | 61.9 |
| Creme | 61.3 |
| Cake | 61.3 |
| Cream | 61.3 |
| Dessert | 61.3 |
| Icing | 61.3 |
| Plant | 60.3 |
| Flower | 60.3 |
| Blossom | 60.3 |
| Home Decor | 59.5 |
| Linen | 57.9 |

Clarifai

created on 2019-10-29

Imagga

created on 2019-10-29

Google

created on 2019-10-29

| Tag | Confidence (%) |
|---|---|
| Photograph | 97 |
| Snapshot | 84.3 |
| Black-and-white | 74.4 |
| Photography | 62.4 |
| Family | 58.6 |
| Gentleman | 54.5 |
| Monochrome | 54.4 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 36-52 |
| Gender | Male, 59.9% |
| Happy | 7% |
| Angry | 5.7% |
| Disgusted | 1.3% |
| Calm | 4.7% |
| Sad | 3.8% |
| Confused | 2.7% |
| Fear | 17.9% |
| Surprised | 56.9% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 26-40 |
| Gender | Male, 98.2% |
| Happy | 0.3% |
| Calm | 0.1% |
| Angry | 1.2% |
| Surprised | 76.5% |
| Sad | 0% |
| Confused | 0.3% |
| Disgusted | 0.3% |
| Fear | 21.3% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 43-61 |
| Gender | Female, 89.6% |
| Calm | 14% |
| Confused | 1% |
| Sad | 9% |
| Angry | 4.7% |
| Surprised | 1.7% |
| Disgusted | 1.3% |
| Happy | 66.7% |
| Fear | 1.6% |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| people portraits | 82.1% |
| paintings art | 13.9% |
| streetview architecture | 2.6% |

Captions

Microsoft

created on 2019-10-29

| Caption | Confidence |
|---|---|
| a group of people posing for a photo | 98.8% |
| a group of people posing for the camera | 98.7% |
| a group of people posing for a picture | 98.6% |

Text analysis

Amazon

SI

223190A9939U2A13A

JS

г23яя ИАЯЯ З9U2 АТЭА

JS

г23яя

ИАЯЯ

З9U2

АТЭА