Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 18-26 |
| Gender | Female, 94% |
| Calm | 98.9% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.2% |
| Angry | 0.4% |
| Confused | 0.2% |
| Happy | 0.1% |
| Disgusted | 0% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
|---|---|
| Adult | 99.2% |

Categories

Imagga

created on 2018-08-23

| Category | Confidence |
|---|---|
| people portraits | 99.5% |
| events parties | 0.1% |
| paintings art | 0.1% |
| streetview architecture | 0.1% |
| pets animals | 0.1% |

Captions

Microsoft

created by unknown on 2018-08-23

| Caption | Confidence |
|---|---|
| a group of people sitting posing for the camera | 99.1% |
| a group of people posing for a photo | 98.9% |
| a group of people sitting posing for a photo | 98.8% |

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip on 2025-05-26

a photograph of a family portrait of a man and woman with a dog

Created by general-english-image-caption-blip-2 on 2025-07-09

an old black and white photo of a family with a dog

OpenAI GPT

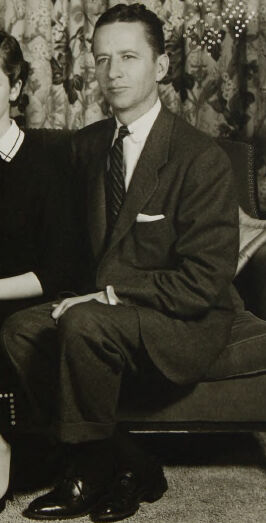

Created by gpt-4o-2024-11-20 on 2025-06-06

This image depicts a vintage family portrait set in a formal living room. The group is seated on a sofa with a patterned floral curtain as the backdrop. The room includes traditional furnishings such as side tables with lamps on either side of the sofa. A small dog sits on the floor near one individual, and another person holds a small cat. The text “DENVER LINCOLN LOG” is visible across the center of the photo. The setting and attire suggest a mid-20th-century time period.

Created by gpt-4o-2024-08-06 on 2025-06-06

The image is a black and white family portrait featuring four individuals sitting on a couch in a living room setting. Two adults are positioned on either side, and in between them are a child and another adult. The child is holding a small pet, which appears to be a cat. There are two side tables flanking the couch, each with a table lamp. A dog is sitting on the floor in front of the family, on the left side of the image. Behind them, there is a floral-patterned curtain. The text "DENVER LARSON 3095" is visible in the lower part of the image.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-10

The image shows a family portrait of four people - a woman, a man, and two children, a boy and a girl. They are sitting together in a living room setting, with floral curtains in the background. The family appears to be dressed formally, with the adults wearing suits and the children wearing formal attire as well. There is also a small dog sitting on the floor in front of the family. The image has a vintage, black and white aesthetic, suggesting it was taken some time in the past.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-10

This appears to be a formal family portrait from around the 1950s, taken in what looks like a living room. The family of four is seated on a couch with floral-patterned curtains in the background and matching table lamps on either side. They are all formally dressed - the adults in dark business attire and the young boy in a suit with a bow tie. There are two pets in the photo: what appears to be a terrier-type dog sitting on the floor, and a Siamese cat being held by one of the family members. The photo has a professional studio quality to it, with good lighting and composition. On the floor, there appears to be some text that might be "GRAND OPENING 1950" or similar lettering.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-06

The image is a black-and-white photograph of a family of four posing for a photo in their living room. The family consists of a mother, father, son, and daughter. The mother is wearing a dark dress with a bow at the neckline, the father is wearing a suit and tie, the son is wearing a dark suit and bow tie, and the daughter is wearing a dark dress with white polka dots on the skirt. They are all sitting on a couch, with the mother and father on either side of the son and daughter.

There is a small dog sitting on the floor in front of the mother's feet. There are two end tables, one on either side of the couch, each with a lamp on it. The background of the image shows a floral curtain behind the couch, and the walls of the room are painted a light color. The overall atmosphere of the image is one of warmth and happiness, as the family appears to be enjoying each other's company.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-06

The image is a black-and-white photograph of a family of four, including two adults and two children, posing for a formal portrait in their living room. The family is seated on a couch, with the mother on the left, wearing a dark dress and heels, and the father on the right, dressed in a suit and tie. Between them are two children, a boy and a girl, both dressed in dark attire.

In front of the couch, two dogs are sitting on the floor, adding to the family's warm and intimate atmosphere. The background of the image features a floral-patterned curtain, which adds a touch of elegance and sophistication to the overall scene. Two lamps are placed on either side of the couch, providing soft lighting that enhances the warm and cozy ambiance of the room.

Overall, the image presents a heartwarming and nostalgic portrayal of a family's love and connection, captured in a moment of quiet intimacy and shared joy.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-07

The black-and-white photograph shows a family sitting on a couch in a room. The family consists of a man, a woman, and two children. The man is wearing a suit and tie, while the woman is wearing a dress. The two children are sitting on the couch with them. A dog is lying on the floor in front of them. The room has a carpeted floor and a curtain hanging on the wall behind them.

Created by amazon.nova-pro-v1:0 on 2025-06-07

The image is a black-and-white photo of a family of five people sitting on a couch. They are all dressed formally, with the men wearing suits and ties and the women wearing dresses. The man on the right is holding a small dog, and the woman on the left is holding a cat. The family is sitting in front of a curtain with a floral pattern, and there are two lamps on either side of the curtain.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-10

Certainly! Here's a description of the image:

Overall Impression:

The photograph appears to be a formal family portrait from the mid-20th century. It's a black and white image, likely a snapshot from the era.

Composition:

- Subject: A family of four is seated on a sofa. There's a mother, a father, a son, and a daughter. A small dog is lying on the floor near the mother. A cat is also visible, being held by the daughter.

- Setting: The family is indoors, likely in their living room. The background features patterned curtains. There are lamps on side tables on either side of the sofa.

- Arrangement: The family is neatly arranged, suggesting a posed photograph. The parents sit on the right side of the sofa, while the children are on the left side. The dog is front and center at the base of the sofa.

- Style: The style is typical of family portraits from the mid-century period, with a focus on neatness, formality, and a sense of togetherness.

Details:

- Clothing: Everyone is dressed in formal attire. The father wears a suit and tie, the mother a dark dress, the son a suit and bow tie, and the daughter a dark outfit.

- Lighting: The lighting is soft and even, possibly from a flash or soft light source.

- Mood/Tone: The image exudes a sense of formality, perhaps even a hint of reserve. It seems to reflect a time when families took special care with their image.

Possible Interpretation:

This is likely a cherished family portrait taken to commemorate a special occasion or simply as a record of family life. It offers a glimpse into the fashion, décor, and social values of its time.

Created by gemini-2.0-flash on 2025-05-10

Here's a description of the image:

This is a black and white photograph of a family portrait, likely taken in the mid-20th century. The family is seated on a sofa in what appears to be a living room setting.

From left to right, the family members are:

Mother: A woman with neatly styled short hair, wearing a dark dress with a decorative brooch.

Son: A young boy in a suit with a bow tie, sitting with his arms crossed.

Daughter: A young woman in a dark dress with a white collar. She is holding a small cat.

Father: A man in a suit and tie, seated on the end of the sofa.

The family is positioned in front of a patterned curtain. There are lamps and side tables on either side of the sofa. A dog lies on the floor in the lower left corner of the photo.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-07

This black-and-white photograph captures a family portrait, likely taken in the mid-20th century. The family consists of four individuals and a pet dog. Here is a detailed description of the image:

Setting: The portrait is taken indoors, in a living room setting. The backdrop features patterned curtains with a floral design. There are two lamps with lampshades on either side of the family, placed on end tables. The furniture includes a sofa and a side table with decorative items.

Family Members:

- Mother: Seated on the left, she is wearing a dark dress with a necklace and has short, curly hair. She is smiling and appears relaxed.

- Son: Standing in the center, he is wearing a suit with a bow tie. He looks young, possibly a preteen or young teenager, and has a neutral expression.

- Daughter: Seated next to the son, she is wearing a dark dress with a white collar and has her hair styled in a bob. She has a serious expression.

- Father: Seated on the right, he is wearing a suit and tie. He has a composed demeanor and is looking directly at the camera.

Pet: A small, shaggy dog is lying on the floor in front of the mother. The dog is wearing a collar and appears to be calm and well-behaved.

Clothing and Accessories: The family is dressed formally, suggesting that this might be a special occasion or a professional portrait. The children's clothing, particularly the son's suit and bow tie, indicates a sense of formality.

Atmosphere: The overall atmosphere of the photograph is formal and composed, reflecting the typical style of family portraits from the mid-20th century. The setting and attire suggest a middle-class family from that era.

This image provides a glimpse into the family dynamics and fashion of the mid-20th century, capturing a moment of togetherness and formality.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-18

The image is a black-and-white photograph depicting a family of four sitting on a couch in a formal pose, likely taken in a living room. The setting includes floral-patterned curtains in the background and two side tables with lamps on either side. The family consists of two adults, one child, and a dog.

- The adult on the left appears to be a woman, dressed in a dark dress with a bow at the collar. She is holding a small dog on her lap.

- The adult on the right appears to be a man, dressed in a suit with a tie. He has a serious expression.

- The child, seated between the two adults, is wearing a formal outfit with a bow tie.

- The dog has a fluffy coat and is resting on the floor next to the woman.

The overall style of the photograph suggests it was taken in the mid-20th century. The composition and attire reflect a formal portrait typical of that era.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-18

This is a black-and-white vintage family portrait featuring four individuals and a dog. There are two adults and two children, all dressed formally. The woman on the left is wearing a dark dress with a bow at the neck, while the boy next to her is in a suit with a bow tie. The woman on the right is holding a small cat and is dressed in a dark blouse with a white collar. The man on the far right is wearing a suit with a tie. The dog is lying on the floor in front of the woman on the left. The background includes patterned curtains and two table lamps on either side of the couch. The image appears to be from the mid-20th century based on the clothing and style.