Machine Generated Data

Face analysis

AWS Rekognition

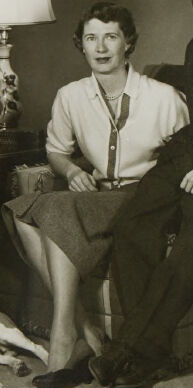

| Attribute | Value |
| --- | --- |
| Age | 36-44 |
| Gender | Female, 100% |
| Calm | 49.3% |
| Surprised | 33.5% |
| Happy | 13.4% |
| Fear | 6.7% |
| Confused | 6.1% |
| Angry | 3.7% |
| Sad | 2.5% |
| Disgusted | 0.7% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Person | 99.2% |

Categories

Imagga

created on 2018-08-23

| Category | Confidence |
| --- | --- |
| people portraits | 75.3% |
| interior objects | 16% |
| paintings art | 5.2% |
| pets animals | 1.8% |
| events parties | 0.9% |
| text visuals | 0.3% |
| food drinks | 0.3% |
| streetview architecture | 0.1% |

Captions

Microsoft

created by unknown on 2018-08-23

| Caption | Confidence |
| --- | --- |
| a group of people posing for a photo | 96.1% |
| a group of people posing for the camera | 96% |
| a group of people posing for a picture | 95.9% |

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip on 2025-05-26

a photograph of a man and woman sitting on a chair with a dog

Created by general-english-image-caption-blip-2 on 2025-07-09

a family poses for a photo in a living room with a dog

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-06

The image depicts a vintage family portrait with four individuals sitting together in a living room setting. The group consists of two men and two women, with one of the women wearing a patterned dress and the other dressed in a white blouse and skirt. The setting features a stone fireplace on the left, decorative wall mirrors, and a lamp positioned on a table behind the group. A dog, resembling a beagle, is lying on the floor in front of them. The room exudes a warm, mid-century aesthetic.

Created by gpt-4o-2024-08-06 on 2025-06-06

The image is a vintage black and white photograph depicting a family of four seated in a living room setting. On the left, a fireplace with a stone facade is visible, with two candlesticks placed on the mantel. The rest of the living room includes a floor lamp with a decorative base and what seems to be a couch or armchair where the family is seated. A dog is comfortably lying on the floor in front of the family. The photo has a watermark from a studio, "PROOF JAMESON BERNER," indicating it is a proof copy. The wall behind the family has some decorative plates or artwork mounted on it. The overall ambiance suggests a typical mid-20th-century family portrait setup.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-10

The image appears to be a family portrait from the mid-20th century. It shows a group of four people - a man, a woman, and two younger individuals, likely their children - sitting together in a living room setting. There is also a dog present in the image. The room has some decorative elements, including wall hangings and lamps. The people are dressed in formal attire, suggesting this is a special occasion or portrait. The overall mood of the image is one of a close-knit family gathering in their home.

Created by us.anthropic.claude-3-opus-20240229-v1:0 on 2025-06-10

The black and white photograph shows a family of four posing together in what appears to be their living room. The room has wood paneling on the walls and a stone fireplace. The family is dressed nicely, with the women wearing dresses and the men in suits and ties. They are seated on chairs with a dog lying on the floor in front of them. The room is decorated with framed pictures on the walls and lamps hanging from the ceiling. Overall, the image captures a formal family portrait taken in a cozy home setting.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-10

This appears to be a formal family portrait from the 1950s, featuring a family of four and their beagle dog. The setting is a living room with a stone fireplace visible on the left and decorative plates on the wall. The family is formally dressed - the young woman on the left wears a patterned dress with a full skirt, the man wears a suit with a striped tie, the woman in the middle wears a light-colored cardigan and skirt, and the young man on the right wears a suit with a tie. The beagle is sitting on the floor in front of them. The photo has a classic mid-century formal portrait style, with careful positioning and lighting. There appears to be some decorative text or signage visible in the background, though it's not clearly legible in this image.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-06

The image is a black and white photograph of a family of four, with a dog, posing for a photo in their living room.

The family consists of a man, a woman, and two children. The man is sitting on a chair, wearing a dark suit and tie. The woman is sitting on a couch, wearing a light-colored blouse and skirt. The girl is sitting on a chair, wearing a dark dress with white trim. The boy is sitting on a couch, wearing a dark suit and tie.

The dog is lying on the floor between the man's feet. It has a white face and brown ears.

The background of the image shows a living room with a fireplace, a lamp, and several plates on the wall. The walls are painted a dark color, and the floor is covered with a rug.

The overall atmosphere of the image is one of warmth and comfort. The family appears to be happy and relaxed, and the dog adds to the sense of coziness. The image suggests that this is a special occasion, possibly a holiday or a family gathering.

The image is likely from the 1950s or 1960s, based on the clothing and hairstyles of the family members. The style of the photograph, with its formal pose and dark background, is also consistent with the aesthetic of the time period.

Overall, the image is a charming and nostalgic portrayal of a family in their home. It captures a moment in time that is both familiar and distant, and invites the viewer to imagine what life was like for this family in the past.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-06

The image depicts a black-and-white photograph of a family of four, including two adults and two children, posing for a portrait in their living room. The family is seated in a formal arrangement, with the father and mother positioned in the center, flanked by their son and daughter.

Family Members:

- Father: Wearing a dark suit, white shirt, and striped tie, he sits on the left side of the image.

- Mother: Dressed in a light-colored blouse and dark skirt, she sits on the right side of the image.

- Son: Wearing a dark suit, white shirt, and tie, he stands behind his mother.

- Daughter: Dressed in a dark dress with a white collar and dark shoes, she stands behind her father.

Pet:

- A beagle lies on the floor in front of the family, adding a touch of warmth to the scene.

Room Decor:

- The room features a fireplace with a stone surround and a mantle adorned with decorative items.

- A lamp with a white shade sits on a table behind the family, providing soft lighting.

- Three plates hang on the wall above the lamp, adding a decorative element to the space.

- The walls are painted a dark color, which contrasts with the lighter tones of the furniture and decor.

Overall:

- The image exudes a sense of formality and tradition, capturing a moment in time when families would gather for formal portraits.

- The use of black and white photography adds a timeless quality to the image, making it feel both nostalgic and enduring.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-07

The image is a black-and-white photograph of a family sitting in a living room. The family consists of two adults and two children, along with a dog. The adults are seated on a couch, while the children are sitting on a chair. The family is posing for a photograph, with the adults smiling and the children looking straight ahead. The living room has a fireplace, a lamp, and a wall with framed pictures and plates. The family's dog is lying on the floor next to them.

Created by amazon.nova-pro-v1:0 on 2025-06-07

A black-and-white image of a family sitting in the living room. There is a dog lying in front of them. The man is sitting on a chair between two women. A boy is sitting on a chair on the right side. Behind them is a fireplace with a lamp and a mirror. On the wall, there are some decorative items, including a frame and a decorative plate.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-10

Here's a description of the image:

This is a black and white family portrait from what appears to be the mid-20th century. The photograph features four people: a man and woman, a teenage boy, and a teenage girl. They are seated in a living room setting.

Setting: The backdrop is a dark wall, possibly with a textured finish. Behind the family, there are three decorative plates on the wall, suggesting a touch of mid-century modern design. To the left, there is a stone fireplace, complete with a decorative mirror and candleholders. A large lamp with a patterned base sits to the right of the man and woman. The room is carpeted.

People:

- The man is seated in a chair. He is wearing a suit and tie.

- The woman is sitting next to him, wearing a skirt, blouse, and a necklace.

- The teenage boy is leaning against his mother wearing a jacket and tie.

- The teenage girl is sitting on the other side of her dad. She wears a dress with a contrasting white edge.

Additional elements: A beagle dog lies on the floor in front of the man.

The overall impression is one of formality and a moment captured from a family's life. There are watermarks from a photography studio.

Created by gemini-2.0-flash on 2025-05-10

This is a black and white photograph of a family of four, along with their dog, posing indoors. The image appears to be taken in a living room, likely in the mid-20th century, based on the clothing and decor.

The family consists of a mother, a father, a daughter, and a son. The father is seated and wearing a dark suit with a striped tie. He has his arm around the daughter, who is seated next to him. She wears a dress with a patterned design and a light-colored trim. At their feet is a beagle-type dog lying down.

The mother is seated to the right of the father, wearing a light-colored blouse and a skirt. The son is standing behind the mother, with his arm around the back of her chair. He is wearing a suit with a tie.

In the background, there is a stone fireplace on the left, and above it are candle holders. Behind the mother and son, the wall features three round decorative items. A table lamp is positioned between the father and the mother.

There is some watermark-type text on the image, which partially obscures the subjects. The overall tone of the photo is formal and posed, typical of family portraits from this era.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-07

The image is a black-and-white photograph of a family posing together in a living room setting. The family consists of four members: a mother, a father, a daughter, and a son, along with a pet dog.

- The mother is seated on the left side of the image, wearing a patterned dress with a white trim.

- The father is standing next to the mother, dressed in a suit and tie.

- The daughter is seated next to the father, wearing a skirt and a sweater.

- The son is standing on the right side of the image, dressed in a suit and tie, similar to the father.

- The pet dog, which appears to be a beagle, is lying on the floor in front of the family members.

The background features a wall with decorative plates arranged in a pattern, a lamp on a small table, and a fireplace on the left side. The overall setting and attire suggest that the photograph was taken in the mid-20th century, likely in the 1950s or 1960s. The family appears to be posing formally for the photograph, possibly for a special occasion or a family portrait.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-18

This is a black-and-white photograph of a family posing for a portrait. The image appears to be from the mid-20th century, based on the clothing styles, hairstyles, and interior decor. The family consists of four individuals: two adults and two children, with a dog sitting between the children.

The adult on the left is wearing a patterned dress with a dark bodice and a lighter-colored skirt, and has her hair styled neatly. She is seated on a chair near a fireplace with a brick mantel, which has some decorative items on it, including what looks like candlesticks.

The adult in the center is dressed in a dark suit with a striped tie, sitting in a chair. He has a serious expression and is looking directly at the camera.

The adult on the right is wearing a light-colored blouse and a pleated skirt, with a belt around her waist. She is seated in a chair and appears to be looking at the camera with a neutral expression.

The child on the right is wearing a dark suit and tie, sitting in a chair. He is looking directly at the camera with a neutral expression.

The child on the left is wearing a patterned dress with a dark bodice and a lighter-colored skirt, with her hair styled neatly. She is seated on the floor next to the dog and appears to be looking at the camera with a slight smile.

In the background, there are framed pictures on the wall, a lamp with a white shade on a side table, and decorative plates or bowls arranged on the wall. The room has a carpeted floor, and the overall atmosphere of the photograph is formal and posed, typical of portrait photography from the mid-20th century.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-18

This is a black-and-white photograph of a family of four seated together in a living room setting. The family consists of a man, a woman, a teenage girl, and a teenage boy, all dressed formally. The man is wearing a suit and tie, the woman is in a blouse and skirt, the girl is wearing a dress with a pattern, and the boy is in a suit jacket and tie. They are seated on a couch with a lamp on a small table between them. A dog is lying on the floor in front of the family. The room has a stone fireplace with candlesticks on the mantel, framed pictures on the wall, and decorative plates hanging on the wall. The text on the image indicates that it is a proof from "Lindson Studio" and "Lindson Dealer."