Machine Generated Data

Face analysis

AWS Rekognition

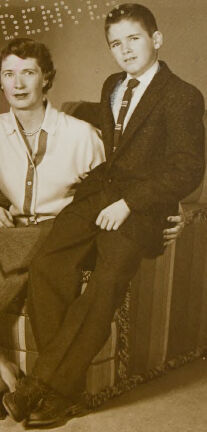

| Attribute | Value |
| --- | --- |
| Age | 18-24 |
| Gender | Male, 98.8% |
| Calm | 51.3% |
| Happy | 20% |
| Confused | 17.8% |
| Surprised | 7.5% |
| Fear | 6.2% |
| Angry | 3.8% |
| Sad | 3.2% |
| Disgusted | 1.1% |

Feature analysis

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Person | 99.1% |

Categories

Imagga

created on 2018-08-23

| Category | Confidence |
| --- | --- |
| interior objects | 32.6% |
| people portraits | 32.5% |
| paintings art | 32.4% |
| pets animals | 1.8% |
| food drinks | 0.2% |
| events parties | 0.2% |
| text visuals | 0.2% |
| streetview architecture | 0.1% |

Captions

Microsoft

created by unknown on 2018-08-23

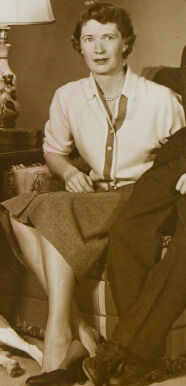

| Caption | Confidence |
| --- | --- |
| a group of people posing for a photo | 94.7% |
| a group of people posing for a picture | 94.6% |
| a group of people posing for the camera | 94.5% |

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip on 2025-05-17

a photograph of a group of people sitting around a dog

Created by general-english-image-caption-blip-2 on 2025-07-09

a family poses for a photo in a living room with a dog

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-06

This is a vintage family portrait featuring four individuals seated in a living room setting. The furnishings include a stone fireplace on the left with decorative candles, a lamp with a patterned base placed on an end table, and wall art consisting of plates and framed items. The group is seated on chairs or sofas, with one visible pet—a dog—lying comfortably near their feet. The room has a warm, classic design typical of mid-20th century interiors.

Created by gpt-4o-2024-08-06 on 2025-06-06

The image depicts a family sitting together in a living room setting. The family consists of four people: two adults and two children. The woman on the left is wearing a patterned dress and is seated on the arm of a chair, next to the man in a suit seated on the chair. Next to him is a woman in a blouse and skirt, followed by a boy in a suit. There is a dog lying on the floor between the adults, partially under the man’s legs. In the background, a fireplace with candles is visible on the left, and a decorative lamp is placed on a small table beside the seated woman. Three decorative plates are hung on the wall behind the group. There is a watermark on the image with the words "PROOF PARROT LAINSON DENVER" indicating it is a proof photograph.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-10

The image appears to be a family portrait from the mid-20th century. It shows a group of four people - two adults and two children - sitting together in a living room setting. The adults are dressed in formal attire, with the woman wearing a polka dot dress and the man wearing a suit and tie. The two children, a boy and a girl, are also dressed nicely. There is a dog sitting on the floor in front of them. The room is decorated with wall hangings and a lamp, creating a cozy and domestic atmosphere.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-10

This appears to be a vintage black and white photograph, likely from the 1940s or 1950s, showing a group of people in a home setting. The room features a stone or brick partial wall, a floor lamp with a shade, and what appears to be some decorative plates or wall hangings. The group includes two women wearing polka dot dresses and two men in suits with ties. A dog, possibly a beagle or similar breed, is sitting on the floor with the group. The overall composition suggests this might be a family photo, with everyone dressed formally for the occasion. The lighting and interior decor are typical of mid-20th century American homes.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-06

The image is a sepia-toned photograph of a family of four, including a dog, posing in their living room.

The family is seated in a formal arrangement, with the woman on the left wearing a dark dress with white polka dots and black heels, sitting in a chair. The man beside her is dressed in a suit and tie, sitting on the floor with his legs crossed. The woman on the right is wearing a light-colored blouse and a dark skirt, sitting in a chair. The boy on the far right is wearing a dark suit and tie, sitting on a couch.

The dog, a beagle, is lying on the floor between the man and the woman on the left. The background of the image features a fireplace with a mirror above it, a lamp, and a wall adorned with plates and pictures. The overall atmosphere of the image suggests a formal family portrait, possibly taken in the 1950s or 1960s.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-06

The image is a sepia-toned photograph of a family of four, including two adults and two children, posing in their living room. The family is seated on a couch and chair, with a dog lying on the floor in front of them.

Family Members:

- A woman wearing a dark dress with white trim sits on the left side of the couch.

- A man wearing a suit and tie sits next to her.

- A woman wearing a light-colored blouse and skirt sits on the right side of the couch.

- A boy wearing a suit and tie sits in a chair to the right of the couch.

Room Decor:

- The room has a fireplace with a stone surround on the left side.

- There are several framed pictures on the wall above the fireplace.

- A lamp with a white shade sits on a table behind the couch.

- Three decorative plates hang on the wall above the lamp.

Overall:

- The image appears to be a formal family portrait taken in the mid-20th century.

- The sepia tone gives the image a vintage feel.

- The family's clothing and hairstyles suggest that the photo was taken in the 1950s or 1960s.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-07

An old photo of a family sitting on a couch in the living room with a dog in front of them. The woman on the left is wearing a polka-dot dress, while the man in the middle is wearing a suit and tie. The woman on the right is wearing a blazer. The living room has a lamp, a fireplace, and a shelf with picture frames. The photo has a watermark that reads "Photographs are our finest finish."

Created by amazon.nova-pro-v1:0 on 2025-06-07

The image is a vintage photograph of a family sitting in a living room. The family consists of four members: two women and two men. The man on the left is wearing a suit, and the man on the right is wearing a suit and tie. The woman on the left is wearing a dress, and the woman on the right is wearing a blouse and skirt. A dog is sitting in front of them.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-10

Certainly! Here's a description of the image:

Overview

The image is a sepia-toned family portrait from what appears to be the mid-20th century. The family is posed in a living room setting.

Composition

- Family: A family of four (two parents, a teenage daughter, and a teenage son) and a dog are the subjects. They are seated on a chair.

- Dog: A Beagle is resting on the floor, at the feet of the family.

- Setting: The scene is set against a backdrop of a living room. There is a fireplace on the left, some wall decorations, and a lamp on the right side.

- Photo Quality: The photo appears to be a proof print, as evidenced by the stamped text "PROOF LANTSON DENVER" in white and a text at the top of the photo "GOOD TINGS IS OUR FINISH KIND"

People

- Father: The father is in a suit, wearing a tie, and looking at the camera.

- Mother: The mother is wearing a dress, a light-colored blouse. She's sitting next to the son.

- Daughter: The teenage daughter is wearing a dress and seated on a chair.

- Son: The teenage son is wearing a suit and leaning over the chair arm.

Overall Impression

The photo conveys a sense of formality and nostalgia. It's a classic family portrait, capturing a moment in time with a touch of vintage charm.

Created by gemini-2.0-flash on 2025-05-10

The photo depicts a family of four, along with their beagle, posing in what appears to be their living room. The scene evokes a sense of the 1950s or early 1960s, captured in a classic sepia tone.

To the left stands a young woman in a knee-length, patterned dress with a white trim at the hem and neckline, paired with black pumps. She sits next to the father, who is seated and wears a dark suit with a striped tie. His demeanor is confident and he wears a ring on his left hand. The family beagle sits loyally at their feet, looking directly at the camera.

To the right, the mother is seated in what seems to be an upholstered armchair with decorative fringe. She wears a collared top, skirt, and necklace. Next to her stands a teenage boy in a jacket, tie, and dark pants, also looking at the camera with a serious expression.

In the background, the room is decorated with framed artwork or photos and decorative plates hang on the wall. A table lamp sits next to the armchair. There's also a stone fireplace to the left with decorative objects on the mantelpiece.

The image is somewhat obscured by the word "PROOF LAINSCH DENNIS" repeated in dotted white lettering across the frame, suggesting it's a proof copy of a photograph. At the top right corner of the image, the phrase "GOOD THINGS ARE OUR THREE FINNS" can be seen in small print.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-08

The image depicts a vintage family portrait, likely from the mid-20th century, given the style of clothing and the overall setting. Here is a detailed description:

Setting: The family is posed in what appears to be a living room. The room has a fireplace with a stone facade on the left side, and a lamp on a small table to the right. The walls are adorned with decorative plates and a festive "Merry Christmas" sign made of lights.

Family Members:

- Father: He is seated on the floor, wearing a suit and tie, and smiling at the camera. He has a dog sitting next to him.

- Mother: She is seated on a chair, wearing a dress with a cardigan and a skirt. She has a gentle smile and is looking directly at the camera.

- Daughter: Standing to the left, she is wearing a polka-dot dress with a white collar and cuffs. She has a composed expression.

- Son: Standing to the right, he is wearing a suit and tie, similar to his father. He has a slight smile.

Pet: A medium-sized dog is sitting on the floor next to the father, looking calm and relaxed.

Decorations: The "Merry Christmas" sign on the wall suggests that the photo might have been taken during the holiday season. The room is modestly decorated, reflecting a cozy and warm family atmosphere.

The overall mood of the image is warm and familial, capturing a moment of togetherness and celebration.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-18

This is a vintage black-and-white photograph featuring a family of four, along with a dog, posing in a living room setting. The family consists of a man, a woman, a young girl, and a young boy. The daughter is seated on a chair to the left, wearing a patterned dress, while the son is seated on a loveseat to the right, wearing a suit. The parents are standing between them, both dressed formally. The room has a cozy feel with a fireplace, a lamp, and decorative plates on the wall. The dog is lying on the floor in front of the family. The photo appears to be a posed family portrait, typical of mid-20th-century photography.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-18

This is a vintage black-and-white photograph of a family of four sitting together in a living room with their dog. The family consists of a woman in a polka dot dress standing on the left, a man in a suit and tie sitting on a couch in the center, another woman in a blouse and skirt sitting on the right side of the couch, and a boy in a suit sitting next to her. The dog is lying on the floor in front of the couch. The room features a brick fireplace on the left, a lamp on a side table, and decorative plates on the wall. The photograph has a watermark that reads "Price Laminson Denver."