Machine Generated Data

Tags

Amazon

created on 2023-10-25

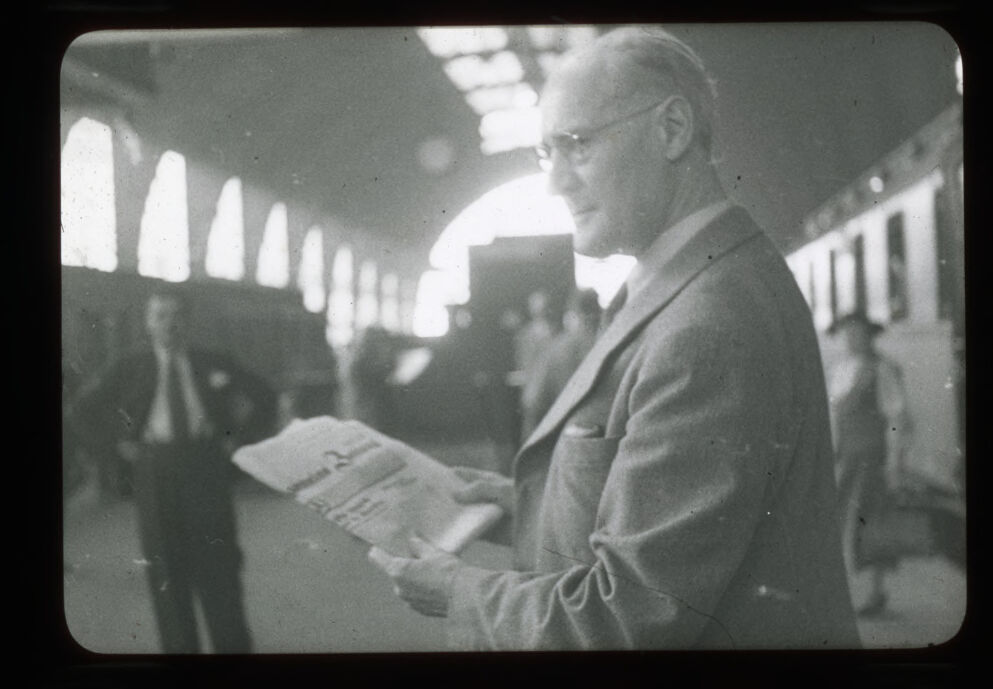

| Tag | Score |
| --- | --- |
| Reading | 100 |
| Person | 99.5 |
| Adult | 99.5 |
| Male | 99.5 |
| Man | 99.5 |
| Person | 96.9 |
| Adult | 96.9 |
| Male | 96.9 |
| Man | 96.9 |
| Photography | 95.8 |
| Person | 94.3 |
| Clothing | 92.8 |
| Formal Wear | 92.8 |
| Terminal | 91.6 |
| Newspaper | 91.5 |
| Text | 91.5 |
| Face | 90.4 |
| Head | 90.4 |
| Coat | 86.6 |
| Person | 84.8 |
| Suit | 74.4 |
| Person | 72.4 |
| Car | 66.6 |
| Transportation | 66.6 |
| Vehicle | 66.6 |
| Portrait | 56.5 |
| Railway | 56.3 |
| Train | 56.3 |
| Train Station | 56.3 |
| Accessories | 55.5 |
| Glasses | 55.5 |

Clarifai

created on 2023-10-15

| Tag | Score |
| --- | --- |
| monochrome | 99.6 |
| people | 99.5 |
| street | 98.3 |
| portrait | 96.7 |
| adult | 96.1 |
| man | 94.5 |
| one | 91.3 |
| window | 90.8 |
| leader | 89.1 |
| vehicle window | 88.8 |
| administration | 87.6 |
| train | 86.6 |
| group | 84.7 |
| newspaper | 84.7 |
| vehicle | 84.1 |
| two | 83.7 |
| transportation system | 83.3 |
| airport | 81.3 |
| chair | 79.1 |
| wear | 78.8 |

Imagga

created on 2019-01-31

| Tag | Score |
| --- | --- |
| man | 42.3 |
| person | 32.4 |
| male | 31.2 |
| bow tie | 29.6 |
| people | 29 |
| senior | 24.4 |
| necktie | 23.7 |
| adult | 22.9 |
| portrait | 20.1 |
| business | 20 |
| businessman | 19.4 |
| groom | 18.3 |
| face | 17 |
| black | 16.8 |
| happy | 16.3 |
| office | 16.2 |
| looking | 16 |
| corporate | 15.5 |
| old | 15.3 |
| bartender | 15.3 |
| couple | 14.8 |
| men | 14.6 |
| smiling | 14.5 |
| handsome | 14.3 |
| spectator | 14 |
| lifestyle | 13.7 |
| smile | 13.5 |
| sitting | 12.9 |
| garment | 12.7 |
| manager | 12.1 |
| mature | 12.1 |
| computer | 12 |
| work | 11.8 |
| suit | 11.7 |
| hand | 11.4 |
| executive | 11.3 |
| one | 11.2 |
| love | 11 |
| communication | 10.9 |
| working | 10.6 |
| worker | 10.5 |
| barbershop | 10 |
| holding | 9.9 |
| professional | 9.9 |
| cheerful | 9.8 |
| shop | 9.6 |
| clothing | 9.6 |
| television | 9.5 |
| serious | 9.5 |
| desk | 9.4 |
| day | 9.4 |
| happiness | 9.4 |
| expression | 9.4 |
| laptop | 9.3 |
| glasses | 9.3 |
| head | 9.2 |
| passenger | 9.1 |
| fun | 9 |
| employee | 8.9 |
| grandfather | 8.9 |
| success | 8.8 |
| elderly | 8.6 |
| inside | 8.3 |
| human | 8.2 |
| gray | 8.1 |
| job | 8 |
| indoors | 7.9 |
| retired | 7.8 |
| one person | 7.5 |
| phone | 7.4 |
| grandma | 7.2 |
| eye | 7.1 |
| pensioner | 7.1 |
| beard | 7.1 |
| to | 7.1 |
| look | 7 |

Google

created on 2019-01-31

| Tag | Score |
| --- | --- |
| Photograph | 97.4 |
| White | 96.5 |
| Black | 94.8 |
| Black-and-white | 94.6 |
| Monochrome photography | 89.7 |
| Snapshot | 88.4 |
| Monochrome | 87.2 |
| Photography | 81.8 |
| Gentleman | 81.4 |
| Stock photography | 68.5 |
| Style | 57.9 |

Microsoft

created on 2019-01-31

| Tag | Score |
| --- | --- |
| person | 97.9 |
| man | 97.1 |
| old | 95.1 |
| bus | 90.1 |
| white | 71.7 |
| black | 68.1 |
| black and white | 58.6 |
| street | 22.8 |
| music | 19.8 |
| monochrome | 19.3 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 43-51 |
| Gender | Male, 100% |
| Calm | 98% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.2% |
| Confused | 1.2% |
| Happy | 0.3% |
| Angry | 0.1% |
| Disgusted | 0.1% |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 98.7% |

Captions

Microsoft

created on 2019-01-31

| Caption | Confidence |
| --- | --- |
| a black and white photo of a man | 91.4% |
| an old black and white photo of a man | 90.1% |
| an old photo of a man | 90% |